Robotics Applications for Embedded Vision

CES 2026: Physical AI moves from concept to system architecture

This market analysis was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. The world’s largest consumer electronics conference demonstrated the technical synergies between automotive and robotics. At CES 2026, there was a clear cross-sector message: Physical AI is the common language across the automotive, robotaxi

Into the Omniverse: OpenUSD and NVIDIA Halos Accelerate Safety for Robotaxis, Physical AI Systems

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. NVIDIA Editor’s note: This post is part of Into the Omniverse, a series focused on how developers, 3D practitioners and enterprises can transform their workflows using the latest advancements in OpenUSD and NVIDIA Omniverse. New NVIDIA safety

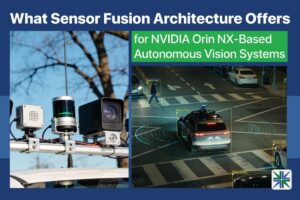

What Sensor Fusion Architecture Offers for NVIDIA Orin NX-Based Autonomous Vision Systems

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Key Takeaways: Why multi-sensor timing drift weakens edge AI perception; how GNSS-disciplined clocks align cameras, LiDAR, radar, and IMUs; the role of Orin NX as a central timing authority for sensor fusion; operational gains from unified time-stamping

Production Software Meets Production Hardware: Jetson Provisioning Now Available with Avocado OS

This blog post was originally published at Peridio’s website. It is reprinted here with the permission of Peridio. The gap between robotics prototypes and production deployments has always been an infrastructure problem disguised as a hardware problem. Teams build incredible computer vision models and robotic control systems on NVIDIA Jetson developer kits, only to hit

Robotics Builders Forum offers Hardware, Know-How and Networking to Developers

On February 25, 2026, from 8:30 am to 5:30 pm ET, Advantech, Qualcomm, and Arrow, in partnership with D3 Embedded, Edge Impulse, and the Pittsburgh Robotics Network, will present Robotics Builders Forum, an in-person conference for engineers and product teams. Qualcomm and D3 Embedded are members of the Edge AI and Vision Alliance, while Edge Impulse

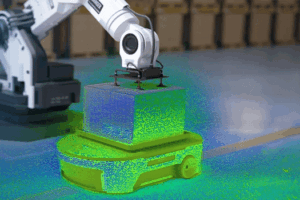

Faster Sensor Simulation for Robotics Training with Machine Learning Surrogates

This article was originally published at Analog Devices’ website. It is reprinted here with the permission of Analog Devices. Training robots in the physical world is slow, expensive, and difficult to scale. Roboticists developing AI policies depend on high quality data—especially for complex tasks like picking up flexible objects or navigating cluttered environments. These tasks rely

NAMUGA Successfully Concludes CES Participation, Official Launch of Next-Generation 3D LiDAR Sensor ‘Stella-2’

Las Vegas, NV, Jan 15 — NAMUGA announced that it successfully concluded the unveiling of its new product, Stella-2, at CES 2026, the world’s largest IT and consumer electronics exhibition, held in Las Vegas, USA, from January 6 to 9. The newly unveiled product, Stella-2, is a solid-state LiDAR jointly developed by NAMUGA and Lumotive. In

NVIDIA Unveils New Open Models, Data and Tools to Advance AI Across Every Industry

This post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Expanding the open model universe, NVIDIA today released new open models, data and tools to advance AI across every industry. These models — spanning the NVIDIA Nemotron family for agentic AI, the NVIDIA Cosmos platform for physical AI, the new NVIDIA Alpamayo family for autonomous vehicle

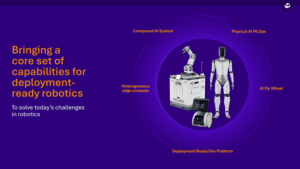

Qualcomm Introduces a Full Suite of Robotics Technologies, Powering Physical AI from Household Robots up to Full-Size Humanoids

Key Takeaways: Utilizing leadership in Physical AI with comprehensive stack systems built on safety-grade, high-performance SoC platforms, Qualcomm’s general-purpose robotics architecture delivers industry-leading power efficiency and scalability, enabling capabilities from personal service robots to next-generation industrial autonomous mobile robots and full-size humanoids that can reason, adapt, and decide. New end-to-end architecture accelerates automation

The Coming Robotics Revolution: How AI and Macnica’s Capture, Process, Communicate Philosophy Will Define the Next Industrial Era

This blog post was originally published at Macnica’s website. It is reprinted here with the permission of Macnica. Just as networking and fiber-optic infrastructure quietly laid the groundwork for the internet economy, fueling the rise of Amazon, Facebook, and the digital platforms that redefined commerce and communication, today’s breakthroughs in artificial intelligence are setting the stage

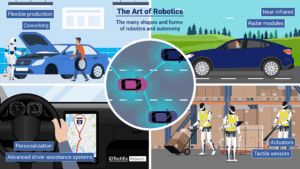

The Art of Robotics and The Growing Intellect of Autonomy

This blog post was originally published at IDTechEx’s website. It is reprinted here with the permission of IDTechEx. ‘Robotics’ takes on many different forms today, from cars pre-empting a driver’s needs and making coffee-stop decisions in their best interest, to humanoid robots operating in warehouses and cobots assisting humans in production lines. IDTechEx’s portfolio of

Upcoming Webinar Explores Real-time AI Sensor Fusion

On December 17, 2025, at 9:00 am PST (12:00 pm EST), Alliance Member companies e-con Systems, Lattice Semiconductor, and NVIDIA will deliver a joint webinar “Real-Time AI Sensor Fusion with e-con Systems’ Holoscan Camera Solutions using Lattice FPGA for NVIDIA Jetson Thor Platform.” From the event page: Join an exclusive webinar hosted by e-con Systems®,

Humanoid Robots 2025: The Race to Useful Intelligence

This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. AI, dexterity, and cost reduction are converging to bring humanoids from prototypes to real-world deployment. Key Takeaways: The global humanoid robot market will grow to US$51 billion by 2035. Three adoption waves have

Reconstruct a Scene in NVIDIA Isaac Sim Using Only a Smartphone

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Building realistic 3D environments for robotics simulation can be a labor-intensive process. Now, with NVIDIA Omniverse NuRec, you can complete the entire process using just a smartphone. This post walks you through each step—from capturing photos using an iPhone

STMicroelectronics Empowers Data-Hungry Industrial Transformation with Unique Dual-Range Motion Sensor

Nov 6, 2025, Geneva, Switzerland. Unique MEMS innovation means deeper contextual awareness for monitoring and safety equipment, also in harsh environments. Richly detailed motion and event tracking with simultaneous, independent sensing in high-g and low-g ranges. Integrates ST’s advanced in-sensor edge AI for autonomy, performance, and power saving. STMicroelectronics (NYSE: STM), a global semiconductor leader serving customers

NVIDIA Contributes to Open Frameworks for Next-generation Robotics Development

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. At the ROSCon robotics conference, NVIDIA announced contributions to the ROS 2 robotics framework and the Open Source Robotics Alliance’s new Physical AI Special Interest Group, as well as the latest release of NVIDIA Isaac ROS. This

Open-source Physics Engine and OpenUSD Advance Robot Learning

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. The Newton physics engine and enhanced NVIDIA Isaac GR00T models enable developers to accelerate robot learning through unified OpenUSD simulation workflows. Editor’s note: This blog is a part of Into the Omniverse, a series focused on how

Upcoming Seminar Explores the Latest Innovations in Mobile Robotics

On October 22, 2025, at 9:00 am PT, Alliance Member company NXP Semiconductors, along with Avnet, will deliver a free (advance registration required) half-day in-person robotics seminar at NXP’s office in San Jose, California. From the event page: Join us for a free in-depth seminar exploring the latest innovations in mobile robotics with a focus