LLMs and MLLMs

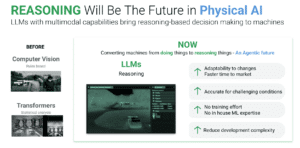

The past decade-plus has seen incredible progress in practical computer vision. Thanks to deep learning, computer vision is dramatically more robust and accessible, and has enabled compelling capabilities in thousands of applications, from automotive safety to healthcare. But today’s widely used deep learning techniques suffer from serious limitations. Often, they struggle when confronted with ambiguity (e.g., are those people fighting or dancing?) or with challenging imaging conditions (e.g., is that shadow in the fog a person or a shrub?). And, for many product developers, computer vision remains out of reach due to the cost and complexity of obtaining the necessary training data, or due to lack of necessary technical skills.

Recent advances in large language models (LLMs) and their variants, such as vision language models (VLMs, which comprehend both images and text), hold the key to overcoming these challenges. VLMs are an example of multimodal large language models (MLLMs), which integrate multiple data modalities such as language, images, audio, and video to enable complex cross-modal understanding and generation tasks. MLLMs represent a significant evolution in AI by combining the capabilities of LLMs with multimodal processing to handle diverse inputs and outputs.

The purpose of this portal is to build awareness of, and provide education on, the challenges and opportunities in using LLMs, VLMs, and other types of MLLMs in practical applications, especially applications involving edge AI and machine perception. The content that follows (which is updated regularly) discusses these topics. As a starting point, we encourage you to watch the recording of the symposium “Your Next Computer Vision Model Might be an LLM: Generative AI and the Move From Large Language Models to Vision Language Models,” sponsored by the Edge AI and Vision Alliance. A preview video of the symposium introduction by Jeff Bier, Founder of the Alliance, follows:

If there are topics related to LLMs, VLMs or other types of MLLMs that you’d like to learn about and don’t find covered below, please email us at [email protected] and we’ll consider adding content on these topics in the future.

View all LLM and MLLM Content

“A View From the 2025 Embedded Vision Summit (Part 1),” a Presentation from the Edge AI and Vision Alliance

Jeff Bier, Founder of the Edge AI and Vision Alliance, welcomes attendees to the Embedded Vision Summit on May 21, 2025. Bier provides an overview of the edge AI and vision market opportunities, challenges, solutions and trends. He also introduces the Edge AI and Vision Alliance and the resources it offers for both

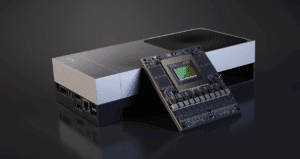

NVIDIA Blackwell-powered Jetson Thor Now Available, Accelerating the Age of General Robotics

News Summary: NVIDIA Jetson AGX Thor developer kit and production modules, robotics computers designed for physical AI and robotics, are now generally available. Over 2 million developers are using NVIDIA’s robotics stack, with Agility Robotics, Amazon Robotics, Boston Dynamics, Caterpillar, Figure, Hexagon, Medtronic and Meta among early Jetson Thor adopters. Jetson Thor, powered by NVIDIA

“The Future of Visual AI: Efficient Multimodal Intelligence,” a Keynote Presentation from Trevor Darrell

Trevor Darrell, Professor at the University of California, Berkeley, presents the “The Future of Visual AI: Efficient Multimodal Intelligence” keynote at the May 2025 Embedded Vision Summit. AI is on the cusp of a revolution, driven by the convergence of several breakthroughs. One of the most significant of these advances is the development of large language

Maximize Robotics Performance by Post-training NVIDIA Cosmos Reason

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. First unveiled at NVIDIA GTC 2025, NVIDIA Cosmos Reason is an open and fully customizable reasoning vision language model (VLM) for physical AI and robotics. The VLM enables robots and vision AI agents to reason using prior

Implementing Multimodal GenAI Models on Modalix

This blog post was originally published at SiMa.ai’s website. It is reprinted here with the permission of SiMa.ai. It has been our goal since starting SiMa.ai to create one software and hardware platform for the embedded edge that empowers companies to make their AI/ML innovations come to life. With the rise of Generative AI already

“Customizing Vision-language Models for Real-world Applications,” a Presentation from NVIDIA

Monika Jhuria, Technical Marketing Engineer at NVIDIA, presents the “Customizing Vision-language Models for Real-world Applications” tutorial at the May 2025 Embedded Vision Summit. Vision-language models (VLMs) have the potential to revolutionize various applications, and their performance can be improved through fine-tuning and customization. In this presentation, Jhuria explores the concept and shares insights on domain

XR Tech Market Report

Woodside Capital Partners (WCP) is pleased to share its XR Tech Market Report, authored by senior bankers Alain Bismuth and Rudy Burger, and by analyst Alex Bonilla. Why we are interested in the XR ecosystem: Investors have been pouring billions of dollars into developing enabling technologies for augmented reality (AR) glasses aimed at the consumer market,

The Era of Physical AI is Here

This blog post was originally published at SiMa.ai’s website. It is reprinted here with the permission of SiMa.ai. The AI landscape is undergoing a monumental shift. After a decade where AI flourished in the cloud, scaled by hyperscalers, we are now entering the era of Physical AI. Physical AI is poised to touch every facet

R²D²: Boost Robot Training with World Foundation Models and Workflows from NVIDIA Research

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. As physical AI systems advance, the demand for richly labeled datasets is accelerating beyond what we can manually capture in the real world. World foundation models (WFMs), which are generative AI models trained to simulate, predict, and

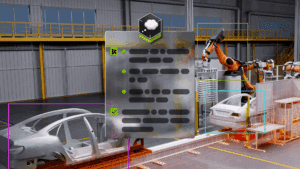

“LLMs and VLMs for Regulatory Compliance, Quality Control and Safety Applications,” a Presentation from Camio

Lazar Trifunovic, Solutions Architect at Camio, presents the “LLMs and VLMs for Regulatory Compliance, Quality Control and Safety Applications” tutorial at the May 2025 Embedded Vision Summit. By using vision-language models (VLMs) or combining large language models (LLMs) with conventional computer vision models, we can create vision systems that are able to interpret policies and

Collaborating With Robots: How AI Is Enabling the Next Generation of Cobots

This blog post was originally published at Ambarella’s website. It is reprinted here with the permission of Ambarella. Collaborative robots, or cobots, are reshaping how we interact with machines. Designed to operate safely in shared environments, AI-enabled cobots are now embedded across manufacturing, logistics, healthcare, and even the home. But their role goes beyond automation—they

R²D²: Building AI-based 3D Robot Perception and Mapping with NVIDIA Research

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Robots must perceive and interpret their 3D environments to act safely and effectively. This is especially critical for tasks such as autonomous navigation, object manipulation, and teleoperation in unstructured or unfamiliar spaces. Advances in robotic perception increasingly

A World’s First On-glass GenAI Demonstration: Qualcomm’s Vision for the Future of Smart Glasses

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. Our live demo of a generative AI assistant running completely on smart glasses — without the aid of a phone or the cloud — and the reveal of the new Snapdragon AR1+ platform spark new possibilities for

We Built a Personalized, Multimodal AI Smart Glass Experience — Watch It Here

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. Our demo shows the power of on-device AI and why smart glasses make the ideal AI user interface. Gabby walks into a gym while carrying a smartphone and wearing a pair of smart glasses. Unsure of where

AI Blueprint for Video Search and Summarization Now Available to Deploy Video Analytics AI Agents Across Industries

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. The age of video analytics AI agents is here. Video is one of the defining features of the modern digital landscape, accounting for over 50% of all global data traffic. Dominant in media and increasingly important for

R²D²: Unlocking Robotic Assembly and Contact Rich Manipulation with NVIDIA Research

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. This edition of NVIDIA Robotics Research and Development Digest (R²D²) explores several contact-rich manipulation workflows for robotic assembly tasks from NVIDIA Research and how they can address key challenges with fixed automation, such as robustness, adaptability, and