Cameras and Sensors for Embedded Vision

While analog cameras are still used in many vision systems, this section focuses on digital image sensors—usually either a CCD or CMOS sensor array that operates with visible light. However, this definition shouldn’t constrain the technology analysis, since many vision systems can also sense other types of energy (IR, sonar, etc.).

The camera housing has become the entire chassis for a vision system, leading to the emergence of “smart cameras” with all of the electronics integrated. By most definitions, a smart camera supports computer vision, since the camera is capable of extracting application-specific information. However, as both wired and wireless networks get faster and cheaper, there still may be reasons to transmit pixel data to a central location for storage or extra processing.

A classic example is cloud computing using the camera on a smartphone. The smartphone could be considered a “smart camera” as well, but sending data to a cloud-based computer may reduce the processing performance required on the mobile device, lowering cost, power, weight, etc. For a dedicated smart camera, some vendors have created chips that integrate all of the required features.
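To make the trade-off between on-camera processing and transmitting pixel data more concrete, the following minimal sketch estimates the data rate of an uncompressed video stream. The function name and the example resolution, bit depth, and frame rate are illustrative assumptions, not figures from this article; real systems usually compress video before sending it.

```python
def raw_bandwidth_mbps(width, height, bits_per_pixel, fps):
    """Return the uncompressed video data rate in megabits per second.

    Uses 1 Mbit = 1e6 bits. Ignores compression, blanking intervals,
    and protocol overhead, so it is only a rough upper-level estimate.
    """
    return width * height * bits_per_pixel * fps / 1e6


if __name__ == "__main__":
    # Hypothetical stream: 1920x1080 pixels, 24 bits per pixel, 30 frames/s.
    rate = raw_bandwidth_mbps(1920, 1080, 24, 30)
    print(f"{rate:.1f} Mbit/s")  # prints "1493.0 Mbit/s"
```

Even this modest hypothetical stream needs roughly 1.5 Gbit/s uncompressed, which illustrates why extracting application-specific information in the camera can be attractive even as networks get faster.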

Cameras

Until recently, many people would imagine a camera for computer vision as the outdoor security camera shown in this picture. There are countless vendors supplying these products, and many more supplying indoor cameras for industrial applications. Don’t forget about simple USB cameras for PCs. And don’t overlook the billion or so cameras embedded in the mobile phones of the world. These cameras’ speed and quality have risen dramatically, supporting 10+ megapixel sensors with sophisticated image processing hardware.

Consider, too, another important factor for cameras: the rapid adoption of 3D imaging using stereo optics, time-of-flight, and structured light technologies. Trendsetting cell phones now even offer this technology, as do latest-generation game consoles. Look again at the picture of the outdoor camera and consider how much change is about to happen to computer vision markets as new camera technologies become pervasive.
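As a rough illustration of how two of these 3D techniques recover distance, the sketch below applies the standard textbook relations: time-of-flight distance is half the round-trip travel time multiplied by the speed of light, and stereo depth for a rectified camera pair is focal length times baseline divided by disparity. The function names and the example values are assumptions chosen for illustration; production systems add calibration, noise filtering, and, for indirect ToF, phase-based measurement.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum, metres per second


def tof_distance_m(round_trip_s):
    """Distance from a direct time-of-flight measurement.

    The light pulse travels to the object and back, so the one-way
    distance is half of (speed of light * round-trip time).
    """
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def stereo_depth_m(focal_length_px, baseline_m, disparity_px):
    """Depth from disparity for an idealised, rectified stereo pair.

    focal_length_px: focal length expressed in pixels
    baseline_m:      distance between the two camera centres, in metres
    disparity_px:    horizontal pixel shift of the same point between views
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical measurements, for illustration only.
    print(f"ToF:    {tof_distance_m(10e-9):.3f} m")
    print(f"Stereo: {stereo_depth_m(700.0, 0.1, 35.0):.3f} m")
```

Both relations show why precision matters: a time-of-flight sensor must resolve nanosecond-scale timing, and stereo depth error grows quickly as disparity shrinks for distant objects.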

Sensors

Charge-coupled device (CCD) sensors have some advantages over CMOS image sensors, mainly because the electronic shutter of CCDs traditionally offers better image quality with higher dynamic range and resolution. However, CMOS sensors now account for more than 90% of the market, heavily influenced by camera phones and driven by the technology’s lower cost, better integration and speed.

Upcoming Webinar on Industrial 3D Vision with iToF Technology

On February 18, 2026, at 9:00 am PST (12:00 pm EST), and on February 19, 2026, at 11:00 am CET, Alliance Member company e-con Systems, in partnership with onsemi, will deliver a webinar, “Enabling Reliable Industrial 3D Vision with iToF Technology.” From the event page: Join e-con Systems and onsemi for an exclusive joint webinar

OpenMV’s Latest: Firmware v4.8.1, Multi-sensor Vision, Faster Debug, and What’s Next

OpenMV kicked off 2026 with a substantial software update and a clearer look at where the platform is headed next. The headline is OpenMV Firmware v4.8.1 paired with OpenMV IDE v4.8.1, which adds multi-sensor capabilities, expands event-camera support, and lays the groundwork for a major debugging and connectivity upgrade coming with firmware v5. If you’re

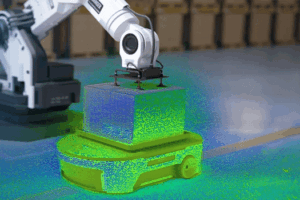

Faster Sensor Simulation for Robotics Training with Machine Learning Surrogates

This article was originally published at Analog Devices’ website. It is reprinted here with the permission of Analog Devices. Training robots in the physical world is slow, expensive, and difficult to scale. Roboticists developing AI policies depend on high quality data—especially for complex tasks like picking up flexible objects or navigating cluttered environments. These tasks rely

Upcoming Webinar on Challenges of Depth of Field (DoF) in Macro Imaging

On January 29, 2026, at 9:00 am PST (12:00 pm EST), Alliance Member company e-con Systems will deliver a webinar, “Challenges of Depth of Field (DoF) in Macro Imaging.” From the event page: We’re excited to invite you to an exclusive webinar hosted by e-con Systems: Challenges of DoF in Macro Imaging. In this session,

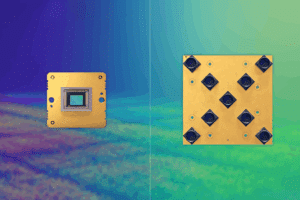

NAMUGA Successfully Concludes CES Participation, Official Launch of Next-Generation 3D LiDAR Sensor ‘Stella-2’

Las Vegas, NV, Jan 15 — NAMUGA announced that it successfully concluded the unveiling of its new product, Stella-2, at CES 2026, the world’s largest IT and consumer electronics exhibition, held in Las Vegas, USA, from January 6 to 9. The newly unveiled product, Stella-2, is a solid-state LiDAR jointly developed by NAMUGA and Lumotive. In

Why Camera Selection is Extremely Critical in Lottery Redemption Terminals

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Lottery redemption terminals represent the frontline of trust between lottery operators and millions of players. The interaction at the terminal carries high stakes: money changes hands, fraud attempts must be caught instantly, and regulators demand

Vision Components to Present VC MIPI IMX454 Multispectral Camera Module at Photonics West

Ettlingen, Germany, January 12, 2026 — Vision Components will present its new VC MIPI IMX454 Camera Module at SPIE Photonics West, 20-22 January in San Francisco, California. The MIPI Camera features Sony’s new multispectral image sensor IMX454 and enables capturing up to 41 wavelengths in one shot. VC will also showcase its lineup of

Deep Learning Vision Systems for Industrial Image Processing

This blog post was originally published at Basler’s website. It is reprinted here with the permission of Basler. Deep learning vision systems are often already a central component of industrial image processing. They enable precise error detection, intelligent quality control, and automated decisions – wherever conventional image processing methods reach their limits. We show how a

Free Webinar Examines Autonomous Imaging for Environmental Cleanup

On March 3, 2026, at 9 am PT (noon ET), The Ocean Cleanup’s Robin de Vries, ADIS (Autonomous Debris Imaging System) Lead, will present the free one-hour webinar “Cleaning the Oceans with Edge AI: The Ocean Cleanup’s Smart Camera Transformation,” organized by the Edge AI and Vision Alliance. Here’s the description, from the event registration

What is a Red Light Camera? A Quick Guide to Vision-Based Traffic Violation Detection

This article was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Intersections remain among the most accident-prone areas in traffic networks, with violations like red-light running leading to severe crashes. Red light cameras automate detection by linking vehicle movement with signal state, capturing clear evidence for enforcement. They