This article was originally published at John Day's Automotive Electronics News. It is reprinted here with the permission of JHDay Communications.

Cameras installed in various locations around a vehicle exterior, in combination with cost-effective, powerful and energy-efficient processors, deliver numerous convenience and protection benefits.

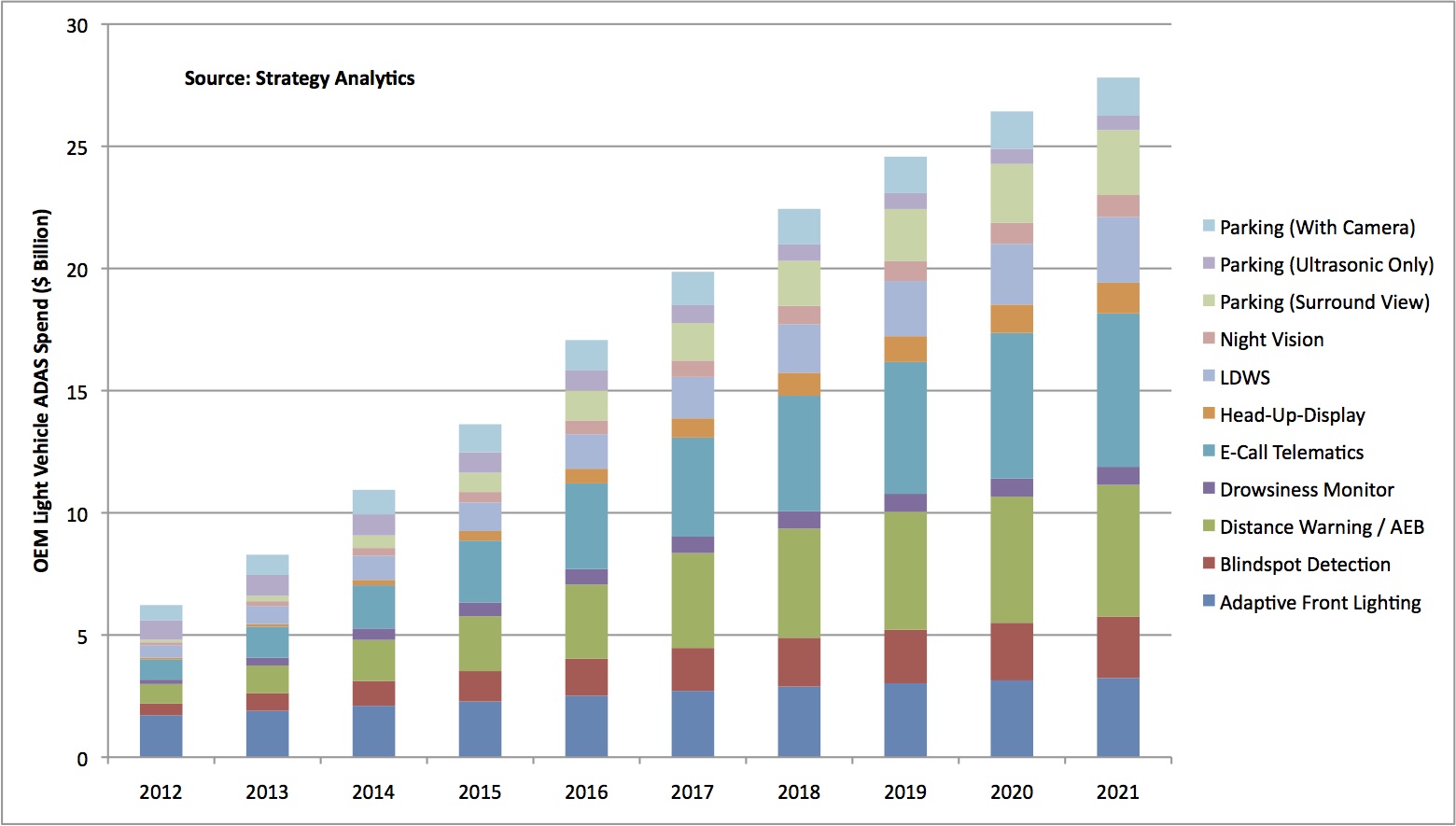

The advanced driver assistance systems (ADAS) market is one of the brightest stars in today's technology sector, with adoption rapidly expanding beyond high-end vehicles into high-volume models. It's also one of the fastest-growing application areas for automotive electronics. Market analysis firm Strategy Analytics, for example, now expects that by 2021, automotive OEMs will be spending in excess of $28B USD per year on a diversity of assistance and safety solutions (Figure 1).

Figure 1. The advanced driver assistance systems (ADAS) market is expected to grow rapidly in the coming years, and in a diversity of implementation forms.

At the heart of ADAS technology are the sensors used to detect what is happening both inside and outside the vehicle. Among externally focused sensor technologies, Strategy Analytics predicts that cameras will offer the best blend of growth and volumes, for the following reasons:

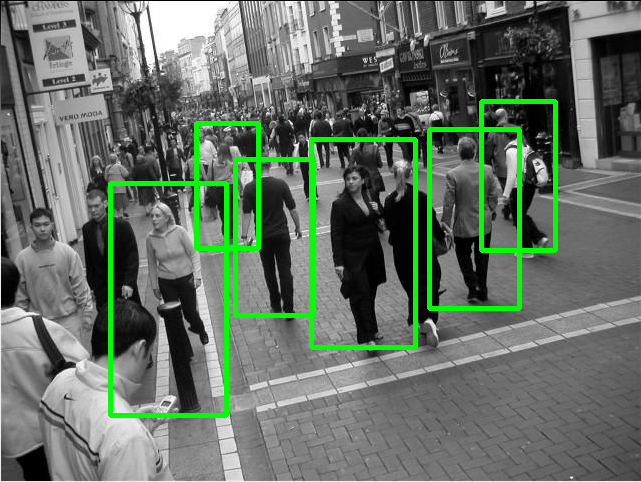

- Some ADAS functions, such as lane departure warning, traffic sign recognition and pedestrian detection, either require a camera or cannot currently be done as effectively using another method (Figure 2).

- Cameras are now price- and performance-competitive in applications traditionally associated with radar or lidar (light detection and ranging) sensors, such as adaptive cruise control and automatic emergency braking.

- For many ADAS functions, a combined radar-plus-camera platform offers the optimal solution. For lower-end, cost-sensitive products, however, Strategy Analytics believes that the camera-only approach is far more likely to be successful than a radar-only alternative.

- Cameras are becoming an integral part of parking-assistance solutions, including high-end surround view systems that include four or more cameras per vehicle.

Figure 2. Pedestrian detection algorithms are much more complex than those for vehicle detection, and a radar-only system is insufficient to reliably alert the driver to the presence of such non-metallic objects.

Strategy Analytics thus expects the total worldwide demand for external-looking automotive cameras to rise from 23 million cameras in 2013 to more than 83 million by 2018, representing a compound annual growth rate of 29%. By 2021, almost 120 million cameras are expected to be fitted, an average of more than one per vehicle.

The key factors behind the rapid ADAS market growth, according to Strategy Analytics, are safety and convenience. Governments across the globe are focusing on improving auto safety through public awareness campaigns, legislation and other means. And increasing traffic congestion and longer commute times are opening drivers' minds to the idea of leveraging increased vehicle automation in order to make those commutes less stressful and, in the fully autonomous future, more time-efficient.
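The 29% growth rate quoted earlier can be checked from the two camera-volume figures. The short sketch below does the arithmetic; the function name `cagr` is only an illustrative helper, not something taken from the Strategy Analytics report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` years apart."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures quoted in the article: 23 million cameras (2013), 83 million (2018).
rate = cagr(23e6, 83e6, 2018 - 2013)
print(f"Compound annual growth rate: {rate:.1%}")
```

The result comes out at roughly 29%, consistent with the forecast.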

Automated image understanding has historically been achievable only using large, heavy, expensive, and power-hungry computers, restricting computer vision's usage to a few niche applications like factory automation. Beyond these few success stories, computer vision has mainly been a field of academic research over the past several decades. However, with the emergence of increasingly capable processors, image sensors, memories, and other semiconductor devices, along with robust algorithms, it's becoming practical to incorporate computer vision capabilities into a wide range of systems, including ADAS designs.

Two previous articles in this series provided an overview of vision processing in ADAS, followed by a more focused look at in-vehicle ADAS applications. This article focuses on vehicle-exterior ADAS applications, discussing implementation details and the tradeoffs of various approaches. And it introduces an industry alliance intended to help product creators incorporate vision capabilities into their ADAS designs, and provides links to the technical resources that this alliance offers.

Rear Camera Applications

In March 2014, the U.S. Department of Transportation’s National Highway Traffic Safety Administration (NHTSA) issued a final ruling that requires rear visibility technology in all new vehicles of less than 10,000 pounds by May 2018. As justification, the NHTSA cites statistics such as 210 fatalities and 15,000 injuries per year caused by back-over collisions. Children under 5 years old account for 31 percent of yearly back-over fatalities, according to the NHTSA; adults 70 years of age and older account for 26 percent.

The NHTSA rear-view mandate only requires a simple camera that captures the view behind the car for display to the driver, either in the center console or at the traditional rear view mirror location (supplementing or even replacing it). However, many car manufacturers are taking the technology to the next step. Incorporating computer vision intelligence enables the back-over prevention system to automatically detect objects and warn the driver (passive safety) or even apply the brakes (active safety) to prevent a collision with a pedestrian or other object.

Front Camera Applications

Forward-facing camera applications have evolved considerably in just a short time, and the pace is accelerating. Early vision processing systems were informational-only and included such functions as lane departure warning, forward collision warning and, in more advanced implementations, traffic sign recognition that alerted the driver to upcoming speed limit changes, sharp curves in the road, etc. Being passive systems, they provided driver feedback (illuminating dashboard icons, accompanied by warning buzzers, for example) but the driver maintained complete control of the vehicle.

Subsequent-generation active safety systems evolved, for example, by migrating from lane departure warning to lane keeping, with the car actively resisting a lane change that isn’t accompanied by a driver-activated turn signal. Active safety similarly now encompasses autonomous emergency braking to prevent forward collisions with other vehicles. Motivations for manufacturers to adopt active safety enhancements include safety rating programs such as Euro NCAP, which requires various ADAS features in order to achieve a five-star rating.

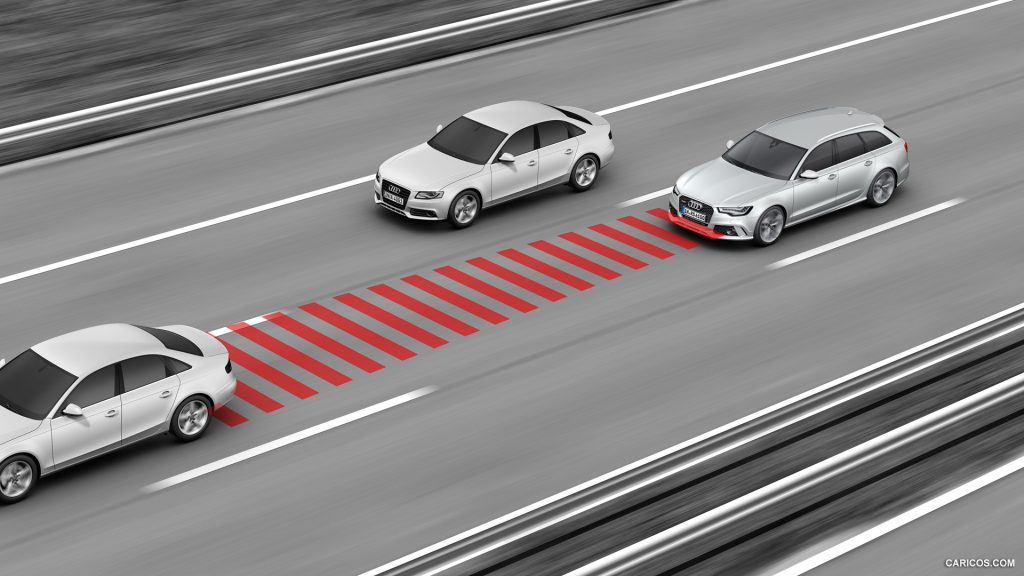

Many additional front camera applications are now finding their way into next-generation cars. Adaptive headlight control, for example, automatically switches from high to low beam mode in response to oncoming traffic. Adaptive cruise control allows the vehicle to automatically maintain adequate spacing behind traffic ahead of it (Figure 3). Intelligent speed adaptation detects the posted speed limit and, expanding on the earlier passive traffic sign recognition, automatically adjusts the speed of the car to compensate. And adaptive suspension systems discern variations in the road ahead, such as potholes, and accordingly adjust the vehicle's suspension to maintain a smooth ride.

Figure 3. Adaptive cruise control automatically adjusts a vehicle's speed to keep pace with traffic ahead, and can incorporate camera-captured and vision processor-discerned speed limit and other road sign data in its algorithms.

Side Camera Applications

One key application of side cameras is blind-spot object detection. Cameras mounted on either side of the vehicle detect pedestrians, bicycles and motorcycles, vehicles and other objects in adjacent lanes. Passive implementations warn the driver with a flashing light, an audible alarm, or by vibrating the steering wheel or seat. Active implementations apply resistance to oppose the driver's attempts to turn the wheel. Blind-spot object detection can be implemented using either radar or vision technologies. But as previously noted, mainstream vehicles are increasingly standardizing on camera-first or camera-only approaches, whose versatility enables them to support numerous ADAS functions.

Side object detection is useful, for example, not only when the vehicle is traveling at high speed but also for assisted or fully autonomous parking systems. Or consider side-view mirrors. A long-standing vehicle feature, they nonetheless have several disadvantages: their view is often obstructed by passengers or the vehicle's chassis, for example, and their added air drag degrades fuel efficiency. High frame rate (approximately 60 fps) side cameras driving displays located in the traditional mirror locations (for driver familiarity) or at the center console, potentially also with other "smart" information such as upcoming-traffic warnings superimposed on top of the cameras' views, are an appealing successor for aerodynamic, ergonomic and other reasons.

Side cameras can also be used to implement various driver convenience features. Natural user interface applications, for example, are becoming common in the living room and may also make their way to the vehicle. Just as a rear-facing camera installed primarily for rear collision avoidance can be re-purposed for gesture recognition to automatically open the trunk, a side camera installed primarily for blind-spot detection can also be leveraged for gesture recognition to open doors and raise and lower windows, in conjunction with key fob proximity detection. Or consider the opportunity to completely dispense with the key fob, relying instead on face recognition to enable vehicle access (and operation) only by specifically registered users.

Surround View Applications

Multi-camera passive ADAS systems are already fairly common in developing countries where they assist drivers with parking in congested spaces. Known as surround view or around-view monitoring systems, they typically comprise four-camera (for cars) or six-camera (for buses, etc.) setups mounted at the front, sides and rear to create a 360-degree "bird’s eye" full-visibility view around the vehicle. Current multi-camera systems employ analog cameras with VGA or D1 resolution; next-generation systems will be digital with 1MP or higher resolution. Similarly, whereas current systems deliver 2D outputs, next-generation systems will offer a depth-enhanced rendering of the scene around the vehicle.

The cameras' outputs are brightness-adjusted and geometrically aligned in preparation for creating a "stitched" unified image. Key to success in this endeavor is synchronization of the image signal processing and image sensor settings inside each of the cameras. If gain or auto white balance settings are divergent, for example, obvious inter-camera "seams" will be present in the resultant combined image. Consider, too, that the lighting conditions around a car can be significantly different from one part of the car to another. The front camera might capture an oncoming vehicle's headlights, for example, while the rear camera sees only a dark empty road. Minimizing artifacts in the combined image requires a centralized algorithm that dynamically adjusts each camera's settings.
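As a rough illustration of the centralized adjustment described above, the following sketch computes one gain multiplier per camera so that all views converge on a common brightness target. It is a simplification under stated assumptions: the function name `harmonize_gains`, the use of whole-image mean luminance, the geometric-mean target and the gain limit are all hypothetical choices made for this example. Production systems typically compare statistics in the overlapping regions between adjacent cameras and also coordinate exposure and white balance.

```python
import math


def harmonize_gains(mean_luma, max_gain=4.0):
    """Return a per-camera gain that pulls each view toward a shared target.

    mean_luma: dict of camera name -> mean luminance (0..255) of its image.
    max_gain:  assumed safety limit; gains are clamped to [1/max_gain, max_gain].
    """
    # Guard against log(0) for a completely black frame.
    safe = {name: max(float(v), 1.0) for name, v in mean_luma.items()}

    # Geometric mean as the common target, so that one very bright view
    # (for example, oncoming headlights) does not dominate the result.
    target = math.exp(sum(math.log(v) for v in safe.values()) / len(safe))

    gains = {}
    for name, luma in safe.items():
        gain = target / luma
        gains[name] = min(max(gain, 1.0 / max_gain), max_gain)
    return gains


# Hypothetical measurements: headlights in front, dark road behind.
measured = {"front": 180.0, "left": 90.0, "right": 90.0, "rear": 45.0}
for camera, gain in harmonize_gains(measured).items():
    print(f"{camera}: gain {gain:.2f}")
```

Here the bright front view is attenuated and the dark rear view is amplified, which is the behavior needed to reduce visible seams in the stitched image.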

Wide-angle cameras are often used for ADAS systems, since they capture much more of the scene than cameras with "normal" focal length lenses. The captured images are distorted, however, particularly if an ultra-wide "fisheye" lens is employed. Subsequent image processing is therefore required, implementing one of several techniques with varying implementation complexity and quality of results. Barrel lens distortion correction, the simplest approach, compensates for the wide-angle lens effect by returning the image to a "flat" state. Projecting the captured image onto a plane (for instance the ground plane, as if the viewpoint were from above the car) delivers an even more "natural" overall outcome, with the exception of objects above the ground plane such as people or sidewalks, which are stretched and no longer look natural. Projecting onto a bowl results in an even more natural image.
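To make the simplest of these techniques concrete, the sketch below models barrel distortion with a single radial coefficient and then inverts it for one image point. The one-parameter model, the coefficient value and the function names `distort` and `undistort` are assumptions chosen for illustration; real calibrations use more coefficients, and ultra-wide fisheye lenses generally need a different projection model altogether.

```python
def distort(xu, yu, k1):
    """Apply one-coefficient radial distortion to an undistorted point.

    Coordinates are normalized, with the origin at the optical center.
    A negative k1 pulls points toward the center (barrel distortion).
    """
    r2 = xu * xu + yu * yu
    scale = 1.0 + k1 * r2
    return xu * scale, yu * scale


def undistort(xd, yd, k1, iterations=20):
    """Invert distort() by fixed-point iteration.

    The model has no convenient closed-form inverse, so start from the
    distorted point and repeatedly refine the undistorted estimate.
    """
    xu, yu = xd, yd
    for _ in range(iterations):
        r2 = xu * xu + yu * yu
        scale = 1.0 + k1 * r2
        xu, yu = xd / scale, yd / scale
    return xu, yu


k1 = -0.2                      # assumed barrel distortion coefficient
original = (0.5, 0.3)          # a point in the ideal "flat" image
seen = distort(*original, k1)  # where the lens actually images it
recovered = undistort(*seen, k1)
print("distorted:", seen)
print("recovered:", recovered)
```

A full correction applies the same mapping to every output pixel, usually through a precomputed lookup table so that the per-frame cost is a single remap.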

A 360-degree view around the vehicle becomes necessary for certain autonomous driving scenarios (Figure 4). One example is the earlier-mentioned self-parking system. Autonomous driving is, perhaps obviously, even more challenging for automated systems to tackle, yet tangible progress is also being made here. Google's highly publicized autonomous vehicle program is one example, as is Audi's autonomous A7, which largely drove itself from Palo Alto, CA to Las Vegas, NV for January's Consumer Electronics Show, and Mercedes-Benz's F 015 concept car, which was also unveiled at CES. More recently, Tesla announced that its Model S electric car will gain highway autonomous driving capabilities via a software update due this summer.

Figure 4. Many of the ADAS technologies discussed in this article are stepping stones on the path to the fully autonomous vehicle of the (near?) future.

Camera Alternatives

Speedy and precise distance-to-object measurement becomes increasingly important as ADAS systems become more active in nature. While, as mentioned earlier, it's possible (and, in high-end implementations, likely, for redundancy and other reasons) to combine cameras with other technologies such as ultrasound and radar for depth-sensing purposes, camera-only setups will be dominant in entry-level and mainstream ADAS systems. And whereas conventional 2D cameras can derive distance approximations adequate for passive guidance, active ADAS and autonomous vehicles benefit greatly from the inherent precision achievable with true 3D camera systems.

Stereo camera setups are likely to be the most common depth-sensing option employed for vehicle-exterior ADAS applications, by virtue of their applicability across wide distance ranges in conjunction with their comparative immunity to illumination variances, various weather-related degradations, and other environmental effects. Alternative depth camera approaches such as structured light and time-of-flight will likely be employed to a greater degree within the vehicle interior, and used for head tracking, precise gesture interface control, and other purposes. However, the stereo technique requires computationally intensive disparity mapping and stereo matching algorithms. And as camera resolutions increase, performance requirements also grow proportionally.
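The stereo matching step mentioned above can be sketched in a few lines. The example below is a minimal illustration, not a production algorithm: it assumes a rectified image pair, searches along a single scanline using a sum-of-absolute-differences (SAD) cost, and converts the winning disparity to distance with the standard pinhole relation Z = f * B / d. The function names, window size, focal length and baseline are all hypothetical values chosen for the example.

```python
def disparity_sad(left_row, right_row, x, half_window=2, max_disparity=16):
    """Estimate disparity at column x of the left scanline.

    Slides a small window over the right scanline and keeps the shift
    with the lowest sum of absolute differences.
    """
    if x + half_window >= len(left_row):
        raise ValueError("window extends past the end of the scanline")
    best_d, best_cost = 0, float("inf")
    for d in range(max_disparity + 1):
        if x - d - half_window < 0:
            break  # window would fall off the left edge of the right image
        cost = sum(
            abs(left_row[x + i] - right_row[x - d + i])
            for i in range(-half_window, half_window + 1)
        )
        if cost < best_cost:
            best_cost, best_d = cost, d
    return best_d


def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Distance in meters from disparity, focal length (pixels) and baseline."""
    if disparity_px <= 0:
        return float("inf")  # no measurable shift: object effectively at infinity
    return focal_px * baseline_m / disparity_px


# Synthetic scanlines: the left view is the right view shifted by 4 pixels.
right = [(i * 37) % 101 for i in range(64)]
left = [0] * 4 + right[:-4]

d = disparity_sad(left, right, x=30)
z = depth_from_disparity(d, focal_px=800.0, baseline_m=0.2)
print(f"disparity = {d} px, depth = {z:.1f} m")
```

Even in this toy form, the work per pixel is the window size times the number of candidate disparities, repeated for every pixel of every frame, which is why the processing load climbs quickly as resolution rises.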

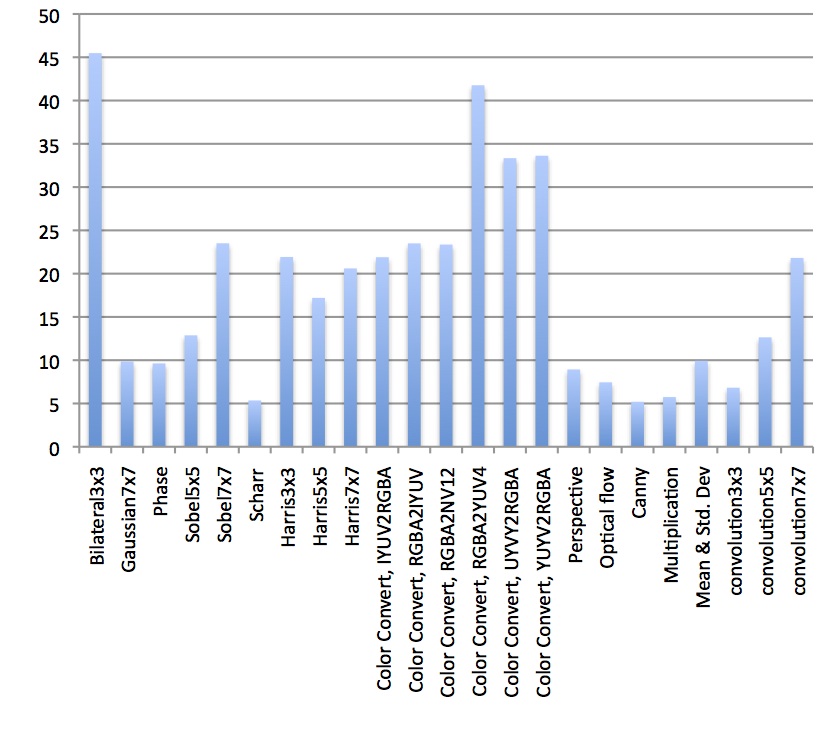

More generally, as ADAS systems evolve from simple scene-view applications to more intelligent object detection and tracking approaches, the level of processing required escalates rapidly. The processors must manage multiple classifiers (for pedestrians, bicycles, motorcycles, vehicles, etc.) as well as optical flow algorithms for motion tracking. Additionally, the algorithms need to work reliably across a range of challenging environments, such as rain and snow, and from bright mid-day sun to the darkest night. As processor performance requirements grow dramatically, challenging power consumption, heat dissipation, size and weight specifications must still be met, and bill-of-materials cost is always a key design metric. Optimizing for any one parameter may force a compromise on another.

Processing Approaches

Since ADAS systems are not yet as pervasive as infotainment systems in vehicles, some manufacturers are in the near term considering augmenting existing infotainment systems with rear cameras for mirror-replacement purposes. Although such a passive ADAS implementation is relatively straightforward, the requirement for the camera to be fully operational within a few hundred milliseconds is challenging in an infotainment system running a high-level operating system. Consider, too, functional safety specifications such as ISO 26262, where the Automotive Safety Integrity Level's (ASIL) highest-possible D rating is often now a mandatory requirement for active safety ADAS systems. Meeting ASIL D may necessitate challenging design approaches such as multi-CPU lockstep fault tolerant processing.
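The idea behind lockstep processing can be loosely illustrated in software: perform the same computation redundantly and accept the result only if the copies agree. The sketch below is an analogy only. Real lockstep designs compare the outputs of duplicated CPU cores in hardware, and nothing here is drawn from ISO 26262 itself; the names `LockstepResult` and `run_in_lockstep` are hypothetical.

```python
from collections import namedtuple

# value:  result of the first replica
# agreed: True only if every replica produced the same result
LockstepResult = namedtuple("LockstepResult", ["value", "agreed"])


def run_in_lockstep(func, *args, replicas=2):
    """Run func redundantly and report whether all replicas agree."""
    results = [func(*args) for _ in range(replicas)]
    agreed = all(r == results[0] for r in results[1:])
    return LockstepResult(results[0], agreed)


def braking_distance_m(speed_mps, decel_mps2):
    """Stopping distance under constant deceleration: v^2 / (2a)."""
    return speed_mps ** 2 / (2.0 * decel_mps2)


outcome = run_in_lockstep(braking_distance_m, 20.0, 8.0)
if outcome.agreed:
    print(f"stopping distance: {outcome.value:.1f} m")
else:
    print("replica mismatch: enter safe state")
```

A disagreement does not reveal which copy is wrong, only that a fault occurred, so the usual response is to fall back to a safe state rather than act on either result.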

One key design consideration, therefore, involves deciding where the computer vision processing takes place. At one end of the spectrum of possible implementations, it occurs on a single, powerful centralized processor. Other ADAS systems are based on multiple distributed processing units, each responsible for a focused set of functions. Such distributed systems have many advantages. Various ADAS functions have emerged at different times and evolved independently in the market. Supplied by distinct vendors and offered as discrete from-factory and aftermarket options by vehicle manufacturers, these standalone systems were therefore optimized for low cost and ease of integration.

Distributed processing units are also simpler to independently test. If a manufacturer wants to include a rear-view camera for example, it can simply add a "smart" module to a base design with a lower-cost central ECU inside. Similarly, a full-blown surround view system can be optionally supported without impacting the foundation cost of an entry-level vehicle. And over time, mass-market vehicles will inherit the well-tested ADAS units of current high-end models, a favorable scenario for consumers whose vehicles currently lack such safety and convenience capabilities.

The mostly-distributed processing model will likely continue to be popular. However, as high-end vehicles both add new features such as autonomy and further optimize existing ADAS capabilities, the integration of data coming from multiple cameras (and other sensors) and processors will be increasingly necessary, enabling unified decision-making, similar to how humans currently process information when driving. Emerging solutions will initially capitalize on the optimization achieved through intelligent partitioning of vision processing tasks not only among various hardware units, but also between hardware and software both intra- and inter-unit. And over time, the processing-partitioning pendulum may swing in the unified-processing direction, analogous to the centralized processor that is the human brain.

The Embedded Vision Summit

On May 12, 2015, in Santa Clara, California, the Embedded Vision Alliance will hold its next Embedded Vision Summit. Embedded Vision Summits are educational forums for product creators interested in incorporating visual intelligence into electronic systems and software. They provide business and technical presentations, inspiring keynote talks, demonstrations, and opportunities to interact with technical experts. These events are intended to:

- Inspire product creators' imaginations about the potential applications for embedded vision technology through exciting presentations and demonstrations,

- Offer practical know-how to help them incorporate vision capabilities into their products, and

- Provide opportunities for them to meet and talk with leading embedded vision technology companies and learn about their offerings.

Please visit the event page for more information on the Embedded Vision Summit and associated hands-on workshops, such as a detailed agenda, keynotes, business and technical presentations, the technology showcase, and online registration.

The Embedded Vision Alliance

Embedded vision technology is enabling a wide range of ADAS and other electronic products that are more intelligent and responsive than before, and thus more valuable to users. And it can provide significant new markets for hardware, software and semiconductor manufacturers. The Embedded Vision Alliance, a worldwide organization of technology developers and providers, is working to empower engineers to transform this potential into reality. Altera, CogniVue, Kishonti Informatics, Texas Instruments, videantis and Xilinx, the co-authors of this article, are members of the Embedded Vision Alliance.

First and foremost, the Alliance's mission is to provide engineers with practical education, information, and insights to help them incorporate embedded vision capabilities into new and existing products. To execute this mission, the Alliance maintains a website providing tutorial articles, videos, code downloads and a discussion forum staffed by a diversity of technology experts. Registered website users can also receive the Alliance’s twice-monthly email newsletter, Embedded Vision Insights, among other benefits.

In addition, the Embedded Vision Alliance offers a free online training facility for embedded vision product developers: the Embedded Vision Academy. This area of the Alliance website provides in-depth technical training and other resources to help engineers integrate visual intelligence into next-generation embedded and consumer devices. Course material in the Embedded Vision Academy spans a wide range of vision-related subjects, from basic vision algorithms to image pre-processing, image sensor interfaces, and software development techniques and tools such as OpenCV.

By Brian Dipert

Editor-in-Chief, Embedded Vision Alliance

Sue Poniatowski

Strategic Applications Manager, Automotive Business Unit, Altera

Tom Wilson

Vice President, Product Management and Marketing, CogniVue

Laszlo Kishonti

CEO, Kishonti Informatics

Ian Riches

Director, Global Automotive Practice, Strategy Analytics

Gaurav Agarwal

Automotive ADAS Marketing and Business Development Manager, Texas Instruments

Marco Jacobs

Vice President, Marketing, videantis

and Dan Isaacs

Director, Embedded Processing Platform Marketing, Xilinx