In order for industrial automation systems to meaningfully interact with the objects they're identifying, inspecting and assembling, they must be able to see and understand their surroundings. Cost-effective and capable vision processors, fed by depth-discerning image sensors and running robust software algorithms, continue to transform longstanding industrial automation aspirations into reality. And, with the emergence of the Industry 4.0 "smart factory," this visual intelligence will further evolve and mature, as well as expand into new applications, as a result becoming an increasingly critical aspect of various manufacturing processes.

Computer vision-based products have already established themselves in a number of industrial applications, with the most prominent one being factory automation, where the application is also commonly referred to as machine vision. Machine vision was one of the first, and today is one of the most mature, high volume computer vision opportunities. And as manufacturing processes become increasingly autonomous and otherwise more intelligent, the associated opportunities for computer vision leverage similarly expand in both scope and robustness.

The term "Industry 4.0" is shorthand for the now-underway fourth stage of industrial evolution, with the four stages characterized as:

- Mechanization, water power, and steam power

- Mass production, assembly lines, and electricity

- Computers and automation

- Cyber-physical systems

Wikipedia introduces its entry for the term Industry 4.0 via the following summary:

Industry 4.0 is the current trend of automation and data exchange in manufacturing technologies. It includes cyber-physical systems, the Internet of things and cloud computing. Industry 4.0 creates what has been called a "smart factory". Within the modular structured smart factories, cyber-physical systems monitor physical processes, create a virtual copy of the physical world and make decentralized decisions. Over the Internet of Things, cyber-physical systems communicate and cooperate with each other and with humans in real time, and via the Internet of Services, both internal and cross-organizational services are offered and used by participants of the value chain.

The following series of essays expands on previously published information about industrial automation, which covered robotics systems and other aspects of computer vision-enabled autonomy at specific steps in the manufacturing process. The capabilities possible at each of these steps have notably improved in recent times, courtesy of deep learning-based algorithms and other technology advancements. And, given the current focus on the "smart factory," it's critical to implement robust interoperability and data interchange between the various manufacturing steps (as well as between the hardware and software present at each of these stages), and with the centralized "cloud" server resources that link the steps together, archive data and notably contribute to the overall data processing chain.

This article provides both background overview and implementation-specific information on the visual intelligence-enabled capabilities that are key to a robust and evolvable Industry 4.0 smart factory infrastructure, and how to support these capabilities at the chip, software, camera and overall system levels. It focuses on processing both at each "edge" stage in the manufacturing process, and within the "cloud" server infrastructure that interconnects, oversees and assists them. It particularly covers three specific vision-enabled capabilities that are critical to a meaningful Industry 4.0 implementation:

- Identification of piece parts, and of assembled subsystems and systems

- Inspection and quality assurance

- Location and orientation during assembly

The contributors, sharing their insights and perspectives on various Industry 4.0 subjects, are all computer vision industry leaders and members of the Embedded Vision Alliance, an organization created to help product creators incorporate vision capabilities into their hardware systems and software applications (see sidebar "Additional Developer Assistance"):

- Industrial camera manufacturer Basler

- Machine vision software developer MVTec

- Vision processor supplier Xilinx

A case study of an autonomous robot system developed by MVTec's partner, Bosch, provides implementation examples that demonstrate concepts discussed elsewhere in the article.

Basler's Perspectives on Visual Intelligence Opportunities in Industry 4.0

Image processing systems built around industrial cameras are already an essential component in automated production. Throughout all steps of production, from the inspection of raw materials and production monitoring (i.e. flaw detection) to final inspections and quality assurance, they are an indispensable aspect of achieving high efficiency and quality standards.

The term Industry 4.0 refers to new process forms and organization of industrial production. The core elements are networking and extensive data communication. The goal is self-organized, more strongly customized and efficient production based on comprehensive data collection and effective exchange of information.

Image processing plays a decisive role in Industry 4.0. Importantly, cameras are becoming smaller and more affordable, even as their performance improves. Where complex systems were once required, today's small, efficient systems can produce the same or better results. This technological progress, together with the possibilities of ever-expanding networking, opens up the potential for new Industry 4.0 applications.

Identification of piece parts, and of assembled subsystems and systems

Embedded vision systems, each comprising a camera module and a processing unit, can conceivably find use in every step of a manufacturing process. This applicability includes in-machine and otherwise difficult-to-access locations, thanks to the vision systems' small size, light weight and low heat dissipation (Figure 1). Such flexibility makes them useful in identifying both piece parts and complete products, a particularly valuable capability with goods that cannot be tracked via conventional barcodes, for example.

Figure 1. Modern industrial cameras' compact form factors and low power consumption enable their use in a wide range of applications and settings (courtesy Basler).

Visual identification is also relevant for individually customized or otherwise uniquely manufactured products. In these and other usage scenarios, a cost-effective embedded vision system can be a beneficial data capture and delivery component, reducing the normally complex logistics of a bespoke manufacturing process.

Cameras utilized in embedded vision systems, whether implemented in small-box or bare-board form factors, are capable of delivering image capture speeds comparable to those of classical machine vision cameras. This is a key capability, given that such performance is often an important parameter in parts identification applications. USB 3, LVDS, and MIPI CSI-2-based interface options correlate to transfer bandwidths of 250-500 MBytes/second, translating into roughly 125-250 frame-per-second capture rates for typical 2 megapixel (i.e. "Full HD") resolution images at 8 bits per pixel.
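The relationship between interface bandwidth and frame rate quoted above can be checked with a few lines of arithmetic. The following is a minimal sketch, assuming uncompressed frames, one byte (8 bits) per pixel, and no protocol overhead; the function name and its parameters are illustrative and not part of any camera vendor's API.

```python
def max_frame_rate(bandwidth_bytes_per_s, width, height, bytes_per_pixel=1):
    """Upper bound on frames per second for uncompressed images."""
    frame_bytes = width * height * bytes_per_pixel
    return bandwidth_bytes_per_s / frame_bytes


# Full HD (1920 x 1080) at an assumed 1 byte per pixel
for mbytes_per_s in (250, 500):
    fps = max_frame_rate(mbytes_per_s * 1_000_000, 1920, 1080)
    print(f"{mbytes_per_s} MBytes/s -> about {fps:.0f} frames/s")
```

With the exact Full HD pixel count the results come out at about 121 and 241 frames per second; rounding each frame to 2 MBytes gives the 125-250 range. Higher bit depths or color formats reduce the achievable rate proportionally.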

Surface inspection and other quality assurance tests

Enhanced inspection and quality assurance can involve not only the visual inspection of the final product but also of individual components prior to their assembly. By eliminating defective piece parts, final product yields will likely improve, saving money. Historically, however, this additional cost savings did not always justify the expense of an additional vision inspection system.

Nowadays, though, as Industry 4.0 manufacturing setups become commonplace, vision systems are increasingly affordable. From a camera standpoint, this financial improvement is primarily the result of ongoing improvements in CMOS sensor technology. Today, even the most cost-effective image sensors frequently deliver sufficient image quality to meet industrial application demands. As a result, board-level cameras can now increasingly be implemented for piece part prequalification, thereby delivering a sophisticated inspection system leveraged in multiple stages of the manufacturing process.

Determination of part/subsystem location and orientation during assembly

Piece part identification, along with location and orientation determination (and adjustment, if necessary), are common industrial vision operations, frequently employed by robotic assembly systems. Industry 4.0 "smart factories" provide the opportunity to further expand these kinds of functions to include various human-machine interaction scenarios. Consider, for example, a head-mounted mobile vision system, perhaps combined with an augmented reality information display, used when overseeing both human worker and autonomous robot operations.

Such a system, capable of decreasing failure rates and otherwise optimizing various manufacturing processes, would need to be small in size (ideally, around the size of a postage stamp for the camera module), light in weight, and low in power consumption. Fortunately, in the modern day embedded vision era, such a combination is increasingly possible, as well as affordable.

By Thomas Rademacher

Product Market Manager, Basler

MVTec's Perspectives on Visual Intelligence Opportunities in Industry 4.0

Industry 4.0, also known as the Industrial Internet of Things (IIoT), is one of the most significant trends in the history of industrial production. As a result of this digital transformation, processes along the entire value chain are becoming consistently networked and highly automated. All aspects of production, including all participants and technologies involved—people, machines, sensors, transfer systems, smart devices, and software solutions—seamlessly work together via internal company networks and/or the Internet. Another manifestation of this trend is the digital factory, also known as the smart factory. In this environment, automated production components interact independently and in close cooperation with humans via the IIoT.

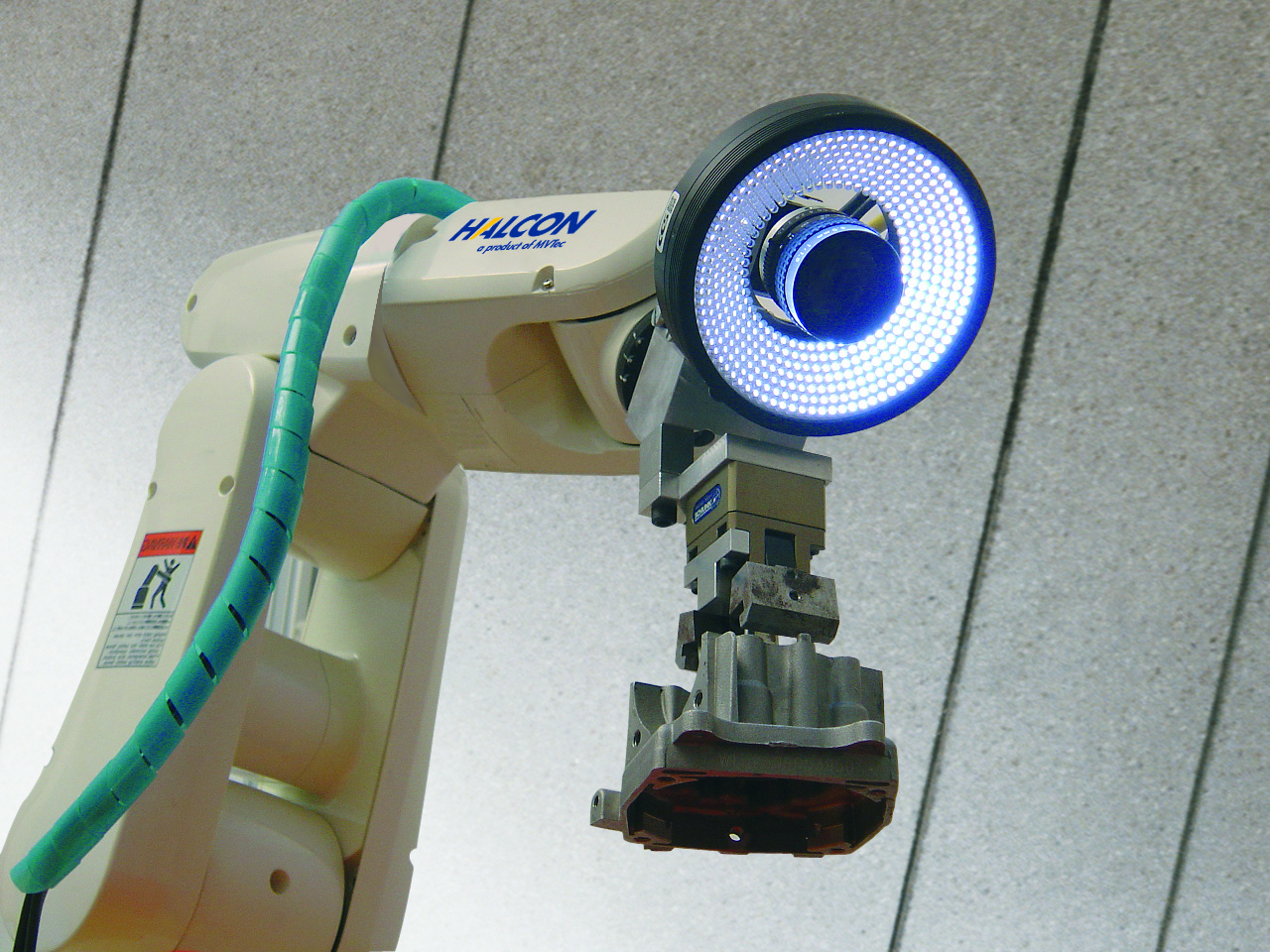

Machine vision, a technology that has paved the way for the IIoT, plays a key role (Figure 2). State-of-the-art image acquisition devices such as high-resolution cameras and sensors, in combination with high-performance machine vision software, process the recorded digital image information. A wide range of objects in the production environment is therefore detected and analyzed automatically based on their visual features – safely, precisely, and at high speeds. The interaction and communication between different system environments, such as machine vision and programmable logic controllers (PLCs), are continuously improved in automated production scenarios. And the systematic development of standards such as the Open Platform Communications Unified Architecture (OPC UA) is key to ongoing development in this area.

Figure 2. The "eye of production" monitors all Industry 4.0 processes (courtesy MVTec).

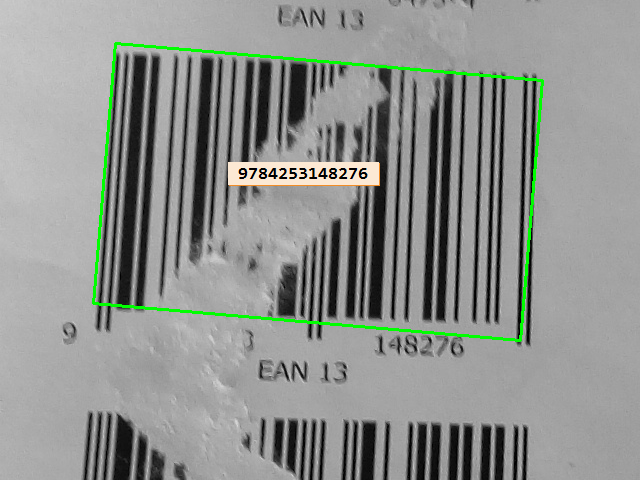

Object identification based on a wide range of features

As the "eye of production", machine vision continuously sees and monitors all workflows relevant to production, using this data to optimize numerous application scenarios within the IIoT. For example, machine vision can unambiguously identify work pieces based on surface features, such as shape, color, and texture. Bar codes and data codes also allow for unmistakable identification. The machine vision software can reliably read overly narrow or wide code bars, as well as identify products by imprinted character combinations via optical character recognition (OCR) with excellent results (Figure 3). The technology can even recognize blurry, distorted, or otherwise hard-to-read letters and numbers.

Figure 3. Robust machine vision software can even reliably read defect-ridden bar codes (courtesy MVTec).

For challenging identification tasks, deep learning methods such as convolutional neural networks (CNNs) are increasingly finding use. One key advantage of these technologies is that they are self-learning. This means that their algorithms analyze and evaluate large amounts of training data, independently recognize certain patterns in it, and subsequently use these trained patterns for identification (inference). This ability enables the software to significantly improve reading rates and accuracy. In networked IIoT processes, both codes and characters contain essential production information that finds use in automatically controlling all other process steps.

Reliable inspection of work pieces for defects

Quality assurance is another important machine vision application. Software fed by hardware-captured images reliably detects and identifies product damage and various other production faults. After being trained with only one or a few sample images, the software can immediately identify erroneous deviations by comparison. This robustness enables users to reliably locate many different defects using only a few parameters. The technology can also reveal tiny scratches, dents, hairline cracks, etc. that are not visible to the naked eye. Rejects can thus be removed automatically and in a timely fashion, before they proceed farther in the process chain.
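The idea of training on a few good samples and then flagging deviations by comparison can be illustrated with a deliberately simple per-pixel tolerance model. This is a sketch only, not the method used by any particular machine vision package: the function names, the `margin` parameter and the tiny grayscale images (lists of rows of 0-255 values) are all assumptions made for illustration.

```python
def train_reference(samples, margin=10):
    """Build per-pixel lower/upper bounds from a few defect-free images."""
    rows, cols = len(samples[0]), len(samples[0][0])
    lower = [[min(img[r][c] for img in samples) - margin for c in range(cols)]
             for r in range(rows)]
    upper = [[max(img[r][c] for img in samples) + margin for c in range(cols)]
             for r in range(rows)]
    return lower, upper


def find_defects(image, lower, upper):
    """Return (row, col) positions whose value falls outside the trained bounds."""
    return [(r, c)
            for r, row in enumerate(image)
            for c, value in enumerate(row)
            if not lower[r][c] <= value <= upper[r][c]]


# Two hypothetical defect-free samples and one part with a dark scratch
good = [
    [[100, 100, 100], [100, 100, 100]],
    [[102, 98, 101], [99, 100, 103]],
]
lower, upper = train_reference(good)
part = [[101, 99, 100], [100, 40, 102]]
print(find_defects(part, lower, upper))
```

Here the only tunable parameter is the margin, which mirrors the point that a handful of samples and parameters can be enough. A production system would also need image alignment and illumination compensation, which this sketch omits.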

Highly developed 3D-based machine vision methods also make it possible to detect material defects that extend below the surface of objects, such as embedded air bubbles and dust particles. Even the smallest unevenness can be detected using images captured from different perspectives, with corresponding varying shadow formation. This enhancement significantly improves the process of surface inspection. An especially important element in the context of the IIoT is that the number and type of defective parts are immediately reported to production planning and control systems, as well as job planning, thereby allowing for automatic triggering of reorders.

The increasingly widespread use of mobile devices in the industrial setting is also a notable characteristic of the IIoT. It is therefore important that a broad range, if not the entirety, of machine vision capabilities be accessible by devices such as smartphones, tablets, smart cameras, and smart sensors. Specifically, software support for Arm processors, common in such products, is critical. When this requirement is met, machine vision can become increasingly independent of legacy PCs. After all, the majority of these mobile devices also have very powerful components, such as high performance processors, an abundance of RAM and flash memory, and high-resolution image sensors.

Determining the location and orientation of objects during assembly

The use of robots, which are becoming more and more compact, mobile, flexible, and human-like over time, is typical in IIoT scenarios. So-called collaborative robots (cobots) often work closely with their human colleagues; they may, for example, transfer raw materials or work pieces to each other during assembly. Machine vision solutions ensure a high standard of safety and efficiency in such scenarios. Depth-sensing functionality integrated into the software can safely determine the position, direction of movement, and speed of objects in three-dimensional space, thus preventing collisions and other calamities.
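Once depth sensing yields 3D positions over time, direction of movement, speed and collision risk follow from basic kinematics. The sketch below assumes that positions are already available in meters in a common coordinate frame and that both objects move at constant velocity over the prediction horizon; the function names, sample coordinates and safety distance are hypothetical.

```python
import math


def velocity(p_prev, p_now, dt):
    """Average velocity (m/s) between two 3D position samples dt seconds apart."""
    return tuple((b - a) / dt for a, b in zip(p_prev, p_now))


def speed(v):
    """Magnitude of a velocity vector."""
    return math.sqrt(sum(c * c for c in v))


def closest_approach(pos_a, vel_a, pos_b, vel_b):
    """Time (s, never negative) and distance (m) at which two objects moving
    at constant velocity come closest to each other."""
    rel_p = [b - a for a, b in zip(pos_a, pos_b)]
    rel_v = [b - a for a, b in zip(vel_a, vel_b)]
    vv = sum(c * c for c in rel_v)
    t = 0.0 if vv == 0 else max(0.0, -sum(p * v for p, v in zip(rel_p, rel_v)) / vv)
    dist = math.sqrt(sum((p + v * t) ** 2 for p, v in zip(rel_p, rel_v)))
    return t, dist


# Hypothetical samples: a hand tracked 1 s apart, and a stationary gripper
hand_v = velocity((2.0, 0.5, 0.0), (1.0, 0.5, 0.0), 1.0)
t, d = closest_approach((0.0, 0.0, 0.0), (0.0, 0.0, 0.0), (1.0, 0.5, 0.0), hand_v)
SAFETY_DISTANCE_M = 0.6  # assumed threshold, not taken from any safety standard
if d < SAFETY_DISTANCE_M:
    print(f"slow down: closest approach {d:.2f} m in {t:.1f} s")
```

A real cobot safety function would be subject to the applicable safety standards and would use far more conservative models; the point here is only how position, direction and speed combine into a collision prediction.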

The handling of parts can likewise be consistently automated by the ability to accurately determine the parts’ locations. Robots can thus precisely position, reliably grip, process, place, and palletize a wide range of components. It is also possible to use components' sample images and CAD data to determine the exact 3D position, in order to detect curvature and edges.

Optimizing maintenance processes

Machine vision also performs valuable services in maintenance processes. With its assistance, smartphones and tablets can find use for machine maintenance tasks. For example, if a defective component requires replacement, the technician can simply point a camera-equipped mobile device toward the control cabinet and take a photo. The embedded machine vision software integrated into the smartphone will then determine the appropriate part to be substituted, processing the image data and making it available to the remainder of the maintenance process.

Machine vision can implement predictive maintenance, too. Cameras installed in various locations are capable of, for example, monitoring the machine park. If an irregularity is noticed – for example, if a thermal imaging camera detects an overheating machine – machine vision software can immediately sound an alarm. Technicians can then intervene before the machine experiences complete failure.
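The thermal camera example can be made predictive rather than merely reactive by extrapolating a temperature trend. The following sketch assumes thermal frames arrive as lists of rows of temperatures in degrees Celsius, takes the hottest pixel of each frame, and fits a least-squares line to estimate how long remains before an assumed limit is reached. The function names and the 80 degree limit are hypothetical.

```python
def peak_temperature(thermal_frame):
    """Hottest pixel in a frame given as rows of temperatures (deg C)."""
    return max(max(row) for row in thermal_frame)


def time_to_limit(times_s, peak_temps_c, limit_c):
    """Fit a least-squares line through (time, peak temperature) samples and
    return the seconds remaining after the last sample until limit_c is
    reached, or None if the temperature is not rising."""
    n = len(times_s)
    mean_t = sum(times_s) / n
    mean_y = sum(peak_temps_c) / n
    sxx = sum((t - mean_t) ** 2 for t in times_s)
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in zip(times_s, peak_temps_c))
    slope = sxy / sxx
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_t
    return max(0.0, (limit_c - intercept) / slope - times_s[-1])


# Hypothetical peak readings taken one minute apart
remaining = time_to_limit([0, 60, 120, 180], [60.0, 62.0, 64.0, 66.0], limit_c=80.0)
if remaining is not None and remaining < 600:
    print(f"schedule maintenance: limit reached in about {remaining:.0f} s")
```

A linear trend is the simplest possible model; it is used here only to show how image-derived measurements can trigger an intervention before failure.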

Best practice: Machine vision and robots work together in assembly

A case study application scenario demonstrates how automated handling is already robustly implemented. Bosch's APAS (Automatic Production Assistants) are capable of performing a wide range of automated assembly and inspection tasks. Individual process modules can be flexibly combined to implement various functions. Both the APAS assistant collaborative robot and the APAS inspector for visual inspections leverage MVTec's HALCON machine vision software (Figure 4). The photometric stereo 3D vision technology integrated into the APAS inspector, for example, analyzes the "blanks" handled by the APAS assistant for scratches and other damage.

Figure 4. Multiple Bosch APAS process modules leverage HALCON machine vision software (courtesy Bosch).
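Photometric stereo recovers surface orientation from several images of the same scene taken under different, known lighting directions. The sketch below shows the classic three-light formulation for a single pixel, assuming a Lambertian (matte) surface and no shadows; it is a textbook illustration rather than a description of how HALCON or the APAS inspector implements the technique, and the light directions and intensities are made-up values.

```python
import math


def det3(m):
    """Determinant of a 3x3 matrix given as three rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def surface_normal(lights, intensities):
    """Solve lights * g = intensities for g = albedo * normal (Cramer's rule).

    lights: three unit light-direction vectors, one per image.
    intensities: brightness of the same pixel in each of the three images.
    """
    d = det3(lights)
    g = []
    for col in range(3):
        m = [list(row) for row in lights]
        for r in range(3):
            m[r][col] = intensities[r]
        g.append(det3(m) / d)
    albedo = math.sqrt(sum(c * c for c in g))
    return albedo, tuple(c / albedo for c in g)


# Three assumed light directions and the brightness of one pixel under each
lights = [(0.0, 0.0, 1.0), (0.6, 0.0, 0.8), (0.0, 0.6, 0.8)]
albedo, normal = surface_normal(lights, [0.5, 0.4, 0.4])
print(albedo, normal)
```

On a flat, undamaged blank the recovered normals point uniformly toward the camera; a scratch or dent shows up as a local tilt in the normal even when its brightness contrast in any single image is small.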

Machine vision software also supports the precise positioning of individual process modules that are not physically connected. By means of optical markers and with the help of machine vision software, the cameras integrated into the APAS assistant's gripper arms precisely determine module coordinates. The robot thus always knows the exact position of individual modules in three-dimensional space and can coordinate them with its gripper arm. This approach results in optimum interaction between the robot and the other modules, even if the modules’ arrangement changes.
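Locating a module from its optical markers amounts to estimating the rigid transform that maps the markers' known layout onto their observed positions. The sketch below is simplified to a planar (2D) case with already-matched marker pairs, using the closed-form least-squares solution for rotation and translation. The function name and marker coordinates are hypothetical, and a real system would solve the full 3D problem from calibrated camera images.

```python
import math


def estimate_pose_2d(reference_pts, observed_pts):
    """Least-squares rotation angle (rad) and translation that map the
    reference marker layout onto the observed marker positions."""
    n = len(reference_pts)
    rcx = sum(p[0] for p in reference_pts) / n
    rcy = sum(p[1] for p in reference_pts) / n
    ocx = sum(p[0] for p in observed_pts) / n
    ocy = sum(p[1] for p in observed_pts) / n
    s_cos = s_sin = 0.0
    for (rx, ry), (ox, oy) in zip(reference_pts, observed_pts):
        ax, ay = rx - rcx, ry - rcy      # reference point relative to centroid
        bx, by = ox - ocx, oy - ocy      # observed point relative to centroid
        s_cos += ax * bx + ay * by       # accumulates |a|^2 * cos(theta)
        s_sin += ax * by - ay * bx       # accumulates |a|^2 * sin(theta)
    theta = math.atan2(s_sin, s_cos)
    c, s = math.cos(theta), math.sin(theta)
    tx = ocx - (c * rcx - s * rcy)
    ty = ocy - (s * rcx + c * rcy)
    return theta, (tx, ty)


# Marker layout on a module, and where the camera observes those markers
layout = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
seen = [(2.0, 3.0), (2.0, 4.0), (1.0, 3.0)]
theta, shift = estimate_pose_2d(layout, seen)
print(math.degrees(theta), shift)
```

Because the pose is re-estimated from the markers each time, the robot's coordinates stay valid after a module is moved, which is the behavior the paragraph above describes.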

By Johannes Hiltner

Product Manager HALCON, MVTec

Xilinx's Perspectives on Visual Intelligence Opportunities in Industry 4.0

Embedded vision-based systems are increasingly being deployed in so-called "Industry 4.0" applications. Here embedded vision enables both automation, using vision for positioning and guidance, and data collection and decision-making, by means of vision systems operating in the visible or wider electromagnetic spectrum. And both the IIoT and the "cloud" serve to interconnect these technologies.

Within Industry 4.0 applications, common use cases for embedded vision include those involving the identification and inspection of components, piece parts, sub-systems and systems. In many cases, the embedded vision system may also be responsible for feeding data back into the manufacturing process in order to adjust part positioning, for example, or to remove defective or otherwise incorrect parts from the manufacturing flow. These diverse functions create challenges for the processing system, which connects cameras with other subsystems (robotic, positioning, actuation, etc.):

- Multi-camera support: The processing system must be able to interface with multiple cameras, co-aligned for depth perception to provide a more complete view of parts in the manufacturing flow.

- Connectivity: It must be able to connect to both the operational and information networks, along with other standard industrial interfaces such as illumination systems, and actuation and positioning systems.

- Highly deterministic: It must be able to perform the required analysis and decision-making tasks without delaying the production line.

- Leverage machine learning: It must be able to implement machine learning techniques at the "edge" to achieve better quality of results and therefore optimize yield.

- SWaP-C: Due to the number of embedded vision systems deployed, developers must consider the total size, weight, power consumption and cost of the proposed solution.
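The multi-camera requirement in the list above mentions co-aligned cameras for depth perception. For an idealized pair of parallel, rectified cameras, depth follows directly from the disparity between the two views. The sketch below uses that standard relationship; the function name and the example focal length and baseline are assumptions, and real systems must first calibrate and rectify the cameras.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Depth (m) of a point seen by two parallel, rectified cameras:
    Z = f * B / d, with f in pixels, B in meters and d in pixels."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Assumed setup: 1000-pixel focal length, cameras 10 cm apart
for disparity in (100, 50, 25):
    depth = depth_from_disparity(1000, 0.1, disparity)
    print(f"disparity {disparity} px -> depth {depth:.1f} m")
```

Because depth is inversely proportional to disparity, depth resolution degrades with distance, which is one reason that camera spacing and sensor resolution both matter when specifying the processing system's camera interfaces.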

The processing system must also address the evolving demands brought by ever-increasing frame rates, resolution, and bits per pixel.

Support for multiple cameras and their associated connectivity requires a solution that enables any-to-any connectivity by being PHY-configurable for any necessary interface. Many Industry 4.0 applications utilize flexible programmable logic, which inherently supports any-to-any interfacing capabilities, to address these interface challenges. Image processing also commonly leverages an application processor that combines a CPU core with one or more heterogeneous co-processors (GPU, DSP, programmable logic, etc.), thereby enabling increased determinism, reduced latency, higher performance, lower power consumption and otherwise more efficient parallel processing within a single SoC.

To simplify the development and implementation of these processing systems, developers implementing Industry 4.0 applications can leverage open-source, high-level languages and frameworks such as OpenCV and OpenVX for image processing and Caffe for machine intelligence. These frameworks enable algorithm development time to be greatly reduced and also allow developers to focus on their value-added activities, thereby differentiating themselves from their competitors. Xilinx’s reVISION stack, which enables OpenCV and Caffe functions to be accelerated in programmable logic, is an example of a vendor-optimized development toolset.

Even if Industry 4.0 applications are processed at the edge, information regarding these processing decisions is commonly also communicated to the cloud for archive, further analysis, etc. One example application involves the centralized collation and analysis of yield information from several manufacturing lines located across the world, in order to determine and "flag" quality issues. While this collective analysis does not necessarily need to be performed in real time, it still needs to occur quickly; should a quality issue arise, a swift response is necessary in order to minimize yield loss and other impacts. Archiving the data is also valuable for assessing long-term trends.
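The centralized yield analysis described above can be reduced, in its simplest form, to comparing each line's yield against a fleet-wide baseline. The following sketch assumes each edge system reports counts of passed and inspected parts. The line names, the counts and the 2 percent tolerance are hypothetical, and a deployed system would likely use more robust statistics or a trained model.

```python
from statistics import median


def line_yield(passed, inspected):
    """Fraction of inspected parts that passed."""
    return passed / inspected


def flag_lines(reports, tolerance=0.02):
    """Return the names of lines whose yield falls more than `tolerance`
    below the median yield of all reporting lines.

    reports: {line_name: (passed, inspected)}
    """
    yields = {name: line_yield(*counts) for name, counts in reports.items()}
    baseline = median(yields.values())
    return sorted(name for name, y in yields.items() if y < baseline - tolerance)


# Hypothetical reports uploaded by three manufacturing lines
reports = {
    "line-A": (980, 1000),
    "line-B": (975, 1000),
    "line-C": (930, 1000),
}
print(flag_lines(reports))
```

Using the median as the baseline keeps a single badly performing line from dragging the reference down, so the comparison stays meaningful even when only a few lines report.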

Such situations can potentially employ deep machine inference within the "cloud" server, guided by prior training. FPGA-based inference accelerators are beneficial here, to minimize analysis and response latencies. Xilinx's Reconfigurable Acceleration Stack (RAS) is one example of a vendor-optimized toolset that simplifies "cloud" application development, leveraging industry-standard frameworks and libraries such as Caffe and SQL.

By Giles Peckham

Regional Marketing Director, Xilinx

and Adam Taylor

Embedded Systems Consultant, Xilinx

Conclusion

The rapidly expanding use of vision technology in industrial automation is part of a much larger trend. From consumer electronics to automotive safety systems, vision technology is enabling a wide range of products that are more intelligent and responsive than before, and thus more valuable to users. The term “embedded vision” refers to this growing practical use of visual intelligence in embedded systems, mobile devices, special-purpose PCs, and the cloud, with industrial automation being one showcase application.

Embedded vision can add valuable capabilities to existing products, such as the vision-enhanced industrial automation systems discussed in this article. It can provide significant new markets for hardware, software and semiconductor manufacturers. And a worldwide industry alliance is available to help product creators optimally implement vision processing in their hardware and software designs.

By Brian Dipert

Editor-in-Chief, Embedded Vision Alliance

Sidebar: Additional Developer Assistance

The Embedded Vision Alliance, a worldwide organization of technology developers and providers, is working to empower product creators to transform the potential of vision processing into reality. Basler, MVTec, and Xilinx, the co-authors of this article, are members of the Embedded Vision Alliance. The Embedded Vision Alliance's mission is to provide product creators with practical education, information and insights to help them incorporate vision capabilities into new and existing products. To execute this mission, the Embedded Vision Alliance maintains a website providing tutorial articles, videos, code downloads and a discussion forum staffed by technology experts. Registered website users can also receive the Embedded Vision Alliance’s twice-monthly email newsletter, Embedded Vision Insights, among other benefits.

The Embedded Vision Alliance also offers a free online training facility for vision-based product creators: the Embedded Vision Academy. This area of the Embedded Vision Alliance website provides in-depth technical training and other resources to help product creators integrate visual intelligence into next-generation software and systems. Course material in the Embedded Vision Academy spans a wide range of vision-related subjects, from basic vision algorithms to image pre-processing, image sensor interfaces, and software development techniques and tools such as OpenCL, OpenVX and OpenCV, along with Caffe, TensorFlow and other deep learning frameworks. Access is free to all through a simple registration process.

The Embedded Vision Alliance and its member companies periodically deliver webinars on a variety of technical topics. Access to on-demand archive webinars, along with information about upcoming live webinars, is available on the Alliance website. Also, the Embedded Vision Alliance has begun offering "Deep Learning for Computer Vision with TensorFlow," a full-day technical training class planned for a variety of both U.S. and international locations. See the Alliance website for additional information and online registration.

The Embedded Vision Alliance’s annual technical conference and trade show, the Embedded Vision Summit, is intended for product creators interested in incorporating visual intelligence into electronic systems and software. The Embedded Vision Summit provides how-to presentations, inspiring keynote talks, demonstrations, and opportunities to interact with technical experts from Embedded Vision Alliance member companies. The Embedded Vision Summit is intended to inspire attendees' imaginations about the potential applications for practical computer vision technology through exciting presentations and demonstrations, to offer practical know-how for attendees to help them incorporate vision capabilities into their hardware and software products, and to provide opportunities for attendees to meet and talk with leading vision technology companies and learn about their offerings.

The most recent Embedded Vision Summit took place in Santa Clara, California on May 1-3, 2017; a slide set along with both demonstration and presentation videos from the event are now in the process of being published on the Alliance website. The next Embedded Vision Summit is scheduled for May 22-24, 2018, again in Santa Clara, California; mark your calendars and plan to attend.