
Dear Colleague,

Are you looking to build on your latest product's success? Getting ready to introduce a game-changing new product? Enter to win a Vision Product of the Year Award! Embedded Vision Alliance Member companies can apply today for this opportunity to garner industry recognition for products judged best at enabling visual AI and the rapid deployment of computer vision systems and applications.

From a unique award logo you can use in your marketing materials to multiple opportunities for media exposure, winning a Vision Product of the Year Award will help you stand out in the increasingly complex embedded vision market. All this and a cool-looking trophy, too! As a previous winner told us, "This award generated so much interest in what we’re doing that we’ll probably invent another product so we can try to win again next year!"

The deadline for entries is March 1; for detailed instructions and the entry form, please see the Vision Product of the Year Awards page on the Alliance website. Good luck! (If you're interested in having your company become a Member of the Embedded Vision Alliance, see here for more information.)

The Embedded Vision Summit attracts a global audience of over one thousand product creators, entrepreneurs and business decision-makers who are developing and using computer vision technology. The event has experienced exciting growth over the last few years, with 97% of 2018 Summit attendees reporting that they’d recommend it to a colleague. The next Summit will take place May 20-23, 2019, in Santa Clara, California, and online registration is now available. The Summit is the place to learn about the latest applications, techniques, technologies, and opportunities in visual AI and deep learning. In 2019, the event will feature new, deeper and more technical sessions, with more than 90 expert presenters in 4 conference tracks and 100+ demonstrations in the Technology Showcase. Register today using promotion code SUPEREBNL19 to save 25% with our Super Early Bird Discount rate, which ends this Friday, February 22.

Brian Dipert

Editor-In-Chief, Embedded Vision Alliance


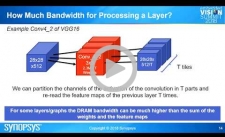

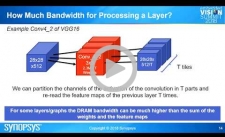

New Deep Learning Techniques for Embedded Systems

In the past few years, the application domain of deep learning has rapidly expanded. Constant innovation has improved the accuracy and speed of training and inference. Many techniques have been proposed to represent and learn more knowledge with smaller, more compact networks. Mapping these new techniques onto low-power embedded platforms is challenging because they are often very demanding in terms of compute, bandwidth and accuracy. In this presentation, Tom Michiels, System Architect for Embedded Vision at Synopsys, discusses the latest state-of-the-art deep learning techniques and their implications for the requirements of embedded platforms. Also see the technical article "Implementing Vision with Deep Learning in Resource-constrained Designs," co-authored by the Alliance and Member companies Au-Zone Technologies, BDTI and Synopsys, for more on this topic.

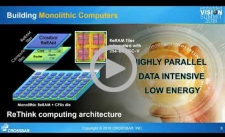

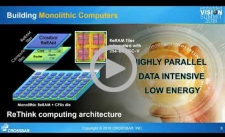

New Memory-centric Architecture Needed for AI

AI will not replace the need for humans any time soon, but it will have a profound impact on everyday lives, transforming industries from transportation to education, medical to entertainment. AI is about computing on data, and the more data available, the smarter the AI algorithms will be. However, the current bottleneck between data storage and computing cores is limiting the innovation of future AI applications. In this presentation, Sylvain Dubois, Vice President of Strategic Marketing & Business Development at Crossbar, discusses how non-volatile memory technologies such as ReRAM can be directly integrated on-chip with processing logic, enabling brand new memory-centric computing architectures. The superior characteristics of ReRAM over legacy non-volatile memory technologies are helping to address the performance and energy challenges posed by AI algorithms. High-performance computing applications, such as AI, require high-bandwidth, low-latency data access across processors, storage and I/O. ReRAM memory technologies provide significant improvements by reducing the performance gap between storage and computing.


How Simulation Accelerates Development of Self-Driving Technology

Virtual testing, as discussed by László Kishonti, founder and CEO of AImotive, in this presentation, is the only solution that scales to address the billions of miles of testing required to make autonomous vehicles robust. However, integrating simulation technology into the development of a self-driving system requires consideration of several factors. One of these is the difference between real-time and fixed time step simulation. Both real-time simulation, which demands high-performance computing resources, and fixed time step simulation, which can be executed more economically, are needed, and the two serve different purposes. Specialized frameworks are also needed to support the work of development teams and maximize the utilization of available hardware. AI researchers and developers, according to Kishonti, should be able to request server-based tests from their workstations. These tests run selected versions of the simulator and self-driving solution and provide quick feedback about the effects of changes to the solution or to the simulator. Using established test scenarios and different software versions allows the simulator and self-driving solution to be used to validate each other. This prevents wasting resources on changes that degrade or destabilize either the simulator or the self-driving solution, accelerating the development of solutions while also making them safer. Also listen to Kishonti's insights as a Vision Entrepreneurs' Panel member at the 2018 Embedded Vision Summit.

Cameras and Cockpit Convergence: Trends in Automotive Sensing

In this presentation, Roger Lanctot, Director of Automotive Connected Mobility at Strategy Analytics, shares his unique perspectives and data on fundamental trends in ADAS and autonomous vehicles.


Embedded World: February 26-28, 2019, Nuremberg, Germany

Vision Systems Design Webinar – Embedded Vision: The Four Key Trends Driving the Proliferation of Visual Perception: March 27, 2019, 11 am ET

Embedded Vision Summit: May 20-23, 2019, Santa Clara, California

