
Dear Colleague,

Joining MIT's Ramesh Raskar as a keynote presenter at the upcoming Embedded Vision Summit is Google's Pete Warden, Staff Research Engineer and lead developer on the company's TensorFlow Lite machine learning framework for mobile and embedded applications. Warden, a popular presenter at past Summits, is a leading entrepreneur in visual AI, software and big data, with a track record of building innovative image processing and machine learning technology at scale. In his keynote presentation, "The Future of Computer Vision and Machine Learning is Tiny," he will share his unique perspective on the state of the art and future of low-power, low-cost machine learning and highlight some of the most advanced examples of current machine learning technology and applications.

Here’s why I’m so excited about Warden's keynote:

- There are 150 billion embedded processors in the world, roughly twenty for every person on Earth, and this number grows by 20% each year.

- Imagine a world in which these hundreds of billions of devices not only collect data, but transform that data into actionable insights — insights that in turn can improve the lives of billions of people.

- To do this, we need machine learning, which has radically transformed our ability to extract meaningful information from noisy data. But the conventional wisdom is that machine learning demands vast amounts of processing performance and memory.

What if we could change that? What would it take to do that, and what would that world look like? If computer vision is in your product roadmap, your investment plans or your startup dreams, Warden's talk is a don’t-miss session. I hope you will join me to hear it – and to learn from more than 90 other speakers, over two solid days of presentations and demos. (You can see the current line-up of talks here, with more being added every day.) I look forward to seeing you at the Summit!

Rise of the Robots 2019 is a one-day showcase of global robotics, taking place May 30, 2019 at SRI International in Menlo Park, California. You'll hear from Dr. Terry Fong, Chief Roboticist at NASA, meet humanoid robots and learn about the latest investments and startups. Join the invitation list at www.svrobo.org/the-rise-of-the-robots-2019.

Brian Dipert

Editor-In-Chief, Embedded Vision Alliance


The Roomba 980: Computer Vision Meets Consumer Robotics

In 2015, iRobot launched the Roomba 980, introducing intelligent visual navigation to its successful line of vacuum cleaning robots. The availability of affordable electro-mechanical components, powerful embedded microprocessors and efficient algorithms created the opportunity for a successful deployment of a vision-enabled consumer robot. In this talk, Mario Munich, Senior Vice President of Technology at iRobot, discusses some of the challenges and benefits of introducing a robot that navigates by vision, and explores what made the timing right for bringing vision to Roomba. Drawing on the lessons learned during development of the Roomba 980, he elaborates on the potential impact of computer vision on future robotics applications.

Understanding Real-World Imaging Challenges for ADAS and Autonomous Vision Systems – IEEE P2020

ADAS and autonomous driving systems rely on sophisticated sensor, image processing and neural-network-based perception technologies. These technologies have produced effective driver assistance capabilities and are paving the way to full autonomy, as shown at demonstration events and in controlled regions of operation. But current systems are significantly challenged by real-world operating conditions, such as darkness, poor weather and lens issues. In this talk, Felix Heide, CTO and co-founder of Algolux, examines the difficult use cases that hamper effective ADAS for drivers and cause autonomous vision systems to fail. He explores a range of challenging scenarios and explains the key reasons for vision system failure in these situations. He also introduces industry initiatives that are tackling these challenges, such as IEEE P2020.


Bad Data, Bad Network, or: How to Create the Right Dataset for Your Application

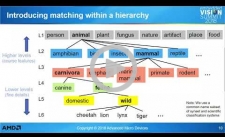

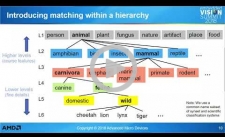

When training deep neural networks, having the right training data is key. In this talk, Mike Schmit, Director of Software Engineering for computer vision and machine learning at AMD, explores what makes a successful training dataset, common pitfalls and how to set realistic expectations. He illustrates these ideas using several object classification models that have won the annual ImageNet challenge. By analyzing accurate and inaccurate classification examples (some humorous and some amazingly accurate), you will gain intuition on the workings of neural networks. Schmit's results are based on his personal dataset of over 10,000 hand-labeled images from around the world.

Rethinking Deep Learning: Neural Compute Stick

In July 2017, Intel released the Movidius Neural Compute Stick (NCS), a first-of-its-kind USB-based device for rapid prototyping and development of inference applications at the edge. NCS is powered by the same low-power, high-performance Movidius Vision Processing Unit (VPU) that can be found in millions of smart security cameras, gesture-controlled drones, industrial machine vision devices and more. Delivering significant acceleration over previous, compute-constrained platforms, the Neural Compute Stick is enabling all sorts of companies to embrace the revolution brought about by deep learning. In this presentation, Ashish Pai, Senior Director in the Neural Compute Program at Intel, discusses the development of this unique product and the adoption of this completely new tool. He also shares stories of companies, ranging from established multinational tech companies to ambitious startups, that have gone from prototyping on the Neural Compute Stick to developing full-fledged products utilizing embedded neural networks.
