Manisha Agrawal, Product Marketing Engineer at Texas Instruments, presents the “Making Edge AI Inference Programming Easier and Flexible” tutorial at the September 2020 Embedded Vision Summit.

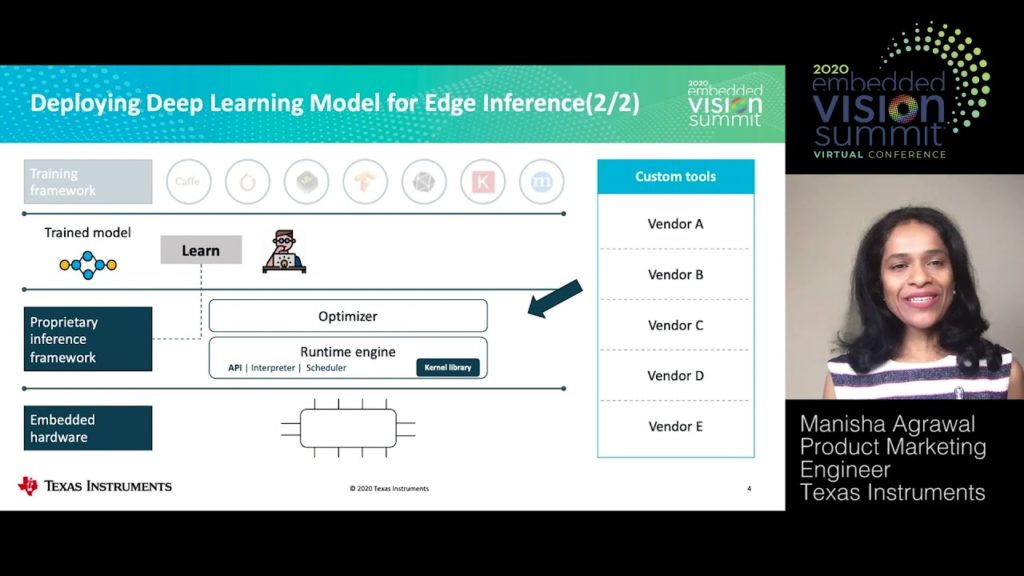

Deploying an AI model at the edge doesn’t have to be challenging, but it often is. Each embedded processor vendor offers its own set of software tools for deploying models. Learning these proprietary tools and optimizing the edge implementation to reach your performance targets takes time and investment. While embedded vendors continue to provide proprietary deployment tools, the open source community is working to standardize the model deployment process and make it hardware agnostic.

Texas Instruments has adopted open source software frameworks to make model deployment easier and more flexible. In this talk, you will learn about the struggles developers face when deploying models for inference on embedded processors and how TI addresses these critical software development challenges. You will also discover how TI enables faster time-to-market using a flexible open source development approach without the need to compromise performance, accuracy or power requirements.

A PDF of the slides is also available.