Alexander Kozlov, Deep Learning R&D Engineer at Intel, presents the “Recent Advances in Post-training Quantization” tutorial at the September 2020 Embedded Vision Summit.

The use of low-precision arithmetic (8-bit and smaller data types) is key for the deployment of deep neural network inference with high performance, low cost and low power consumption. Shifting to low-precision arithmetic requires a model quantization step that can be performed at model training time (quantization-aware training) or after training (post-training quantization). Post-training quantization is an easy way to quantize already trained models that provides good accuracy/performance trade-off.

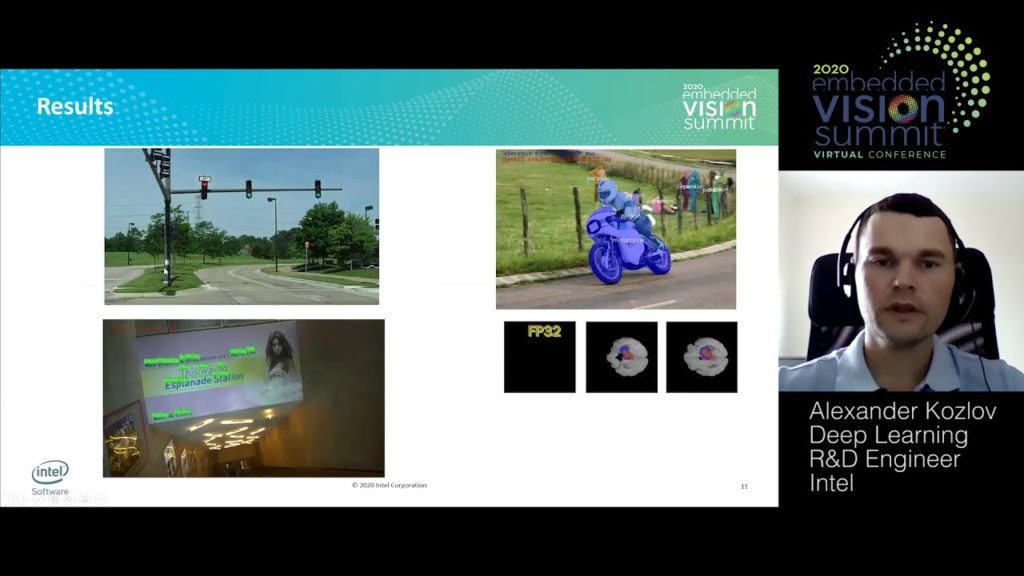

In this talk, Kozlov reviews recent advances in post-training quantization methods and algorithms that help to reduce quantization error. He also shows the performance speed-up that can be achieved for various models when using 8-bit quantization.

See here for a PDF of the slides.