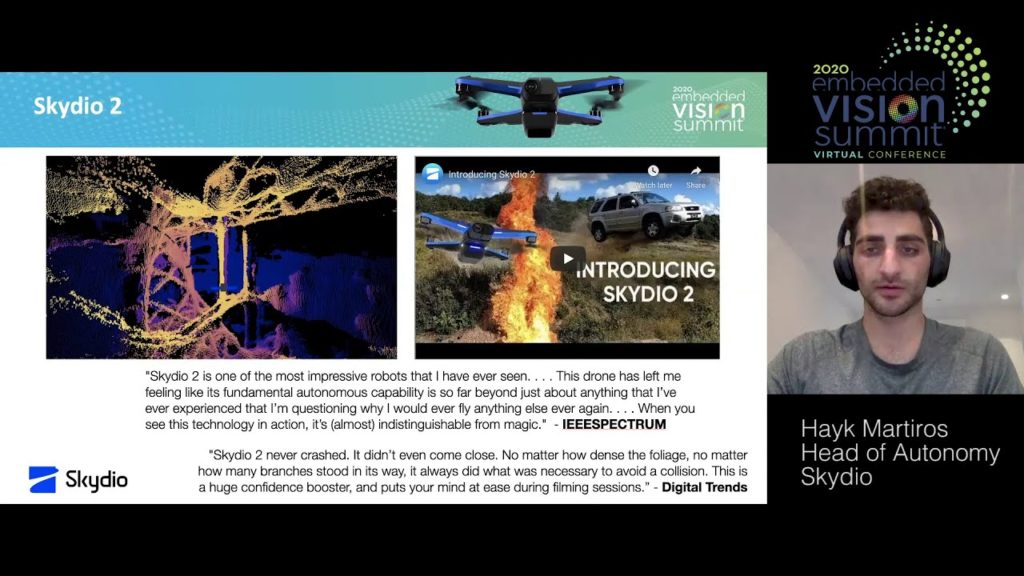

Hayk Martiros, Head of Autonomy at Skydio, presents the “Tackling Extreme Visual Conditions for Autonomous UAVs In the Wild” tutorial at the September 2020 Embedded Vision Summit.

Skydio ships autonomous robots that are flown at scale in complex, unknown environments every day to capture incredible video, automate dangerous inspections and save the lives of first responders. They must make decisions at high speed using just their onboard cameras and algorithms running on low-cost hardware. The company has invested five years of R&D into handling extreme visual scenarios that are not typically considered by academia or encountered by cars, ground-based robots or AR applications.

Drones are commonly used in scenes with few or no semantic priors on the environment and must deftly navigate in the presence of thin objects, extreme lighting, camera artifacts, motion blur, textureless surfaces, vibrations, dirt, smudges and fog. Because photometric signals are not consistent under these conditions, the challenges are daunting for both classical vision approaches and unsupervised learning, and there is no ground truth for direct supervision. Martiros takes a detailed look at these issues and how his company tackled them to push autonomous flight into production.

A PDF of the slides is also available.