This blog post was originally published by NXP Semiconductors. It is reprinted here with the permission of NXP Semiconductors.

Edge intelligence is one of the most disruptive innovations since the advent of the Internet of Things (IoT). While the IoT gave rise to billions of smart, connected devices transmitting countless terabytes of sensor data for AI-based cloud computing, another revolution was underway: machine learning (ML) on edge devices. As more and more intelligence migrates to the network edge, NXP has embraced this trend by delivering cost, performance and power-optimized processing solutions to propel ML technology across multiple markets and applications, providing end users with the benefits of enhanced security, greater privacy and lower latency.

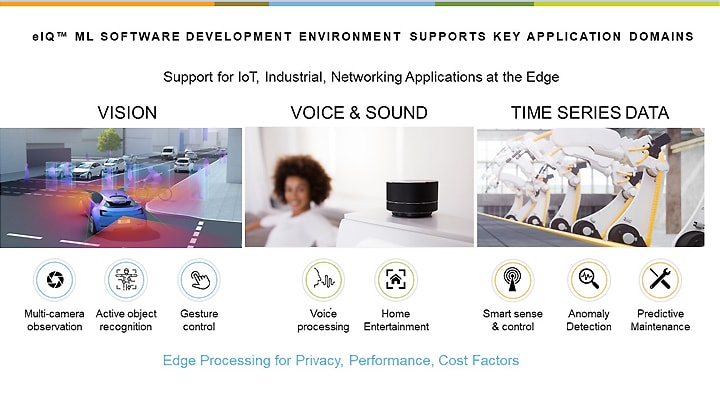

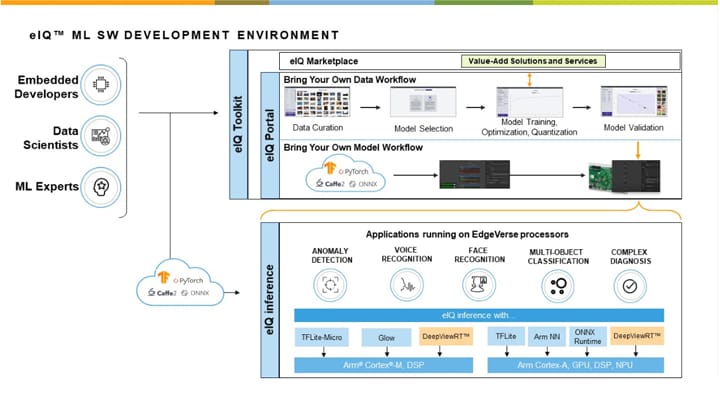

Developing ML, deep learning and neural network applications has traditionally been the domain of data scientists and AI experts. But this is changing as more ML tools and technologies become available to abstract away some of the complexity of developing machine learning applications. A case in point is NXP’s eIQ (“edge intelligence”) ML development environment. eIQ provides a comprehensive set of workflow tools, inference engines, neural network (NN) compilers, optimized libraries and technologies that ease and accelerate ML development for users of all skill levels, from embedded developers embarking on their first deep learning projects to experts focused on advanced object detection, classification, anomaly detection or voice recognition solutions.

Introduced in 2018, eIQ ML software has evolved to support system-level application and ML algorithm enablement across NXP’s i.MX portfolio, from low-power i.MX RT crossover microcontrollers (MCUs) to multicore i.MX 8 and i.MX 8M applications processors based on Arm® Cortex®-M and Cortex®-A cores.

Today’s Big Update

To help ML developers become even more productive and proficient on NXP’s i.MX processing platforms, we’ve significantly expanded our eIQ software environment to include the new eIQ Toolkit workflow tools, the GUI-based eIQ Portal development environment and the DeepViewRT™ inference engine optimized for i.MX and i.MX RT devices.

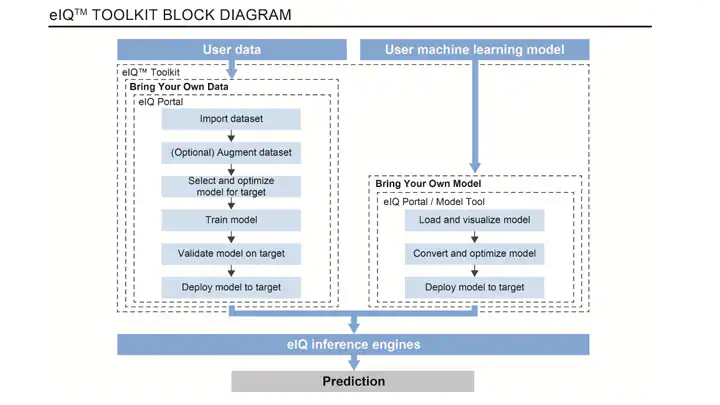

Figure 1. High-level overview of eIQ Toolkit and eIQ Portal features and workflow.

Let’s take a closer look at how these powerful new additions to the eIQ software environment can help streamline ML development, enhance productivity, and give developers more options and greater flexibility.

eIQ Toolkit: Enabling “ML for Everyone”

Given the underlying complexity of machine learning, neural network and deep learning applications and the varying needs of ML developers, a simple “one-size-fits-all” tool isn’t the answer. A better approach is to provide a comprehensive and flexible toolkit that scales to the skill and experience levels of ML developers. To this end, we’ve added the powerful yet easy-to-use eIQ Toolkit to the eIQ ML development environment, enabling developers to import datasets and models and to train, quantize, validate and deploy neural network models and ML workloads across NXP’s i.MX 8M family of applications processors and i.MX RT crossover MCU portfolio. Whether you are an embedded developer starting out on your first ML project, a proficient data scientist or an AI expert, you’ll find toolkit capabilities that match your skill level and streamline your ML project.

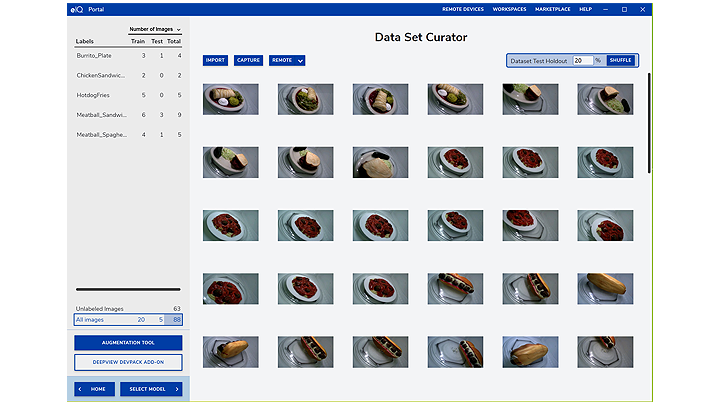

Figure 2. eIQ Portal provides a dataset curator to help you annotate and organize all your training data.

The eIQ Toolkit provides straightforward workflows and ML application examples. It offers an intuitive, GUI-based development option with the eIQ Portal, or you can use command-line host tools if you prefer. If you want to leverage off-the-shelf development solutions or need professional services and support from NXP or one of our trusted partners, the toolkit provides easy access to a growing list of options in our eIQ Marketplace from companies like Au-Zone Technologies.

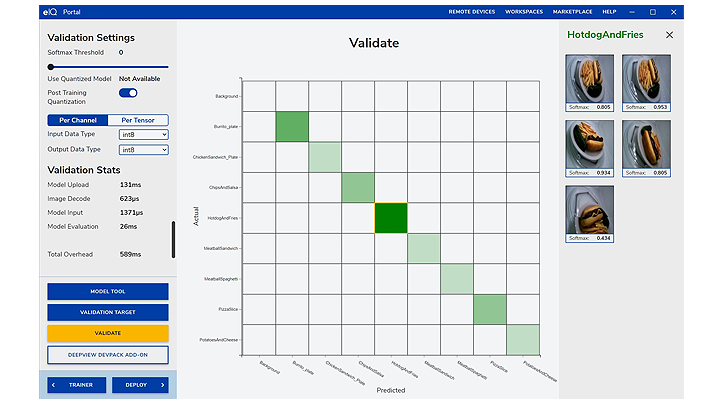

Figure 3. eIQ Portal provides a convenient way to validate models and measure their accuracy.

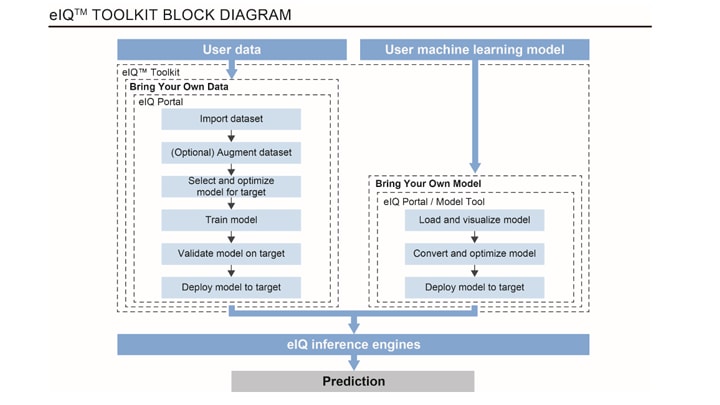

Using the eIQ Portal, you can easily create, optimize, debug, convert and export ML models, as well as import datasets and models from the TensorFlow, ONNX and PyTorch ML frameworks. You can train a model on your own data with the “bring your own data” (BYOD) flow, select from a database of pre-trained models, or import a pre-trained model, such as the advanced detection models from Au-Zone Technologies, with the “bring your own model” (BYOM) flow. With the BYOM process, you can train a model using public or private cloud-based tools and then transfer it into the eIQ Toolkit to run on the appropriate silicon-optimized inference engine.

Figure 4. eIQ Portal provides a flexible approach to BYOM and BYOD.

On-target, graph-level profiling capabilities provide developers with runtime insights to fine-tune and optimize system parameters, runtime performance, memory usage and neural network architectures for execution on i.MX devices.

Revving up NXP’s Latest eIQ Inference Engine

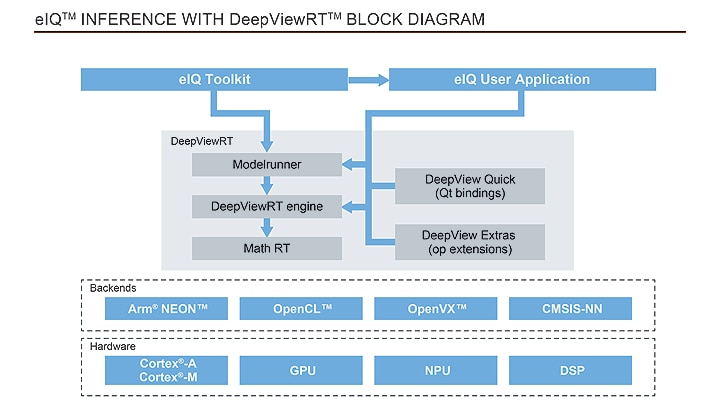

At the heart of a machine learning development project is the inference engine: the runtime component of ML applications. In addition to supporting inference with a variety of open-source, community-based inference engines optimized for i.MX applications processors and i.MX RT MCUs, such as Glow, ONNX Runtime and TensorFlow Lite, we have added the DeepViewRT inference engine to our eIQ ML software development environment.

Developed in collaboration with our partner Au-Zone Technologies, DeepViewRT is a proprietary inference engine that provides a stable, longer-term, vendor-maintained solution complementing the open, community-based inference engines.

Figure 5. DeepViewRT provides a stable, production-ready and flexible inference engine for ML applications.

The DeepViewRT inference engine is available as middleware in NXP’s MCUXpresso SDK and in the Yocto BSP release for Linux™ OS-based development.

More to Love About eIQ

The eIQ ML development environment, with all essential baseline enablement including the new eIQ Toolkit, eIQ Portal and eIQ inference with DeepViewRT, is provided with no license fees.

Hone Your Skills at the NXP ML/AI Training Academy

NXP’s ML/AI Training Academy provides self-paced learning modules on various topics related to ML development and best practices in using eIQ tools with NXP’s i.MX and i.MX RT devices. The ML/AI Training Academy is open to all NXP customers and features an expanding collection of training modules to help you get started with your ML application development. Learn more at www.nxp.com/ml training.

Get Started With eIQ ML Software and Tools Today

The eIQ Toolkit, including the eIQ Portal, is available for download now with a single click from the eIQ Toolkit page.

eIQ ML software, including the DeepViewRT inference engine for i.MX applications processors, is supported on the current Yocto Linux release. eIQ ML software for i.MX RT crossover MCUs is fully integrated into NXP’s MCUXpresso SDK release.

Learn more at www.nxp.com/ai and www.nxp.com/eiq.

Join the eIQ Community: eIQ Machine Learning Software.

Learn more about DeepViewRT and Au-Zone’s ML development tools here.

Ali Osman Örs

Director, AI ML Strategy and Technologies, NXP Semiconductors