This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm.

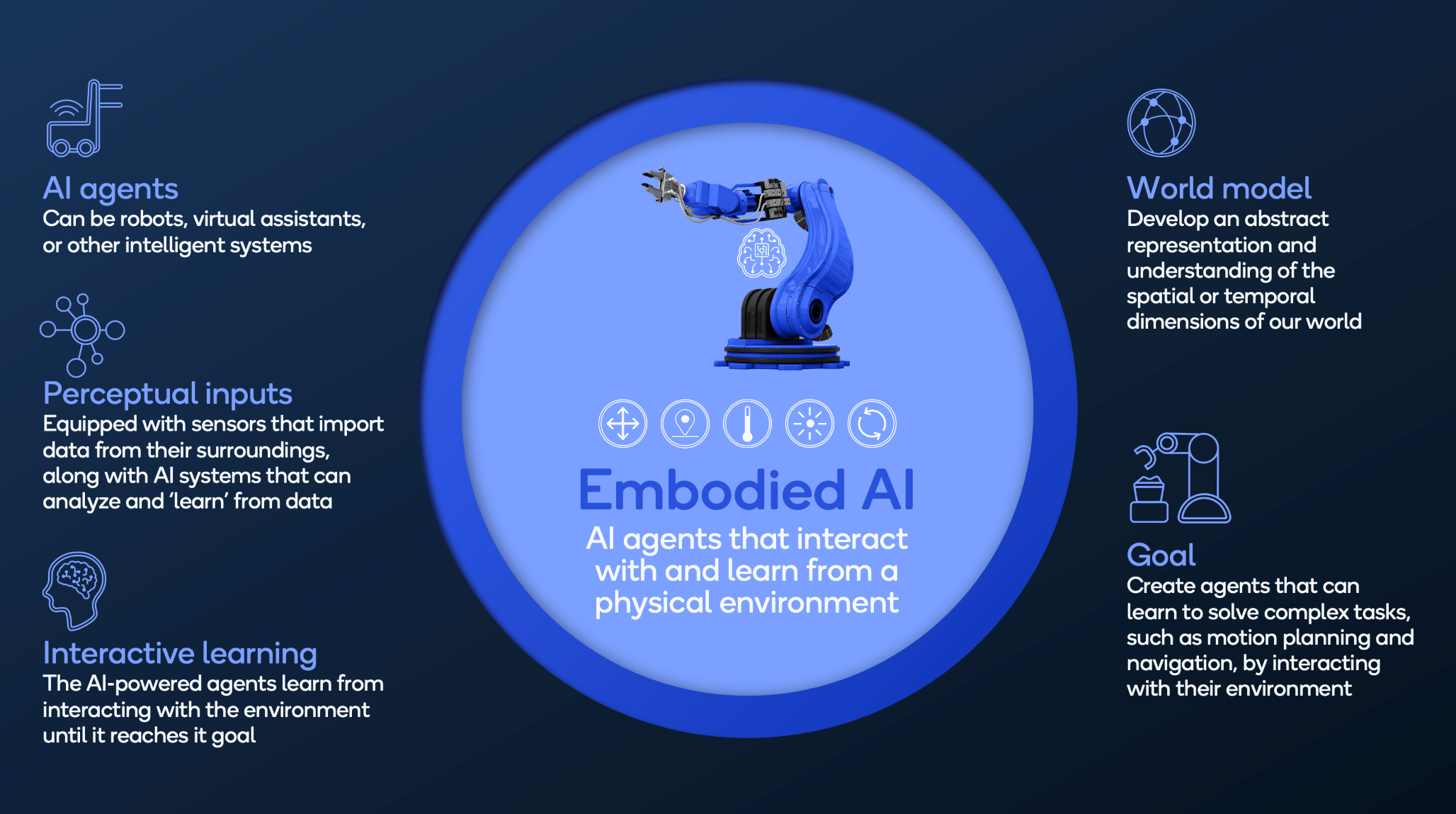

While robots have proliferated in recent years in smart cities, factories and homes, we are mostly interacting with robots controlled by classical handcrafted algorithms. These robots have a narrow goal and learn little from their surroundings. In contrast, artificial intelligence (AI) agents, such as robots, virtual assistants or other intelligent systems, that can interact with and learn from a physical environment are referred to as embodied AI. These agents are equipped with sensors (cameras, pressure sensors, accelerometers, etc.) that capture data from their surroundings, along with AI systems that can analyze and “learn” from the acquired data.

AI-powered robots learn through interaction with a physical environment.

Through trial and error, the AI agent develops a “world view”: an abstract representation and understanding of the spatial or temporal dimensions of our world. It learns to reach its goal, whether the goal is to walk, stack boxes or something else entirely.

Embodied AI can transform industries and improve lives. The opportunities are endless.

Consider enhancing manufacturing processes, making entertainment and games more interactive and immersive, improving medical triage, surgery and elderly assistance, and making smart warehouses much more efficient and automated. The need for embodied AI is certainly there.

The aging population and labor shortages are already felt, especially in the developed world [1]. In recent years, robot density in the manufacturing industry has already increased significantly as a result. In the U.S., the density of robots per 10,000 employees grew to 255, a 45% increase from 2015 [2].

AI-powered robotics has immense potential for improving society.

What is needed for embodied AI to proliferate

At Qualcomm AI Research, we are working on applications of generative modelling to embodied AI and robotics, in order to go beyond classical robotics and enable capabilities such as:

- Open vocabulary scene understanding.

- Natural language interface.

- Reasoning and common sense via large language models (LLMs).

- Closed-loop control, dynamic actions via LLMs or diffusion models.

- Vision-language-action models.

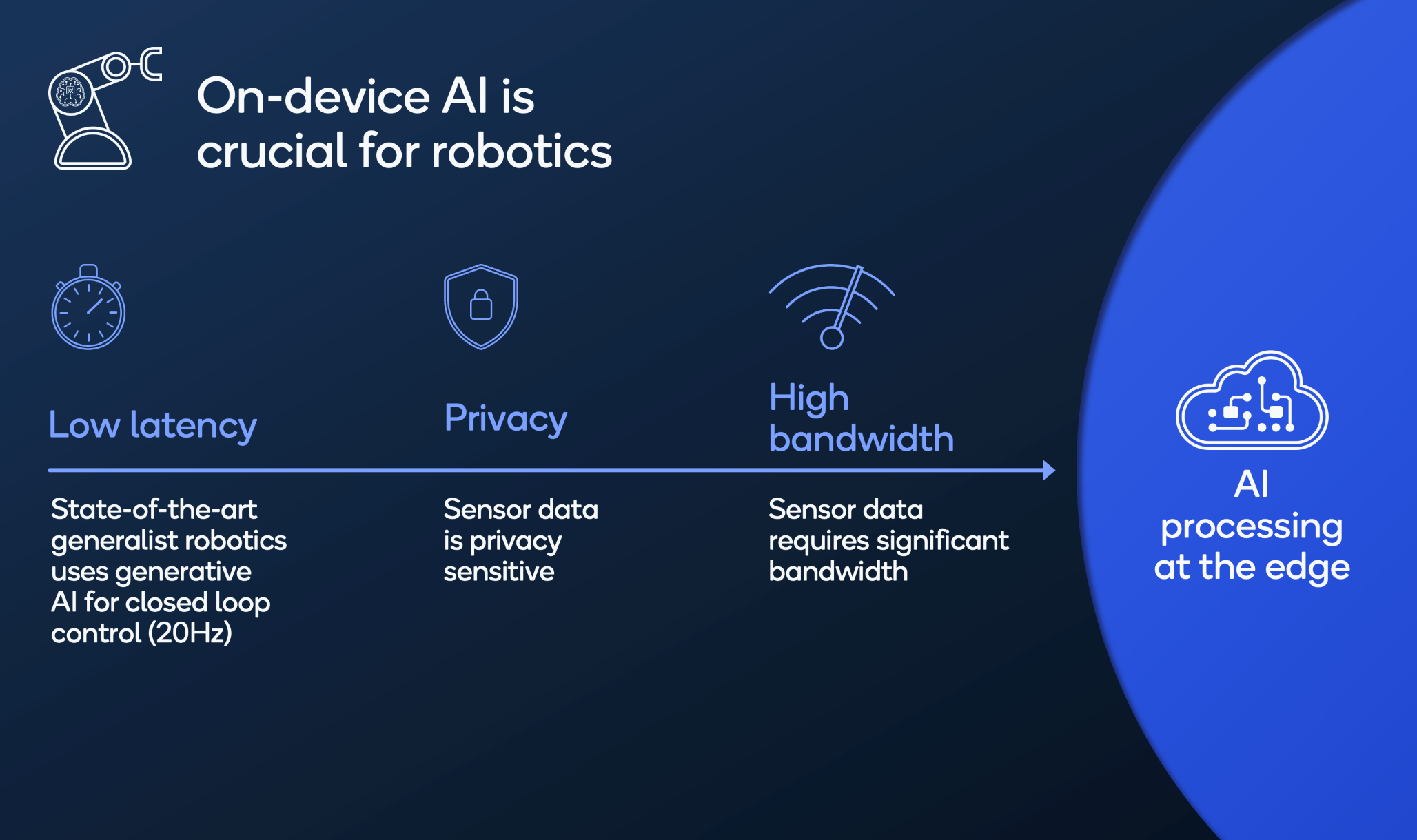

Robotics has a need for data efficiency, low latency, enhanced privacy and sensor processing. All these requirements can be achieved through on-device AI, which is why Qualcomm Technologies has been developing platforms to support the creation of more productive, autonomous and advanced robots, such as the Qualcomm Robotics Platforms. These platforms include the Qualcomm AI Engine, providing capabilities that can unleash innovative applications and possibilities.

AI processing at the edge meets the needs of embodied AI.

A data-efficient robot motion planning architecture

While AI processing at the edge constitutes a good basis for building embodied AI applications, there is a critical issue that remains to be solved. In contrast to internet AI, which learns from static datasets (e.g., ImageNet, which contains 2D images) to solve various tasks, embodied AI learns by interacting with a physical environment. Such data is not readily available on the internet and is expensive to acquire. The Qualcomm AI Research team has developed a novel data-efficient architecture to improve robots’ perception of their environment. We call this architecture “Geometric Algebra Transformers” (GATr). Sign up for my webinar to learn more.

GATr accounts for the geometric structure of the physical environment through geometric algebra representations and equivariance, while retaining the scalability and expressivity of transformers. Experiments show strong performance, even with little data. At its core, GATr is a general-purpose architecture for geometric data. It has three components: geometric algebra representations, equivariant layers and a transformer architecture.

Geometric algebra representations

GATr uses a mathematical framework called geometric algebra to represent geometric data and perform computations on that data. By embedding different kinds of geometric data into a single geometric algebra, GATr can process a variety of geometric data types, making it suitable for a wide range of applications without requiring modifications to the network architecture.
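
To make the idea of a single shared representation concrete, here is a minimal NumPy sketch. It assumes a 16-component multivector (a geometric algebra built on four basis vectors has 2^4 = 16 components) and loosely follows a plane-based projective convention in which planes occupy the vector slots and points occupy the trivector slots. The slot order, the signs and the function names `embed_scalar`, `embed_point` and `embed_plane` are illustrative assumptions, not GATr's actual conventions.

```python
import numpy as np

# Assumed layout (illustration only): 16 components grouped by grade as
# 1 scalar, 4 vectors, 6 bivectors, 4 trivectors, 1 pseudoscalar.
N_COMPONENTS = 16
VECTOR_SLOTS = slice(1, 5)
TRIVECTOR_SLOTS = slice(11, 15)


def embed_scalar(value):
    """Place a plain number in the scalar slot."""
    mv = np.zeros(N_COMPONENTS)
    mv[0] = value
    return mv


def embed_point(xyz):
    """Place a 3D point in the trivector slots with a homogeneous 1 (assumed order)."""
    mv = np.zeros(N_COMPONENTS)
    mv[TRIVECTOR_SLOTS] = [xyz[0], xyz[1], xyz[2], 1.0]
    return mv


def embed_plane(normal, offset):
    """Place a plane (normal and offset) in the vector slots (assumed order)."""
    mv = np.zeros(N_COMPONENTS)
    mv[VECTOR_SLOTS] = [offset, normal[0], normal[1], normal[2]]
    return mv


# Three different kinds of geometric data become three tokens of identical shape.
tokens = np.stack([
    embed_scalar(2.5),
    embed_point([1.0, 2.0, 3.0]),
    embed_plane([0.0, 0.0, 1.0], 0.5),
])
print(tokens.shape)
```

Because every object ends up as a token of the same size, a network that consumes these tokens does not need a separate input branch per data type.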

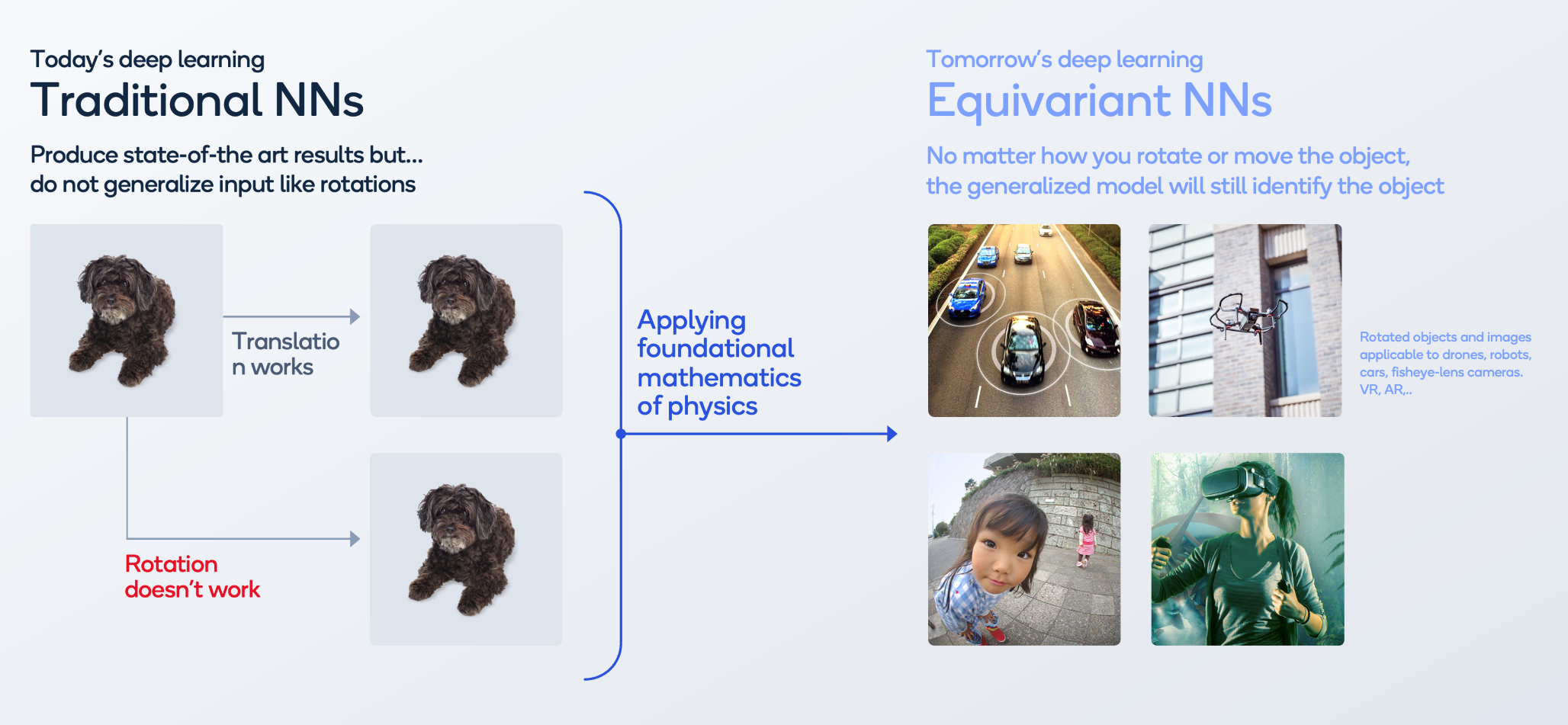

Equivariant layers

The innovation we bring with equivariant neural networks is that no matter how you rotate or move an object, the model will still identify it, because its outputs rotate and move along with its inputs. This is key for improving the data efficiency of AI-powered robots: the model does not need to see every pose of an object during training.

When we transform network inputs, the outputs transform consistently.
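
The property in this caption can be checked numerically. The sketch below is a toy example in NumPy, not a GATr layer: `toy_layer` (which pulls every point halfway toward the centroid) and `relu_layer` are hypothetical stand-ins chosen so that one is equivariant to rotations and translations and the other is not.

```python
import numpy as np


def random_rotation(rng):
    """Draw a random 3x3 rotation matrix via QR decomposition."""
    q, r = np.linalg.qr(rng.normal(size=(3, 3)))
    q = q * np.sign(np.diag(r))      # fix column signs
    if np.linalg.det(q) < 0:         # ensure a proper rotation (det = +1)
        q[:, 0] = -q[:, 0]
    return q


def toy_layer(points):
    """Equivariant toy layer: move each point halfway toward the centroid."""
    centroid = points.mean(axis=0, keepdims=True)
    return 0.5 * (points + centroid)


def relu_layer(points):
    """Non-equivariant contrast: a coordinate-wise ReLU depends on the axes."""
    return np.maximum(points, 0.0)


def equivariance_error(layer, points, rotation, shift):
    """Largest gap between layer(transform(x)) and transform(layer(x))."""
    def transform(p):
        return p @ rotation.T + shift
    return np.abs(layer(transform(points)) - transform(layer(points))).max()


rng = np.random.default_rng(0)
points = rng.normal(size=(10, 3))
rotation = random_rotation(rng)
shift = rng.normal(size=3)

print(equivariance_error(toy_layer, points, rotation, shift))   # close to zero
print(equivariance_error(relu_layer, points, rotation, shift))  # clearly nonzero
```

An equivariant layer gives the same answer whether the transformation is applied before or after it, so the first error is at the level of floating-point rounding.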

Transformer architecture

GATr is based on the transformer architecture, one of the most successful generative AI architectures. The fundamental operation in a transformer is called self-attention, for which we propose an equivariant alternative while preserving the excellent scalability properties of classical self-attention.
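
One way to see why attention can be made equivariant: if the attention weights depend only on inner products between tokens, they do not change when all tokens are rotated together. The NumPy sketch below is a deliberately simplified assumption, with no learned weights and plain 3D vectors used as queries, keys and values. It is not the attention mechanism GATr uses, and it covers rotations only, not translations.

```python
import numpy as np


def inner_product_attention(points):
    """Self-attention where queries, keys and values are the 3D points themselves."""
    scores = points @ points.T / np.sqrt(points.shape[1])
    scores = scores - scores.max(axis=1, keepdims=True)   # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=1, keepdims=True)
    return weights @ points


# A fixed rotation of 0.7 radians about the z-axis.
angle = 0.7
rotation = np.array([
    [np.cos(angle), -np.sin(angle), 0.0],
    [np.sin(angle),  np.cos(angle), 0.0],
    [0.0,            0.0,           1.0],
])

rng = np.random.default_rng(0)
points = rng.normal(size=(5, 3))

rotated_then_attended = inner_product_attention(points @ rotation.T)
attended_then_rotated = inner_product_attention(points) @ rotation.T
print(np.allclose(rotated_then_attended, attended_then_rotated))
```

The scores are unchanged by the rotation because rotating both vectors leaves their inner product intact, so only the values rotate, and the output rotates with them.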

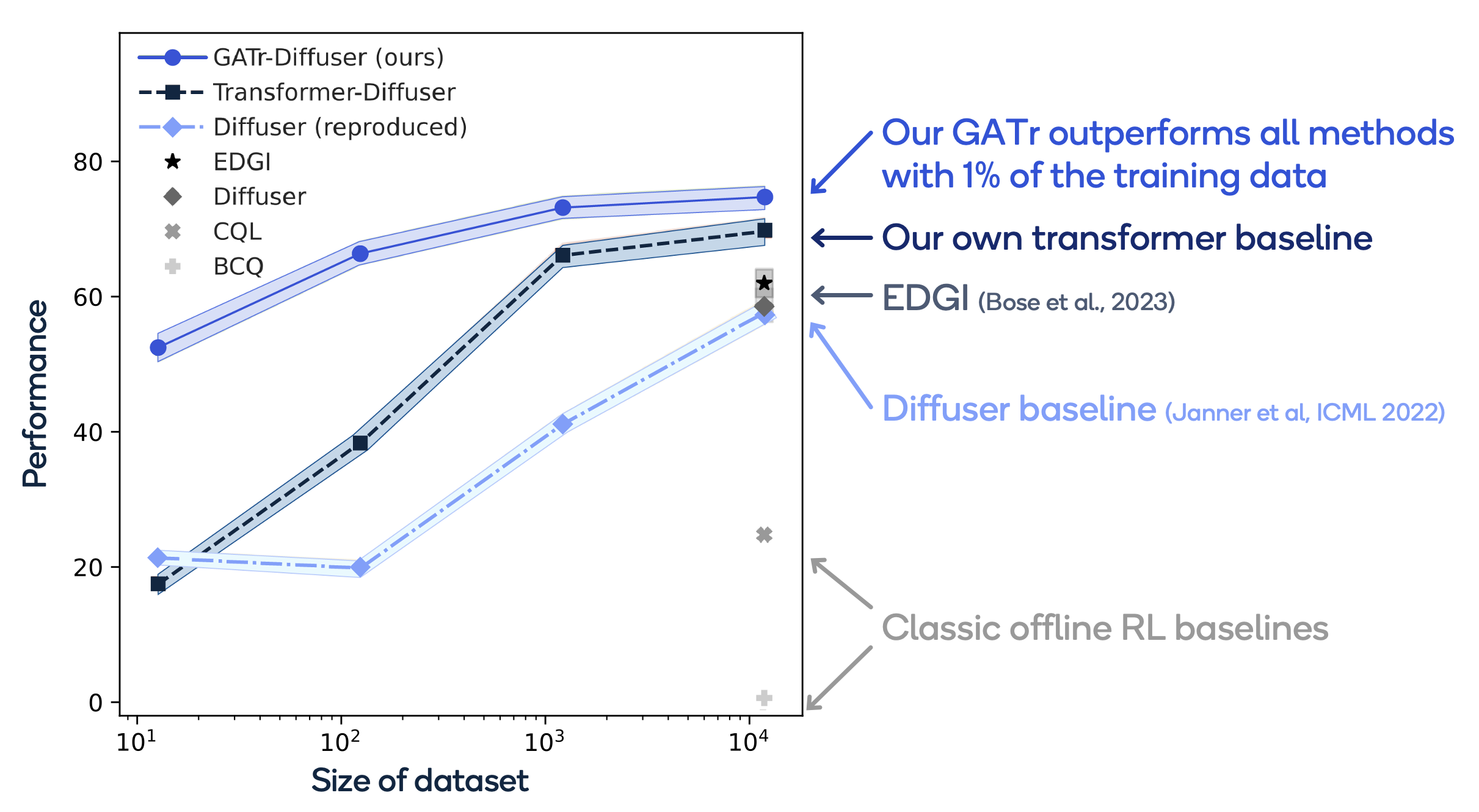

GATr performs well even with little data.

GATr outperforms other state-of-the-art architectures

Planning a path for a robot can be viewed much like generating an image with a diffusion model, except that instead of denoising an image we denoise a robot trajectory. Furthermore, we use GATr as the denoising network, rather than the more standard U-Net.
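
The denoising view of planning can be sketched with a toy sampler. This is a minimal DDPM-style reverse loop in NumPy, not the implementation used in the experiments: the noise predictor is a hypothetical oracle that already knows a single target trajectory and stands in for a trained network such as GATr. The schedule values, the eight-waypoint trajectory and all function names are assumptions made for illustration.

```python
import numpy as np


def make_schedule(num_steps=50, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule (assumed values)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    return betas, alphas, alpha_bars


def oracle_noise_predictor(target, alpha_bars):
    """Stand-in for a trained denoiser: exact noise estimate for one known target."""
    def predict_noise(noisy, step):
        signal = np.sqrt(alpha_bars[step]) * target
        return (noisy - signal) / np.sqrt(1.0 - alpha_bars[step])
    return predict_noise


def sample_trajectory(predict_noise, shape, schedule, rng):
    """Start from pure noise and denoise step by step into a trajectory."""
    betas, alphas, alpha_bars = schedule
    traj = rng.normal(size=shape)
    for step in reversed(range(len(betas))):
        eps = predict_noise(traj, step)
        coeff = betas[step] / np.sqrt(1.0 - alpha_bars[step])
        traj = (traj - coeff * eps) / np.sqrt(alphas[step])
        if step > 0:  # no fresh noise is added on the final step
            traj = traj + np.sqrt(betas[step]) * rng.normal(size=shape)
    return traj


rng = np.random.default_rng(0)
schedule = make_schedule()
# Hypothetical target: eight waypoints on a straight line in 3D.
target = np.linspace([0.0, 0.0, 0.0], [1.0, 2.0, 0.5], num=8)
predict_noise = oracle_noise_predictor(target, schedule[2])
trajectory = sample_trajectory(predict_noise, target.shape, schedule, rng)
print(np.abs(trajectory - target).max())
```

With the oracle, the loop recovers the target waypoints. In a real planner, the oracle would be replaced by a learned network conditioned on the scene, which is the role the text assigns to GATr.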

We tested our method on several tasks, including robotic block stacking. In the graph above, our method outperforms all previous methods with 1% of the training data. As we scale the number of items, our method continues to outperform. GATr scales to tens of thousands of tokens, outperforming the geometric deep learning baselines.

Towards making embodied AI a reality

We believe that embodied AI will benefit society in manufacturing, healthcare and more. Our model architecture for data-efficient robot motion planning is just one of the embodied AI projects that the Qualcomm AI Research team is working on. I also recommend our work on “Uncertainty-driven Affordance Discovery for Efficient Robotic Manipulation”, which helps AI-powered robots make decisions.

On-device generative AI will continue to play a fundamental role in embodied AI. Furthermore, we believe that equivariance allows AI to understand 3D images and videos more efficiently. Stay tuned for more research in this direction.

References

1: United Nations, World Population Prospects, 2017

2: Bloomberg, 2023

Taco Cohen

Principal Engineer, Qualcomm Technologies Netherlands B.V.