This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm.

The Snapdragon Ride Vision System is designed to enhance vehicle perception for safer driving experiences

Today’s drivers reap the benefits of active safety features in their vehicles. Automatic emergency braking, lane departure warnings, blind spot detection and other crash-avoidance technologies have already made our roads safer. In fact, front-to-rear crashes with injuries have gone down by 56% thanks to automatic emergency braking; backing crashes have fallen by 78% with the help of rear automatic braking; and numerous other reported crashes and insurance claims have decreased because of safety technologies that are now fundamental features in many passenger vehicles. [1]

These benefits will only grow as more automakers incorporate new technologies into their vehicles. The advanced driver assistance systems (ADAS) being developed today will be able to anticipate imminent dangers and provide warnings or automated functionality to avoid them, making travel even safer and more convenient. Computer vision (CV) is the foundation of ADAS, and it underpins the Snapdragon Ride Vision System.

How computer vision uses cameras to improve road safety

Classifying the various types of objects in a roadway environment can be challenging, but with the ability to detect detailed features, cameras are the optimal sensing modality for the job. While other sensors like radar and lidar can provide the precise positioning information required for scaling to higher levels of autonomy, the camera remains an essential sensor because it allows the computer vision system to gather low-level information needed within a given scenario. At the core of the Snapdragon Ride Vision System are neural networks (NNs) that come in various sizes and types for broadly classifying the surrounding environment including objects, signs, lights, road features and more. Building an environment model containing all modalities of sensors allows automakers to extend the operational design domain (ODD) of ADAS features and scale from basic safety to autonomous driving.

By the end of the decade almost all new production vehicles will likely have at least one camera of some type, enabling computer vision that can support everything from safety up to full autonomy. Level 2 (L2) autonomy (combining adaptive cruise control and lane centering with the driver fully in-loop) is emerging as a design baseline for vehicles today, and vision perception carries a big load to enable system functionalities. These L2 features scale up to higher speeds and include additional ODDs, such as urban environments.

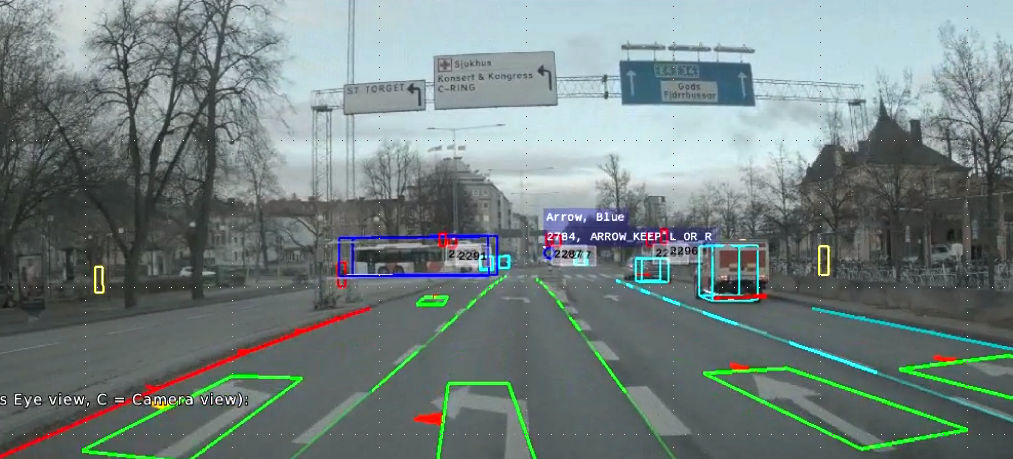

Traffic sign recognition as a form of object classification

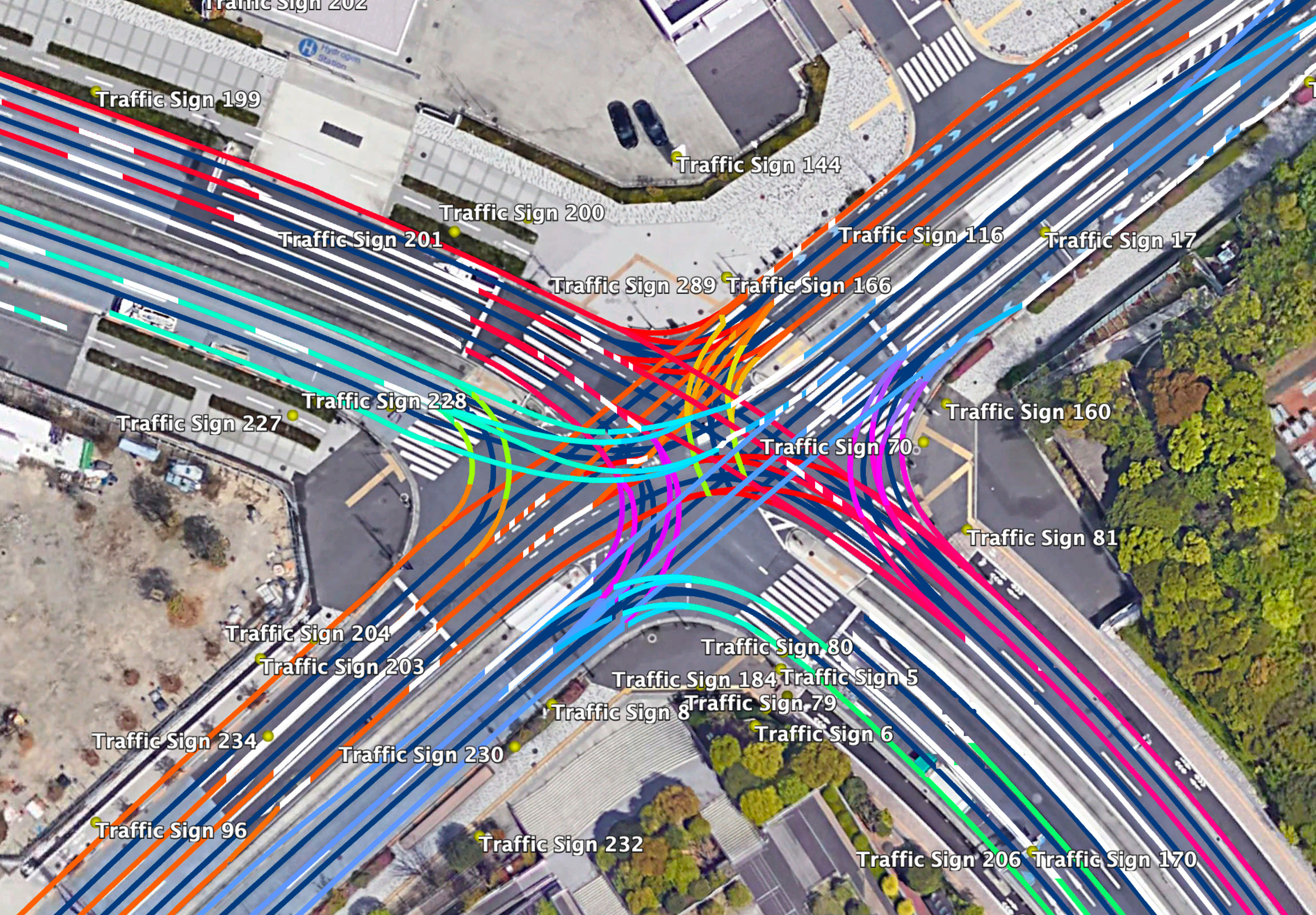

Developing functions that scale and that expose lower-level information through application programming interfaces (APIs) will be essential for object classification. For example, traffic sign recognition (TSR) starts by establishing confidence in a sign’s existence, including its position, shape, color, value, text and overall relevance to the vehicle. A developer may only need sign type, value and relevancy to build their intended functionality, while higher levels of functionality may also require position and confidence. Having a single CV solution that scales allows developers to align their API usage with the level of autonomy they want to achieve. The Snapdragon Ride SDK provides developers with such scalability and flexibility as they interface with the vision stack.
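
To make the idea of a scalable TSR interface concrete, here is a minimal Python sketch. It is not the Snapdragon Ride SDK API; the `TrafficSignDetection` type, its field names, and both helper functions are hypothetical and only mirror the sign attributes listed above. A basic feature reads three fields, while a more demanding one also uses position and confidence.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class TrafficSignDetection:
    """Hypothetical per-sign record; fields mirror the attributes named in the text."""
    sign_type: str                          # e.g. "speed_limit"
    value: Optional[int]                    # e.g. 50, or None if the sign has no number
    relevant: bool                          # does the sign apply to the ego vehicle?
    confidence: float                       # existence confidence in [0, 1]
    position_m: Tuple[float, float, float]  # (x ahead, y left, z up) in metres
    shape: str = "unknown"
    color: str = "unknown"
    text: str = ""


def basic_view(det: TrafficSignDetection) -> Tuple[str, Optional[int], bool]:
    """Smallest useful slice of the record: type, value and relevancy."""
    return (det.sign_type, det.value, det.relevant)


def current_speed_limit(detections: List[TrafficSignDetection],
                        min_confidence: float = 0.8) -> Optional[int]:
    """Value of the nearest relevant, confident speed-limit sign, or None."""
    candidates = [
        d for d in detections
        if d.sign_type == "speed_limit"
        and d.relevant
        and d.value is not None
        and d.confidence >= min_confidence
    ]
    if not candidates:
        return None
    # Nearest sign by longitudinal distance ahead of the vehicle.
    return min(candidates, key=lambda d: d.position_m[0]).value
```

The same record serves both consumers, so a feature can start with `basic_view` and later adopt position and confidence without switching to a different perception output.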

Build new features for ODDs, like unclassified moving objects

With the imminent extension of ODDs, the list of potential ADAS functions has increased immensely. Decomposing vehicle control algorithms from APIs to the detection function is essential when considering new features for the targeted ODDs. Building new functionality on small data sets with robust labeling speeds up the development process to address market needs proactively. For example, new functions like unclassified moving objects (UMO) in our vision stack provide information about moving objects that don’t meet the classification criteria or are new to the object detection NN. This function evolves from free space, which gives information about the road plane and extends to vertical objects with dimensions. Overhanging load, pictured above, is a good example of a use case for UMO. We can use UMO detections as a trigger for active learning from the fleet.
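
As a rough illustration of using UMO detections as an active-learning trigger, the Python sketch below flags a frame when a tracked object is moving but no known class scores well. The `TrackedObject` fields, the thresholds, and the persistence rule are assumptions chosen for the example, not details of the actual vision stack.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class TrackedObject:
    """Hypothetical tracker output for one object."""
    track_id: int
    speed_mps: float         # estimated object speed
    best_class_score: float  # highest score across all known classes, in [0, 1]
    frames_seen: int         # how many consecutive frames the track has existed


def is_umo(obj: TrackedObject,
           min_speed_mps: float = 0.5,
           class_threshold: float = 0.5) -> bool:
    """Moving, but not confidently assigned to any known class."""
    return obj.speed_mps >= min_speed_mps and obj.best_class_score < class_threshold


def should_flag_frame(objects: Iterable[TrackedObject], min_frames: int = 5) -> bool:
    """Flag the frame for fleet data collection if a persistent UMO is present.

    Requiring a minimum track age is an assumed way to filter one-frame noise.
    """
    return any(is_umo(o) and o.frames_seen >= min_frames for o in objects)
```

Flagged frames would then be candidates for labeling and retraining, which is one way such detections could feed back into the object detection NN.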

CV uses lateral control to recognize the road

Computer vision APIs can also evolve to produce low-level information such as road markings, position/size for multiple lanes, road edges and lane/path prediction for cases where lanes are not clearly visible or marked. The lane departure warning (LDW) feature that mitigates crashes by alerting the driver when the vehicle is exiting the lane is a good example of how building trust and validating at scale can support the evolution of safety technologies. Development for the LDW feature started with employing a convolutional neural network (CNN) to support lane detection. The next stage included lane keep assist, where the system automatically intervenes and brings the vehicle back to the center of the lane. Next came lane-centering systems that support traversing on/off ramps, merges, roundabouts and intersections.
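
The warning step of LDW can be sketched with a time-to-line-crossing check, a common formulation that is used here as an assumption rather than as the method described in this post. The sketch presumes that lane detection already supplies the distance to each lane boundary and the vehicle's lateral speed; the function names, sign convention, and 1-second threshold are illustrative.

```python
import math
from typing import Optional


def time_to_line_crossing(dist_to_line_m: float, lateral_speed_mps: float) -> float:
    """Seconds until the boundary is reached; infinite if not drifting toward it."""
    if lateral_speed_mps <= 0:
        return math.inf
    return max(dist_to_line_m, 0.0) / lateral_speed_mps


def lane_departure_warning(dist_left_m: float,
                           dist_right_m: float,
                           lateral_speed_mps: float,
                           turn_signal_on: bool = False,
                           tlc_threshold_s: float = 1.0) -> Optional[str]:
    """Return "left", "right", or None.

    lateral_speed_mps is positive when the vehicle drifts to the left.
    An active turn signal is treated as an intentional lane change.
    """
    if turn_signal_on:
        return None
    if time_to_line_crossing(dist_left_m, lateral_speed_mps) < tlc_threshold_s:
        return "left"
    if time_to_line_crossing(dist_right_m, -lateral_speed_mps) < tlc_threshold_s:
        return "right"
    return None
```

Lane keep assist and lane centering would replace the returned warning with a steering intervention, but they could build on the same lane geometry inputs.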

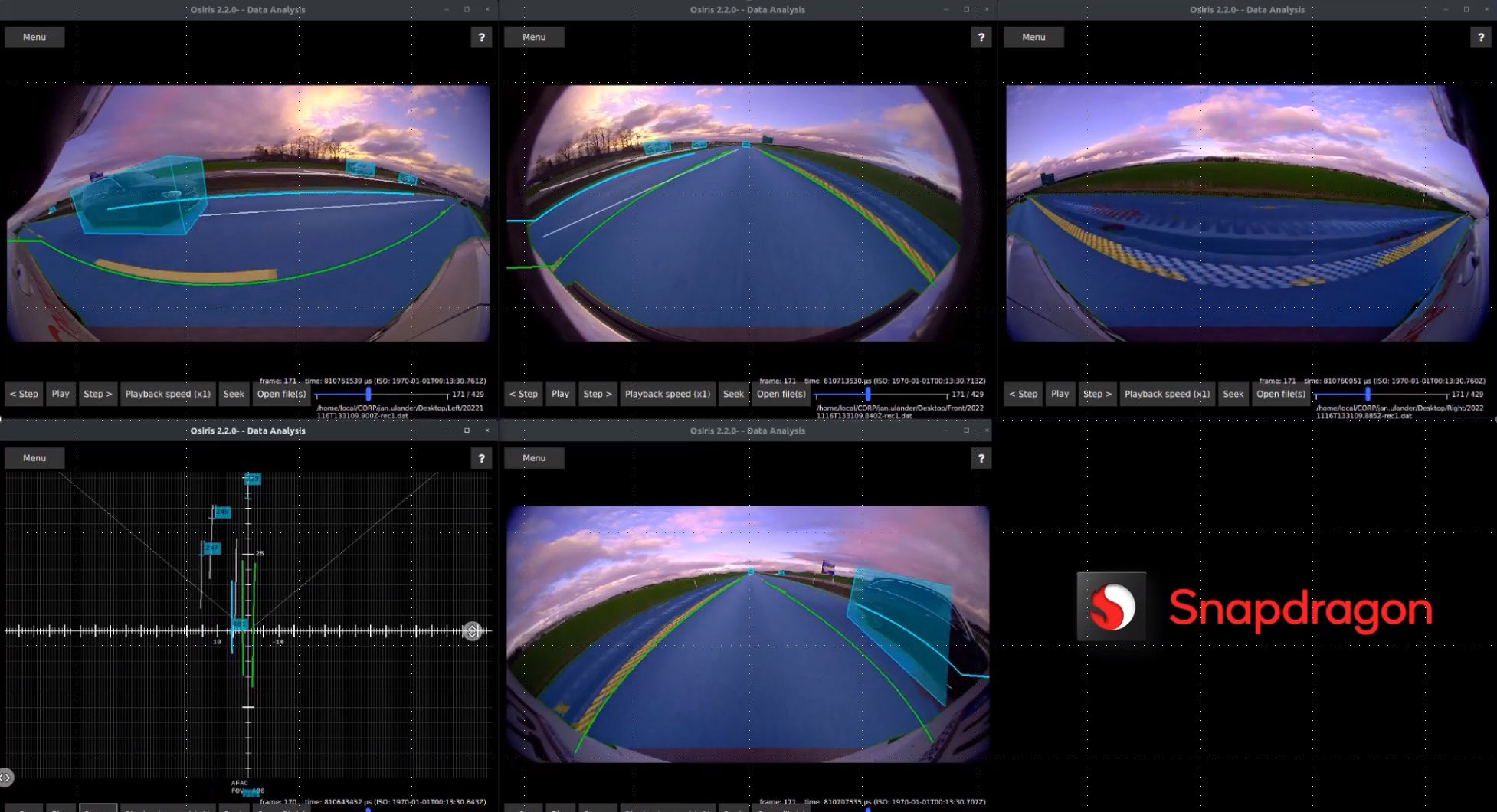

Snapdragon SoCs can support efficient AI processing

Extending ODDs towards full Level 3 highway autonomy requires additional camera angles, which increases the data load correspondingly. Processing this data requires highly efficient AI processing, which we address with an approach we call hardware/software co-design. In this approach, purpose-built Neural Processing Unit (NPU) cores on our high-performance Snapdragon SoCs support sensor perception, and software development tools efficiently map DNNs to these cores, allowing us to achieve leading inferences per second (IPS)/watt and IPS/TOPS KPIs on the market. The Snapdragon Ride Platform can now handle 25+ camera feeds at a time for various ADAS neural nets and safety/security applications. These software development tools are part of our Snapdragon Ride SDK, giving developers a convenient way to benefit from hardware/software co-design without having to worry about the underlying low-level details.
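
For readers unfamiliar with the two efficiency KPIs, the short Python sketch below shows how they are computed. The function name and parameters are hypothetical, and the numbers in the usage note are made up for the arithmetic only; they are not measurements of any Snapdragon product.

```python
from typing import Dict


def efficiency_kpis(inferences: int,
                    elapsed_s: float,
                    avg_power_w: float,
                    peak_tops: float) -> Dict[str, float]:
    """Compute IPS, IPS/watt and IPS/TOPS for one benchmark run.

    IPS/watt relates throughput to power draw; IPS/TOPS relates it to the
    accelerator's peak compute, which indicates how well a network is mapped.
    """
    ips = inferences / elapsed_s
    return {
        "ips": ips,
        "ips_per_watt": ips / avg_power_w,
        "ips_per_tops": ips / peak_tops,
    }
```

With illustrative inputs of 3,000 inferences in 10 seconds at 15 W on a 30 TOPS accelerator, the function yields 300 IPS, 20 IPS/watt and 10 IPS/TOPS.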

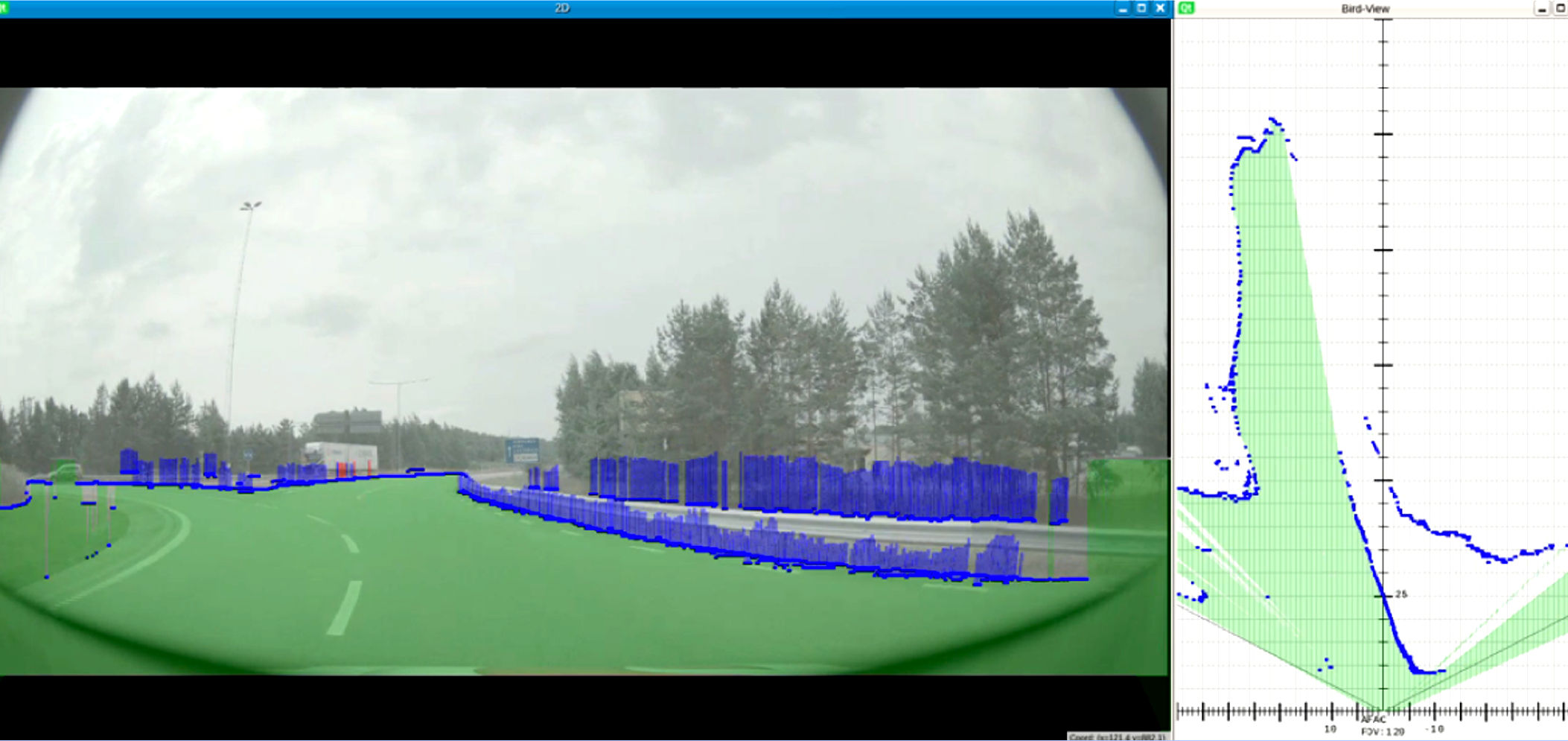

Checking blind spots with a bird’s-eye view

To address potential blind spots in the environment around the vehicle, BEVFormer (bird’s-eye-view) networks use low-level data to detect unclassified objects, reaching almost 100% detection rates at the system level. Representing surrounding scenes using BEV perception is intuitive and fusion-friendly, which makes it well suited to downstream modules such as planning and control.

Setting the standards for computer vision systems

When equipped with ADAS systems, every car on the road is a rich source of data. Gathering this data and leveraging it for various development and validation techniques helps to scale faster. Shadow mode allows the software to be deployed prior to a production release, using learnings from large fleets to identify any issues or weaknesses that may impact performance or the user experience. Having efficient data pipelines and frame selection simplifies the process of deploying these solutions across large fleets, leveraging bandwidth from technologies already in place. In collaboration with our customers, Qualcomm Technologies is building large and diverse validation datasets to better understand key performance indicators (KPIs) across the world.
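
One way to picture shadow mode combined with frame selection is the Python sketch below. It assumes the shadow software and the production software each emit a comparable numeric output per frame (for example, an estimated lateral offset) and uploads only the frames where they disagree most, within a fixed budget. The `FrameRecord` type, the disagreement measure, and the budget are all hypothetical.

```python
import heapq
from dataclasses import dataclass
from typing import List


@dataclass
class FrameRecord:
    """Hypothetical per-frame log entry from a vehicle running shadow mode."""
    frame_id: int
    production_value: float  # output of the released software
    shadow_value: float      # output of the candidate software running silently


def select_frames(records: List[FrameRecord],
                  min_disagreement: float,
                  budget: int) -> List[int]:
    """Return up to `budget` frame IDs, largest disagreement first."""
    scored = [(abs(r.shadow_value - r.production_value), r.frame_id) for r in records]
    flagged = [s for s in scored if s[0] >= min_disagreement]
    # nlargest keeps only the most informative frames to limit upload bandwidth.
    return [frame_id for _, frame_id in heapq.nlargest(budget, flagged)]
```

Capping the number of uploaded frames is the design choice that keeps bandwidth use predictable as the fleet grows.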

Standards for the CV system are essential to ensuring perception sensors and technologies are available for a wide range of applications and quick bring-up for customers globally. Designing to industry standards such as the recently defined ISO 23150 for API and ADAS sensors allows for system re-use and drop-in replacements for other perception stacks and/or fusion models.

1: Real-world benefits of crash avoidance technologies. Insurance Institute for Highway Safety. Retrieved in August 2023 from: https://www.iihs.org/media/290e24fd-a8ab-4f07-9d92-737b909a4b5e/oOlxAw/Topics/ADVANCED%20DRIVER%20ASSISTANCE/IIHS-HLDI-CA-benefits.pdf

Rajat Sagar

Senior Director of Product Management, Qualcomm Technologies, Inc.