This blog post was originally published by D3. It is reprinted here with the permission of D3.

We’ve found that a lot of innovators are considering including millimeter wave radar in their solutions, but some are not familiar with the sensing capabilities of radar when compared with vision, lidar, or ultrasonics. I want to review how radar compares with these other sensing modalities and explain where the technologies differ.

Millimeter wave radar is used in applications like automotive, industrial, robotics, medical, and facilities monitoring. For sensing on the exterior of vehicles in motion, most applications are already on or are moving to 77 GHz millimeter wave radar. 77 GHz radar is also used for level sensing, for example in industrial tanks. Other applications, including people counting and tracking, healthcare monitoring, and robotics sensing, use 60 GHz.

Both 60 and 77 GHz millimeter wave radar work by sending out energy across the band and comparing what is sent out with what comes back. Radar differs from most vision systems because it relies on active illumination of the scene rather than ambient light. The exceptions are lidar and infrared vision, which also transmit their own illumination. With lidar, a laser beam is scanned progressively across the scene, illuminating one small section at a time. For infrared vision and millimeter wave radar, the illumination is spread broadly across the entire scene.

By comparing the millimeter wave receive signal against the transmit signal, we can use the frequency of peaks in the return energy to identify the range of objects in the scene. We can also analyze the phase of the signal across multiple transmissions to determine the Doppler speed of the objects. If we use multiple transmitters and receivers (multiple-input, multiple-output, or MIMO), we can identify the direction of arrival of the energy to pinpoint the azimuth and elevation of objects. With all this information, we build up a rich “point cloud” of returns. An example is shown in the figure below. It’s called a point cloud because that’s just what it looks like when visualized. The points can further be “clustered” so that we can identify objects in the scene and their motion.
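The three measurements described above can be sketched with the textbook relations for a frequency-modulated (FMCW) chirp radar. The post does not say which waveform the sensors use, so FMCW is an assumption here, and every parameter value below (center frequency, chirp slope, chirp period, antenna spacing) is hypothetical and chosen only to make the arithmetic easy to follow. Sign conventions for velocity and angle vary between implementations.

```python
import math

C = 299_792_458.0  # speed of light, m/s

# Hypothetical example parameters, not taken from any specific sensor.
CENTER_FREQ_HZ = 60e9
WAVELENGTH_M = C / CENTER_FREQ_HZ
CHIRP_SLOPE_HZ_PER_S = 4e9 / 40e-6  # 4 GHz swept in 40 microseconds
CHIRP_PERIOD_S = 100e-6             # time between consecutive chirps
RX_SPACING_M = WAVELENGTH_M / 2     # spacing between two receive antennas


def range_from_beat(beat_hz, slope_hz_per_s):
    """Range from the beat frequency between transmitted and received chirp.

    The echo is delayed by 2R/c, which appears as a frequency offset of
    slope * 2R/c when mixed with the transmit signal.
    """
    return C * beat_hz / (2.0 * slope_hz_per_s)


def radial_velocity(phase_step_rad, wavelength_m, chirp_period_s):
    """Doppler speed from the phase change between consecutive chirps."""
    return wavelength_m * phase_step_rad / (4.0 * math.pi * chirp_period_s)


def arrival_angle_deg(phase_diff_rad, wavelength_m, spacing_m):
    """Direction of arrival from the phase difference between two receivers."""
    sine = wavelength_m * phase_diff_rad / (2.0 * math.pi * spacing_m)
    return math.degrees(math.asin(sine))


if __name__ == "__main__":
    r = range_from_beat(2e6, CHIRP_SLOPE_HZ_PER_S)
    v = radial_velocity(math.pi / 2, WAVELENGTH_M, CHIRP_PERIOD_S)
    a = arrival_angle_deg(math.pi / 2, WAVELENGTH_M, RX_SPACING_M)
    print(f"range:    {r:.2f} m")    # 3.00 m
    print(f"velocity: {v:.2f} m/s")  # 6.25 m/s
    print(f"angle:    {a:.1f} deg")  # 30.0 deg
```

Each return that yields a range, a velocity, and an angle becomes one point in the point cloud. A real signal chain would find the beat frequencies and phases with FFTs over many samples, chirps, and antennas rather than taking them as given.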

Point Cloud with Clustering
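To make the clustering idea concrete, here is a minimal sketch that groups points by proximity: any point within a chosen distance of a cluster member joins that cluster. This is a simple stand-in for illustration, not necessarily the method used to produce the figure. The function name `cluster_points`, the `max_gap` threshold, and the sample coordinates are all made up for this example.

```python
import math


def cluster_points(points, max_gap):
    """Label each point with a cluster index.

    Two points share a cluster if they can be linked by a chain of points
    where each hop is at most max_gap apart (single-linkage grouping).
    """
    labels = [-1] * len(points)  # -1 means "not yet assigned"
    next_label = 0
    for seed in range(len(points)):
        if labels[seed] != -1:
            continue
        labels[seed] = next_label
        stack = [seed]
        while stack:
            i = stack.pop()
            for j in range(len(points)):
                if labels[j] == -1 and math.dist(points[i], points[j]) <= max_gap:
                    labels[j] = next_label
                    stack.append(j)
        next_label += 1
    return labels


# Hypothetical (x, y, z) returns in meters: two separate objects.
points = [
    (1.0, 2.0, 0.0),
    (1.1, 2.1, 0.0),
    (0.9, 2.0, 0.1),
    (5.0, 5.0, 1.0),
    (5.2, 5.1, 1.0),
]
labels = cluster_points(points, max_gap=0.5)
print(labels)  # [0, 0, 0, 1, 1]
```

Production systems typically also use each point's Doppler speed when grouping, and track clusters from frame to frame to follow an object's motion.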

Vision systems are very good at sensing some details in the scene, including edges, colors, and shapes. This is because the wavelength of light is much smaller, so even microscopic details provide information. Millimeter wave radar has a much longer wavelength (a few millimeters at 60 to 77 GHz), so features in a scene that are smaller than this do not provide information individually. Vision systems, however, struggle to determine the distance to an object precisely. They need complex artificial intelligence to estimate the distances and speeds of objects in the scene.
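The wavelength gap is easy to quantify with wavelength = c / f. The short sketch below computes it for the two radar bands named in this post and compares them with green light at 550 nm, a value chosen here as a representative visible wavelength rather than one taken from the post.

```python
C = 299_792_458.0  # speed of light, m/s

GREEN_LIGHT_M = 550e-9  # representative visible wavelength (assumption)


def wavelength_m(frequency_hz):
    """Free-space wavelength for a given frequency."""
    return C / frequency_hz


for band_ghz in (60, 77):
    lam = wavelength_m(band_ghz * 1e9)
    ratio = lam / GREEN_LIGHT_M
    print(f"{band_ghz} GHz: {lam * 1000:.2f} mm, about {ratio:,.0f}x green light")
```

At both bands the radar wavelength comes out to several millimeters, thousands of times longer than visible light, which is why fine surface detail that a camera resolves easily is not individually visible to radar.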

Millimeter wave radar, on the other hand, excels at measuring the distance to each return, as well as its Doppler speed (toward or away from the sensor). This can be done so precisely that a sensor can measure your heart and breathing rate from meters away and can measure distances with a precision of less than one millimeter. Depending on the configuration, radar can detect objects like cars up to 300 meters (around 1000 feet) away. Millimeter wave energy is invisible to the human eye, and because the sensor provides its own illumination it needs no ambient light, so it can operate in total darkness. It is also better at penetrating rain, fog, and snow than vision systems. Infrared vision is similarly invisible to the naked eye; however, it can be difficult to tune the exposure of an infrared system to achieve a good picture across the entire scene. Infrared systems also struggle to match the range of radar and still suffer from environmental disturbances such as rain, snow, and fog.
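One common way to reach sub-millimeter precision is to track the phase of a return rather than its range bin. The post does not describe the exact method, so treat this as the standard phase-to-displacement relation, not the author's implementation. Because the signal travels to the target and back, a phase change of 2π corresponds to half a wavelength of motion. The 60 GHz carrier and the example phase value are assumptions for illustration.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def displacement_mm(phase_change_rad, carrier_hz):
    """Target motion along the line of sight implied by a change in echo phase.

    The round trip doubles the path, so displacement = wavelength * phase / (4 * pi).
    """
    wavelength_m = C / carrier_hz
    return wavelength_m * phase_change_rad / (4.0 * math.pi) * 1000.0


# Hypothetical example: a 0.5 rad phase change seen by a 60 GHz sensor.
move = displacement_mm(0.5, 60e9)
print(f"{move:.3f} mm")  # roughly 0.2 mm
```

Chest motion from breathing and heartbeat shows up as a small periodic displacement of this kind, so the rate can be estimated from how the phase of a person's return oscillates over time.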

Artificial intelligence can be applied to millimeter wave radar systems just as it can be applied to vision systems. Vision AI systems are based on what objects look like. With millimeter wave radar, the AI operates on what the objects are doing, i.e., how they are moving at a macroscopic and microscopic level. For example, with millimeter wave radar, you can track someone in three dimensions as they move about a space, detect their activity type, or detect hand gestures. You can also characterize how objects vibrate to differentiate living from non-living objects. These techniques are useful for detecting falls, classifying activity, and enabling touch-free control as a human-machine interface.

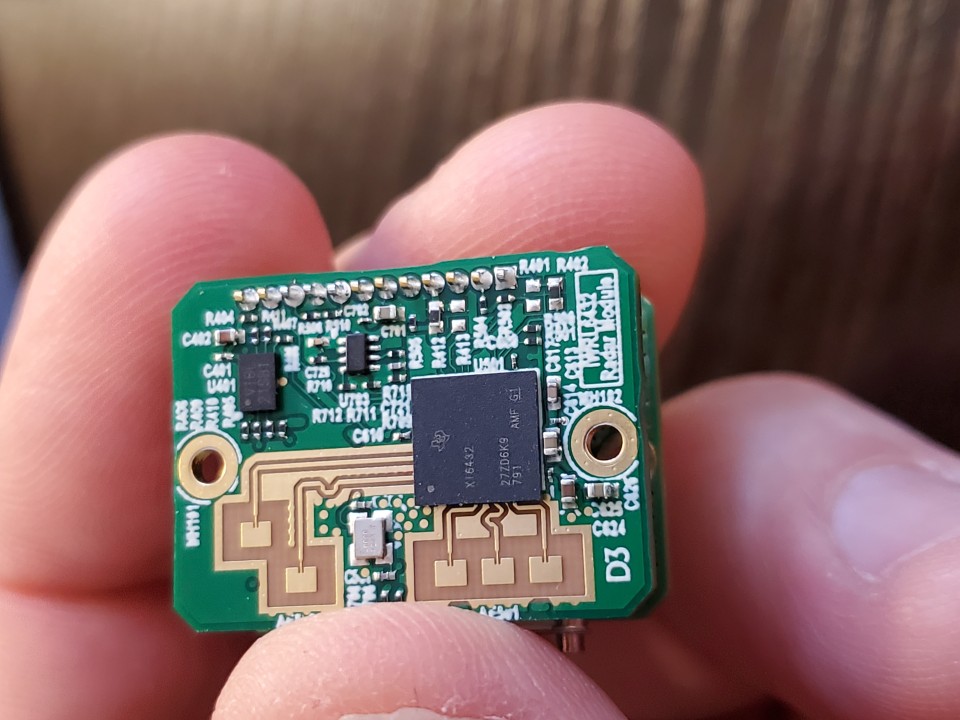

The sensors themselves are another interesting aspect of millimeter wave radar. Cameras and their illumination sources can be somewhat large and can contain moving parts (for focus or scanning). Millimeter wave radar is solid state and has no moving parts. It can also be quite small. See the figure below, which shows one of our radar sensors. This model is approximately 25 x 25 x 15 mm (1 x 1 x 0.6 inches).

D3 RS-L6432U Sensor

I hope this helped identify some of the differences between millimeter wave radar and other sensing modalities. Obviously, there is a ton more that could be said about this topic. D3 has invested years developing millimeter wave expertise in a variety of industries and applications. If you’d like to discuss radar sensing and how it could work for you, please reach out! We have a landing page for millimeter wave radar where you can get started.

Tom Mayo

Lead Product Line Manager, D3