This blog post was originally published by D3. It is reprinted here with the permission of D3.

There are a variety of applications where spatial sensing is beneficial. Applications such as automotive parking, robotics collision avoidance, and even people counting and tracking can benefit from detecting objects in the environment, either around a vehicle or within a facility. D3 works with Texas Instruments millimeter wave radar devices for many applications like these.

These sensors detect objects using frequency-modulated continuous-wave (FMCW) transmissions, which are mixed with the received signals to yield an intermediate frequency (IF). The IF signal is sampled with an integrated analog-to-digital converter and then processed with Fourier transforms to detect the range and Doppler speed of objects. With multiple-input, multiple-output (MIMO) antenna arrays, comparing the phases of the signals received at the different antennas yields the angle to each detected object. With these capabilities, the radar sensor returns a 3-dimensional point cloud that maps the detections in the area along with their Doppler speed.

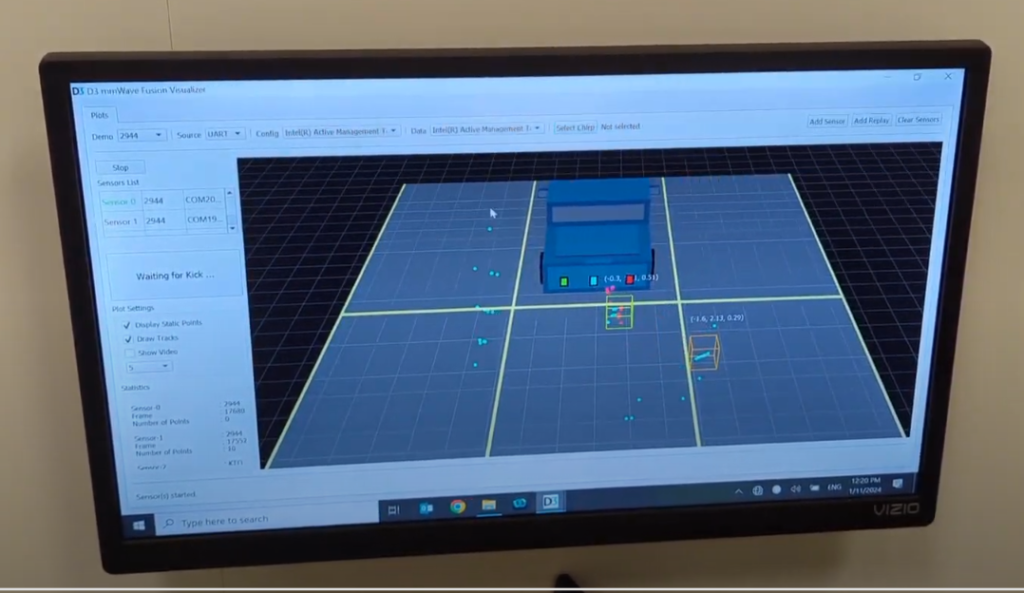
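To make the FMCW relationships above concrete, here is a minimal Python sketch of the standard textbook formulas that relate IF frequency to range, chirp-to-chirp phase change to Doppler speed, and antenna-to-antenna phase difference to angle. The function names, the 77 GHz carrier, and the chirp parameters are assumptions chosen for illustration. They are not taken from any specific TI device configuration, and a real processing chain works on FFT bins rather than single values.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def range_from_if(f_if_hz, slope_hz_per_s):
    """Range from the IF (beat) frequency: R = c * f_IF / (2 * S)."""
    return C * f_if_hz / (2.0 * slope_hz_per_s)


def velocity_from_phase(delta_phi_rad, wavelength_m, chirp_period_s):
    """Radial speed from the phase change between consecutive chirps:
    v = lambda * dphi / (4 * pi * Tc)."""
    return wavelength_m * delta_phi_rad / (4.0 * math.pi * chirp_period_s)


def angle_from_phase(delta_phi_rad, wavelength_m, spacing_m):
    """Angle of arrival from the phase difference between adjacent receive
    antennas: theta = asin(lambda * dphi / (2 * pi * d))."""
    return math.asin(wavelength_m * delta_phi_rad / (2.0 * math.pi * spacing_m))


# Illustrative values only (assumed, not from a datasheet).
wavelength = C / 77e9            # about 3.9 mm for an assumed 77 GHz carrier
slope = 30e6 / 1e-6              # assumed chirp slope of 30 MHz per microsecond

print(range_from_if(2e6, slope))                       # about 10 m
print(velocity_from_phase(math.pi / 4, wavelength, 50e-6))
print(math.degrees(angle_from_phase(math.pi / 2, wavelength, wavelength / 2)))
```

With half-wavelength antenna spacing, a phase difference of π/2 corresponds to an angle of about 30 degrees, which is a convenient sanity check for the angle formula.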

Another important operation that helps make sense of the objects in the scene is clustering and tracking of the point cloud returns. TI has implemented a processing library that groups returns belonging to the same object and then tracks each cluster from frame to frame. This library has been optimized to run on the microprocessor core within the SoC, and it can cluster and track the detected returns in real time.
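The idea of grouping returns and following each group from frame to frame can be sketched in a few lines of Python. This is a deliberately simple stand-in, not TI's library or its algorithm: it groups points by chained distance and matches clusters to existing tracks by nearest centroid. The names `cluster_points`, `centroid`, and `associate`, and the `max_gap` and `gate` parameters, are hypothetical.

```python
import math


def cluster_points(points, max_gap):
    """Group point indices so that every point in a group is within
    max_gap of at least one other point in that group."""
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        members, frontier = [seed], [seed]
        while frontier:
            i = frontier.pop()
            near = [j for j in unvisited
                    if math.dist(points[i], points[j]) <= max_gap]
            for j in near:
                unvisited.remove(j)
            members.extend(near)
            frontier.extend(near)
        clusters.append(sorted(members))
    return sorted(clusters)


def centroid(points, members):
    """Mean position of the points whose indices are in members."""
    n = len(members)
    return tuple(sum(points[i][k] for i in members) / n
                 for k in range(len(points[0])))


def associate(tracks, centroids, gate):
    """Greedy frame-to-frame association: each track takes the nearest
    unclaimed centroid within gate; leftover centroids start new tracks.
    Tracks with no match are dropped in this simplified sketch."""
    updated = {}
    free = list(range(len(centroids)))
    for track_id in sorted(tracks):
        if not free:
            break
        best = min(free, key=lambda j: math.dist(tracks[track_id], centroids[j]))
        if math.dist(tracks[track_id], centroids[best]) <= gate:
            updated[track_id] = centroids[best]
            free.remove(best)
    next_id = max(tracks, default=-1) + 1
    for j in free:
        updated[next_id] = centroids[j]
        next_id += 1
    return updated


# One frame of made-up (x, y, z) detections in meters.
frame = [(0.0, 0.0, 0.0), (0.2, 0.0, 0.0), (5.0, 5.0, 0.0),
         (5.1, 5.0, 0.0), (0.1, 0.1, 0.0)]
groups = cluster_points(frame, max_gap=0.5)
centers = [centroid(frame, g) for g in groups]
tracks = associate({0: (0.0, 0.0, 0.0)}, centers, gate=1.0)
print(groups, tracks)
```

A production tracker would typically also use Doppler speed and a motion model to predict where each track should appear in the next frame. This sketch uses position alone to keep the grouping and association steps visible.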

While clustering and tracking are useful within a single radar sensor, multiple millimeter wave radar devices can also work together to provide a more detailed view of the environment by combining the computed point clouds from several independent devices into one representation of the scene. Think of multiple flashlights in a dark room, each illuminating a different part so that you can see the whole scene at once.

With multiple flashlights, you combine the views of the room in your head without thinking. A processing chain that has to make sense of the scene must do that work explicitly. Fortunately, aggregating point clouds is straightforward for a processor.

As you can imagine, a couple of operations need to be performed for everything to make sense. First, the coordinate system of each sensor needs to be rotated and translated into the platform’s reference coordinate system. For example, if there are radar sensors on the front, rear, left, and right sides of a vehicle, the point cloud coordinates from each sensor can be rotated and translated so that they appear in the front sensor’s frame of reference.
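The rotate-and-translate step can be sketched as follows. This is a minimal illustration that assumes each sensor is mounted level, so only a yaw rotation about the vertical axis is needed, and it assumes an x-forward, y-left, z-up axis convention. The mounting angles and offsets are made-up values, and `sensor_to_platform` and `aggregate` are hypothetical names.

```python
import math


def sensor_to_platform(points, yaw_deg, offset):
    """Rotate (x, y, z) points by yaw_deg about the z axis, then translate
    by offset, to express them in the platform reference frame."""
    yaw = math.radians(yaw_deg)
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)
    tx, ty, tz = offset
    return [(x * cos_y - y * sin_y + tx,
             x * sin_y + y * cos_y + ty,
             z + tz)
            for x, y, z in points]


def aggregate(clouds, mounts):
    """Merge per-sensor point clouds into one list in the reference frame.
    clouds: sensor name -> list of points in that sensor's own frame.
    mounts: sensor name -> (yaw_deg, (tx, ty, tz)) relative to the reference."""
    merged = []
    for name in sorted(clouds):
        yaw_deg, offset = mounts[name]
        merged.extend(sensor_to_platform(clouds[name], yaw_deg, offset))
    return merged


# Assumed mounting: the front sensor is the reference, the rear sensor sits
# 4 m behind it facing backward, and the left sensor faces left.
mounts = {
    "front": (0.0, (0.0, 0.0, 0.0)),
    "rear": (180.0, (-4.0, 0.0, 0.0)),
    "left": (90.0, (-2.0, 1.0, 0.0)),
}
clouds = {
    "front": [(3.0, 0.5, 0.0)],
    "rear": [(2.0, 0.0, 0.0)],   # 2 m in front of the rear sensor
    "left": [(3.0, 0.0, 0.0)],   # 3 m in front of the left sensor
}
for point in aggregate(clouds, mounts):
    print(tuple(round(v, 3) for v in point))
```

In this example the rear sensor's detection lands about 6 m behind the front sensor, as expected for a point 2 m beyond a sensor mounted 4 m back. Sensors mounted with pitch or roll would need a full 3D rotation matrix instead of the yaw-only rotation assumed here.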

Second, clustering and tracking need to operate on the combined data. Recall that TI’s libraries can perform clustering and tracking of radar returns using the microprocessor within the radar device. While this works well for one device at a time, when we want to consider the returns from multiple sensors together, it’s better to run these algorithms on a common processor that sees all of the data. At D3, we have implemented just such an aggregating clustering and tracking mechanism by porting the library to other platforms, such as a PC and embedded automotive and robotics processors. We demonstrated this capability at Sensors Converge in Santa Clara in June 2023, and at CES in Las Vegas in January 2024.

D3 has worked with many product development customers to customize, optimize, and validate radar processing chains and algorithms. We’d be excited to work with you to help meet your radar and processing objectives. Contact us at [email protected] for more information.

Tom Mayo

Lead Product Line Manager, D3