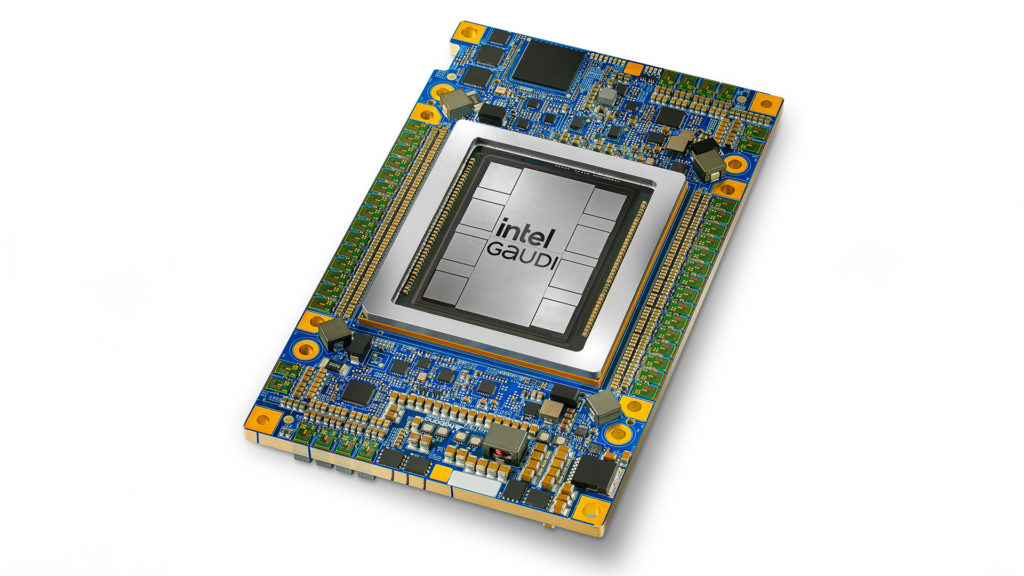

Intel Gaudi 3 AI accelerator brings global enterprises choice for generative AI, building on the performance and scalability of its Gaudi 2 predecessor.

What’s New: At Intel Vision, Intel introduces the Intel® Gaudi® 3 AI accelerator, which delivers 4x AI compute for BF16, 1.5x increase in memory bandwidth, and 2x networking bandwidth for massive system scale out compared to its predecessor – a significant leap in performance and productivity for AI training and inference on popular large language models (LLMs) and multimodal models. Building on the proven performance and efficiency of the Intel® Gaudi® 2 AI accelerator – the only MLPerf-benchmarked alternative for LLMs on the market – Intel gives customers a choice with open community-based software and industry-standard Ethernet networking to scale their systems more flexibly.

“In the ever-evolving landscape of the AI market, a significant gap persists in the current offerings. Feedback from our customers and the broader market underscores a desire for increased choice. Enterprises weigh considerations such as availability, scalability, performance, cost, and energy efficiency. Intel Gaudi 3 stands out as the GenAI alternative presenting a compelling combination of price performance, system scalability, and time-to-value advantage.”

Justin Hotard, Intel executive vice president and general manager of the Data Center and AI Group

Why It Matters: Today, enterprises across critical sectors such as finance, manufacturing and healthcare are rapidly seeking to broaden accessibility to AI and transitioning generative AI (GenAI) projects from experimental phases to full-scale implementation. To manage this transition, fuel innovation and realize revenue growth goals, businesses require open, cost-effective and more energy-efficient solutions and products that meet return-on-investment (ROI) and operational efficiency needs.

The Intel Gaudi 3 accelerator will meet these requirements and offer versatility through open community-based software and open industry-standard Ethernet, helping businesses flexibly scale their AI systems and applications.

How Custom Architecture Delivers GenAI Performance and Efficiency: The Intel Gaudi 3 accelerator, architected for efficient large-scale AI compute, is manufactured on a 5 nanometer (nm) process and offers significant advancements over its predecessor. It is designed to allow activation of all engines in parallel — with the Matrix Multiplication Engine (MME), Tensor Processor Cores (TPCs) and Networking Interface Cards (NICs) — enabling the acceleration needed for fast, efficient deep learning computation and scale. Key features include:

- AI-Dedicated Compute Engine: The Intel Gaudi 3 accelerator was purpose-built for high-performance, high-efficiency GenAI compute. Each accelerator features a heterogeneous compute engine composed of 64 AI-custom, programmable TPCs and eight MMEs. Each Intel Gaudi 3 MME can perform 64,000 parallel operations, which makes the MMEs well suited to the complex matrix operations that are fundamental to deep learning algorithms. This design accelerates parallel AI operations and supports multiple data types, including FP8 and BF16.

- Memory Boost for LLM Capacity Requirements: 128 gigabytes (GB) of HBM2e memory capacity, 3.7 terabytes (TB) per second of memory bandwidth and 96 megabytes (MB) of on-board static random access memory (SRAM) provide ample memory for processing large GenAI datasets on fewer Intel Gaudi 3 accelerators. This is particularly useful in serving large language and multimodal models, and results in increased workload performance and data center cost efficiency.

- Efficient System Scaling for Enterprise GenAI: Twenty-four 200 gigabit (Gb) Ethernet ports are integrated into every Intel Gaudi 3 accelerator, providing flexible and open-standard networking. They enable efficient scaling to support large compute clusters and eliminate vendor lock-in from proprietary networking fabrics. The Intel Gaudi 3 accelerator is designed to scale up and scale out efficiently from a single node to thousands to meet the expansive requirements of GenAI models.

- Open Industry Software for Developer Productivity: Intel Gaudi software integrates the PyTorch framework, the most common AI framework for GenAI developers today, and provides optimized Hugging Face community-based models. This allows GenAI developers to work at a high level of abstraction, which improves ease of use and productivity and simplifies porting models across hardware types.

- Gaudi 3 PCIe: New to the product line is the Gaudi 3 peripheral component interconnect express (PCIe) add-in card. Tailored to bring high efficiency with lower power, this new form factor is ideal for workloads such as fine-tuning, inference and retrieval-augmented generation (RAG). It is equipped as a full-height form factor at 600 watts, with a memory capacity of 128GB and a bandwidth of 3.7TB per second.
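The per-accelerator figures in the list above can be combined into a few rough totals. The following is a minimal sketch, not an Intel sizing tool: it assumes decimal units (1 GB = 10^9 bytes), counts model weights only (no KV cache, activations or optimizer state), and treats the Ethernet figure as nominal line rate. The function names are hypothetical and chosen for illustration.

```python
import math

# Figures quoted in the feature list above.
MME_COUNT = 8              # MMEs per accelerator
OPS_PER_MME = 64_000       # parallel operations per MME
ETH_PORTS = 24             # Ethernet ports per accelerator
ETH_GBIT_PER_PORT = 200    # gigabits per second per port
HBM_CAPACITY_GB = 128      # HBM2e capacity per accelerator

# Assumption (standard data-type widths, not stated in the source).
BYTES_PER_PARAM = {"BF16": 2, "FP8": 1}


def total_mme_parallel_ops() -> int:
    """Parallel operations across all MMEs of one accelerator."""
    return MME_COUNT * OPS_PER_MME


def aggregate_ethernet_gbit() -> int:
    """Nominal aggregate Ethernet bandwidth of one accelerator, in Gb/s."""
    return ETH_PORTS * ETH_GBIT_PER_PORT


def weight_footprint_gb(params_billions: float, dtype: str) -> float:
    """Memory for model weights only, in GB (1 GB = 1e9 bytes)."""
    return params_billions * BYTES_PER_PARAM[dtype]


def min_accelerators_for_weights(params_billions: float, dtype: str) -> int:
    """Fewest accelerators whose combined HBM holds the weights alone."""
    return math.ceil(weight_footprint_gb(params_billions, dtype) / HBM_CAPACITY_GB)


if __name__ == "__main__":
    print("MME parallel ops:", total_mme_parallel_ops())
    print("Ethernet Gb/s:", aggregate_ethernet_gbit())
    for size in (7, 70, 180):
        for dtype in ("BF16", "FP8"):
            print(size, dtype, weight_footprint_gb(size, dtype), "GB ->",
                  min_accelerators_for_weights(size, dtype), "accelerator(s)")
```

Under these assumptions, a 70B-parameter model needs 140 GB for BF16 weights, which exceeds the 128 GB of a single accelerator, while the same model in FP8 needs 70 GB and fits on one. Real deployments need additional headroom beyond the weights.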

The Intel Gaudi 3 accelerator will deliver significant performance improvements for training and inference tasks on leading GenAI models. Specifically, compared with the Nvidia H100 (or the Nvidia H200 where noted), the Intel Gaudi 3 accelerator is projected to deliver on average:

- 50% faster time-to-train¹ across Llama2 7B and 13B parameter models and the GPT-3 175B parameter model.

- 50% faster inference throughput² and 40% greater inference power efficiency³ across Llama2 7B and 70B parameter models and the Falcon 180B parameter model, with an even greater inference performance advantage on longer input and output sequences.

- 30% faster inferencing⁴ on Llama2 7B and 70B parameter models and the Falcon 180B parameter model, compared with the Nvidia H200.
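To put the projected percentages in concrete terms, the sketch below converts a rate advantage into the share of baseline time or energy needed for the same amount of work. It assumes that "X% faster" or "X% greater efficiency" means a rate (throughput, or work per unit of energy) that is 1 + X/100 times the baseline. The source does not define the terms this precisely, so this reading, and the helper name `relative_cost`, are assumptions.

```python
def relative_cost(percent_gain: float) -> float:
    """Fraction of baseline time (or energy) needed for the same work,
    assuming the rate is (1 + percent_gain / 100) times the baseline rate."""
    if percent_gain <= -100:
        raise ValueError("percent_gain must be greater than -100")
    return 1.0 / (1.0 + percent_gain / 100.0)


if __name__ == "__main__":
    # Percentages taken from the projections listed above.
    for label, gain in [("50% faster", 50), ("40% greater efficiency", 40), ("30% faster", 30)]:
        print(f"{label}: {relative_cost(gain):.1%} of baseline")
```

Under this reading, a 50% higher rate finishes the same work in about two-thirds of the baseline time, not half of it.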

About Market Adoption and Availability: The Intel Gaudi 3 accelerator will be available to original equipment manufacturers (OEMs) in the second quarter of 2024 in industry-standard configurations of Universal Baseboard and open accelerator module (OAM). Among the notable OEM adopters that will bring Gaudi 3 to market are Dell Technologies, HPE, Lenovo and Supermicro. General availability of Intel Gaudi 3 accelerators is anticipated for the third quarter of 2024, and the Intel Gaudi 3 PCIe add-in card is anticipated to be available in the last quarter of 2024.

The Intel Gaudi 3 accelerator will also power several cost-effective cloud LLM infrastructures for training and inference, offering price-performance advantages and choices to organizations that now include NAVER.

Developers can get started today with access to Intel Gaudi 2-based instances on the developer cloud to learn, prototype, test, and run applications and workloads.

What’s Next: Intel Gaudi 3 accelerators’ momentum will be foundational for Falcon Shores, Intel’s next-generation graphics processing unit (GPU) for AI and high-performance computing (HPC). Falcon Shores will integrate the Intel Gaudi and Intel® Xe intellectual property (IP) with a single GPU programming interface built on the Intel® oneAPI specification.

More Context: Intel Unleashes Enterprise AI with Gaudi 3, AI Open Systems Strategy and New Customer Wins (News) | Intel Gaudi 3 AI Accelerator (Product Page) | Intel Gaudi 3 AI Accelerator (White Paper) | Intel Gaudi 2 Remains Only Benchmarked Alternative to NV H100 for GenAI Performance (News)

The Small Print

Intel does not control or audit third-party data. You should consult other sources to evaluate accuracy.

1 NV H100 comparison based on: https://developer.nvidia.com/deep-learning-performance-training-inference/training, Mar 28th 2024 → “Large Language Model” tab Vs Intel® Gaudi® 3 projections for LLAMA2-7B, LLAMA2-13B & GPT3-175B as of 3/28/2024. Results may vary.

2 NV H100 comparison based on https://nvidia.github.io/TensorRT-LLM/performance.html#h100-gpus-fp8 , Mar 28th, 2024. Reported numbers are per GPU. Vs Intel® Gaudi® 3 projections for LLAMA2-7B, LLAMA2-70B & Falcon 180B. Results may vary.

3 NV comparison based on https://nvidia.github.io/TensorRT-LLM/performance.html#h100-gpus-fp8 , Mar 28th, 2024. Reported numbers are per GPU. Vs Intel® Gaudi® 3 projections for LLAMA2-7B, LLAMA2-70B & Falcon 180B. Power efficiency for both Nvidia and Gaudi 3 based on internal estimates. Results may vary.

4 NV H200 comparison based on https://nvidia.github.io/TensorRT-LLM/performance.html#h100-gpus-fp8 , Mar 28th, 2024. Reported numbers are per GPU. Vs Intel® Gaudi® 3 projections for LLAMA2-7B, LLAMA2-70B & Falcon 180B. Results may vary.

About Intel

Intel (Nasdaq: INTC) is an industry leader, creating world-changing technology that enables global progress and enriches lives. Inspired by Moore’s Law, we continuously work to advance the design and manufacturing of semiconductors to help address our customers’ greatest challenges. By embedding intelligence in the cloud, network, edge and every kind of computing device, we unleash the potential of data to transform business and society for the better. To learn more about Intel’s innovations, go to newsroom.intel.com and intel.com.