
The tech industry’s self-congratulatory analogy of AI with electricity “is not absurd, but it’s too flawed to be useful,” says historian Peter Norton.


What’s at stake: In Silicon Valley, power prevails. In the short run, if your business and technology have the power to make money, move the market, and change the rules, you won’t even be criticized when you’re wrong. But no business is future-proof, especially if its power depends on a resource — namely electricity — facing increased scarcity.

The Bay Area just finished a week-long AI party, talking up advancements like Nvidia’s new supersized AI chips and the simulation and software tools, developed by companies such as Ansys and Synopsys, that enabled them.

The tech community is blithely comparing the anticipated spread of AI to that of electricity, which everyone now takes for granted.

Executives like to style themselves as creators of a brand-new industry, similar to the electrification wave that accelerated the Industrial Revolution.

AI factory

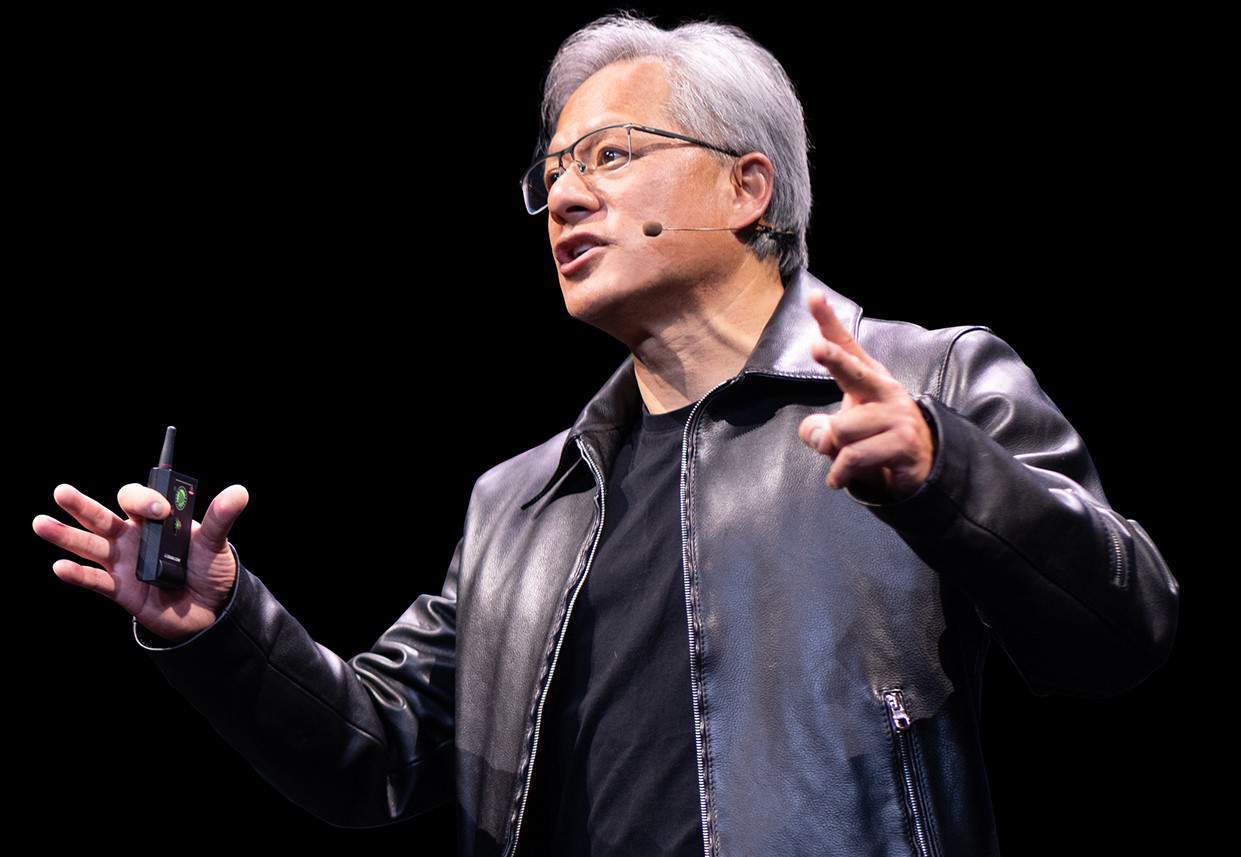

Jensen Huang is the CEO of Nvidia, which hit a $2 trillion valuation last month. That puts him in the category of executives who can do no wrong.

Huang described a datacenter running AI models as an “AI factory.”

In his keynote speech at his company’s conference, GTC2024, he explained that the product of AC generators in factories during the Industrial Revolution was an “invisible valuable thing called electricity.” Today, coming out of AI factories are “tokens producing floating point numbers at very large scale … generating this new incredibly valuable thing called artificial intelligence.”

Jensen Huang, Nvidia CEO, at GTC2024 (image: Nvidia)

Nvidia isn’t alone in the AI-electricity comparison. Two days after Huang’s keynote, Microsoft Corp. vice president Saurabh Dighe, responsible for Azure Strategic Planning and Architecture, stood onstage at the Synopsys User Group (SNUG) event to sing a similar tune.

Dighe told the audience, mostly software engineers, that AI will replicate the way “the electrification of our society brought disruptive innovations.”

He explained that the discovery of electricity by Benjamin Franklin in the 18th century led to Edison’s invention of the light bulb in the 19th century. In today’s world, AI has already attained that “light bulb moment,” he declared, when ChatGPT hit 100 million monthly active users in January 2023.

The tech community’s message is loud and clear: Here comes another technological revolution that will trigger unimaginable economic and social change.

‘AI-Electricity’ analogy

Comparing AI to electricity is the latest symptom among the egomaniacs of Silicon Valley. It’s understandable. Is there a technologist anywhere who could resist the notion that he would go down in history shoulder-to-shoulder with Franklin or Edison?

But this AI-electricity analogy didn’t sit well with me. It’s a faulty equivalence that conveniently sidesteps any discussion of datacenters’ explosive thirst for electricity.

There is no AI without data. Nor will there be any AI optimization without infrastructure capable of running diversified AI models. This demand for more AI models balloons the capital expenditure needed to support AI, and raises the question of how AI can be equitably delivered to the public.

My doubts about the AI-electricity myth led me to historian Peter Norton, who is uniquely positioned to analyze the intersection of history and technology. Professor Norton teaches tech history in the Department of Engineering and Society at the University of Virginia.

Norton responded to my query by saying, “The [AI-electricity] analogy is not absurd, but it’s too flawed to be useful.”

It’s not absurd because AI, like electricity, can distribute capacities formerly distant and concentrated, and can support innumerable specific applications. Also, he noted, some early responses to electricity were “as utopian and as panicky as those about AI.”

“But that’s about as far as this analogy works, and the differences are profound,” stressed Norton.

Following is my back and forth with Norton.

Is AI as essential as electricity?

Unlike AI, said Norton, electricity offered elemental basics: first light, then power. Electricity made lighting the dark affordable. It made power transmissible, distributing it far from power sources. Light and power are not so much tools as enablers of tools.

Unlike electricity, AI is not elemental. It actually depends on electric power — lots of it. This makes it secondary to power.

What is the difference between “tools” and “enablers of tools”?

Norton cited an analogy to “cooking.”

The “electricity” elements of cooking (so to speak) are heat and water. Every kitchen needs both. In this analogy, AI is not heat or water, not even in their “next-generation.” AI is more like a well-equipped kitchen that offers more than the basics: precision control, greater convenience, wider versatility, and more choice.

The initial discovery of electrification didn’t readily result in the electricity we use every day. There were struggles and fights. What enabled it?

Norton cited three basic problems. The first, storage of a static charge, was solved by the Leyden jar. Then the voltaic pile — a battery — solved the problem of drawing a continuous electric current from a stored charge. Finally, there was the problem of generating significant electric power, solved by generators. By the mid-nineteenth century, these needs had all been met.

Norton added, “But then, more practically, there was the problem of distributing power economically.”

Proponents of direct current (DC) and alternating current (AC) engaged in a bitter rivalry much like those in the early history of AI. The AC/DC duel resembles the struggle between proponents of symbolic AI and those who favored neural networks. In both cases, said Norton, the winners, AC and neural networks, rose from the disgrace of their opposition.

Are there other commonalities in the early histories of electric power and AI?

An example, said Norton, is that both have inspired utopian fascination and panicky dread. Like AI, electricity was imagined as delivering humanity from toil, disease and even mortality. Mary Shelley was never clear about what gave Frankenstein’s monster life, but she was inspired by “galvanism,” the notion that electricity is the vital element. She was warning that the people who can master the element have a tempting, godlike, but dangerous power that humans were not meant to have. The warnings about AI are similar.

Nvidia’s GTC keynote

Indeed, Huang started his keynote with a dramatic visual introduction that was studded with utopian claims. His keynote video went on to wax poetic about AI “illuminating galaxies to witness the birth of stars,” “sharpening our understanding of extreme weather events,” guiding “the blind through a crowded world” and “giving voice to those who cannot speak.”

Huang envisioned AI “harnessing gravity to store renewable power, and paving the way towards unlimited clean energy for us all,” “teaching robots to assist to watch out for danger,” “helping save lives as a healer providing a new generation of cures and new levels of patient care.” He called it a “navigator generating virtual scenarios to let us safely explore the real world,” “understand every decision,” “helping write the script, and breathing life into the words …”

Such descriptions of AI tempt the public, and some media, to see AI as able to magically generate new content in text, music or visual art.

“Companies promote this illusion,” Norton said. And that is misleading.

In fact, AI uses others’ work as raw material. This is problematic when the creative humans whose work is used are uncompensated. All over the world, millions of “taskers” label images so that AI can read them. These workers are treated as isolated contractors and have no means of negotiating for fair compensation.

Norton sees a similar problem with Huang’s concept of “AI factories.”

When Huang calls the datacenters of the future “AI factories,” he seems to dismiss the fact that AI depends upon vast numbers of meagerly paid laborers, noted Norton. The real AI factories are the scattered and isolated taskers engaged in digital piecework. Their labeled images, said Norton, are not “tokens of artificial intelligence” but quanta of human intelligence, supplied by poorly paid humans generating enormous wealth for companies and their investors.

What about energy consumption issues?

After the AI celebration last week, the hot-button issue for me boiled down to the sheer absence of discussion of the unstoppable increase in electricity use by AI datacenters. I heard no one in the tech community prepared to step up and propose solutions.

There are apologists aplenty for corporations whose technology devours power. Their standard claim is that “some companies involved in AI could help with these problems.” AI, they say hopefully, will be a powerful tool advancing sustainability. They cite Nvidia’s Earth-2, a digital twin of our earth. Nvidia calls it “an open platform to accelerate climate and weather predictions.”

Also, I’ve seen stories like “What Nvidia’s new Blackwell chip says about AI’s carbon footprint problem.”

The argument goes that the faster processing speed of Nvidia’s new Blackwell chip reduces power consumption during training. “Training the latest ultra-large AI models using 2,000 Blackwell GPUs would use 4 megawatts of power over 90 days of training, compared to having to use 8,000 older GPUs for the same period of time, which would consume 15 megawatts of power,” said Huang.
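To put the quoted figures in energy terms, here is a minimal Python sketch. It uses only the numbers in the quote and assumes, for illustration, that each cluster draws its stated power continuously for the full 90 days; the function names are hypothetical.

```python
HOURS_PER_DAY = 24


def training_energy_mwh(power_mw, days):
    """Energy in megawatt-hours, assuming a constant power draw (an assumption)."""
    return power_mw * days * HOURS_PER_DAY


def per_gpu_kw(power_mw, gpu_count):
    """Average power per GPU in kilowatts, from total cluster power."""
    return power_mw * 1000 / gpu_count


# Figures from the quote: 2,000 Blackwell GPUs at 4 MW vs. 8,000 older GPUs at 15 MW.
blackwell_mwh = training_energy_mwh(4, 90)
older_mwh = training_energy_mwh(15, 90)
savings = 1 - blackwell_mwh / older_mwh

blackwell_kw = per_gpu_kw(4, 2000)
older_kw = per_gpu_kw(15, 8000)

print(f"Blackwell run: {blackwell_mwh:,.0f} MWh")
print(f"Older GPUs:    {older_mwh:,.0f} MWh")
print(f"Energy saved per run: {savings:.0%}")
print(f"Power per GPU: {blackwell_kw} kW (Blackwell) vs. {older_kw} kW (older)")
```

Under that assumption, a single training run falls from 32,400 MWh to 8,640 MWh. The same figures also imply that each Blackwell GPU draws slightly more power than its predecessor (2 kW versus about 1.9 kW), so the saving comes from needing fewer chips for the same job, not from chips that individually sip less power.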

However, improving the efficiency of a particular chip hardly addresses the crisis in datacenter power drain.

Microsoft’s Dighe was alone in citing the International Energy Agency’s latest report, “Electricity 2024 – Analysis and Forecast to 2026.”

The IEA report predicted that “after globally consuming an estimated 460 terawatt-hours (TWh) in 2022, datacenters’ total electricity consumption could reach more than 1,000 TWh in 2026.” IEA believes this demand roughly equals the electricity consumption of Japan. The Microsoft executive described this trend as “scary,” but suggested no remedy beyond vaguely proposing “a systems approach.”
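For a sense of the pace those two IEA figures imply, the following Python sketch computes the equivalent compound annual growth rate. Treating the rise as smooth year-over-year growth is an assumption made here for illustration, not something the report states, and since the forecast says "more than 1,000 TWh," the result is a lower bound.

```python
def compound_annual_growth(start_value, end_value, years):
    """Constant yearly growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1 / years) - 1


# IEA figures quoted above: 460 TWh in 2022, more than 1,000 TWh in 2026.
start_twh, end_twh = 460, 1000
years = 2026 - 2022

growth_multiple = end_twh / start_twh
annual_rate = compound_annual_growth(start_twh, end_twh, years)

print(f"Growth over {years} years: {growth_multiple:.2f}x")
print(f"Implied annual growth: at least {annual_rate:.1%}")
```

That works out to datacenter electricity use more than doubling in four years, or growth of roughly 21 percent a year under the smooth-growth assumption.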

I am aware that no single company can fix it. I also understand that we can’t just wish for private corporations to wave a magic wand.

So I end by citing Noah Smith, an economist, who was quoted in the latest AI-related story in the New York Times.

Asked whether human work might be replaced by AI, Smith said this future hinges on whether AI is allowed to consume all the energy that’s available. If it isn’t, “then humans will have some energy to consume.” He added: “From this line of reasoning we can see that if we want government to protect human jobs, we don’t need a thicket of job-specific regulations. All we need is ONE regulation — a limit on the fraction of energy that can go to data centers.”

Bottom line

If society must find ways to use less energy, the only effective government measure would be restricting energy use by datacenters, a truly “scary” scenario for a fiercely libertarian tech community.

Junko Yoshida

Editor in Chief, The Ojo-Yoshida Report

This article was published by The Ojo-Yoshida Report.