This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA.

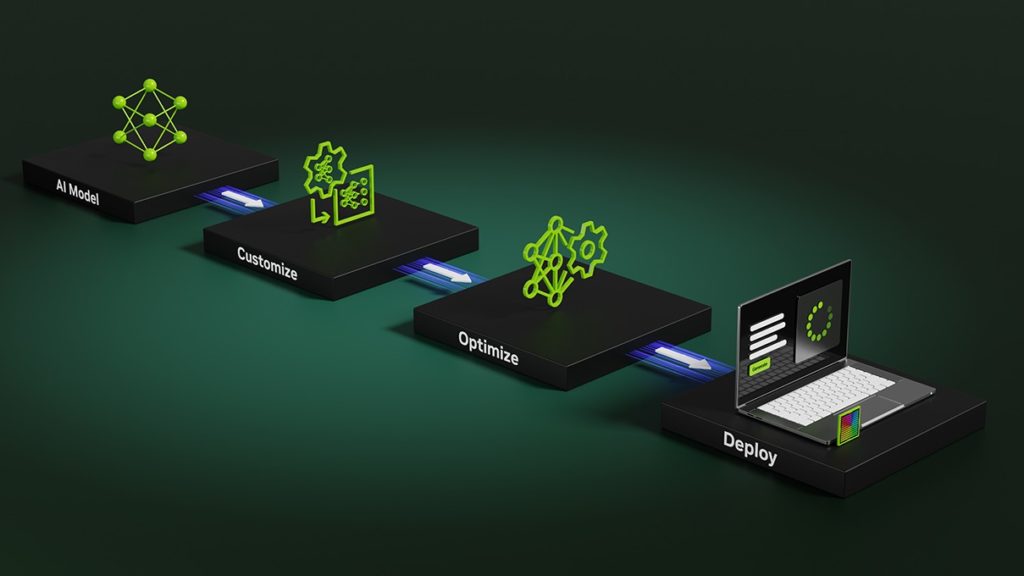

NVIDIA today launched the NVIDIA RTX AI Toolkit, a collection of tools and SDKs for Windows application developers to customize, optimize, and deploy AI models for Windows applications. It’s free to use, doesn’t require prior experience with AI frameworks and development tools, and delivers the best AI performance for both local and cloud deployments.

The wide availability of generative pretrained transformer (GPT) models creates big opportunities for Windows developers to integrate AI capabilities into apps. Yet shipping these features can still involve significant challenges. First, you need to customize the models to meet the specific needs of the application. Second, you need to optimize the models to fit on a wide range of hardware while still delivering the best performance. And third, you need an easy deployment path that works for both cloud and local AI.

The NVIDIA RTX AI Toolkit provides an end-to-end workflow for Windows app developers. You can leverage pretrained models from Hugging Face, customize them with popular fine-tuning techniques to meet application-specific requirements, and quantize them to fit on consumer PCs. You can then optimize them for the best performance across the full range of NVIDIA GeForce RTX GPUs, as well as NVIDIA GPUs in the cloud.

Watch an end-to-end walkthrough of the NVIDIA RTX AI Toolkit, from model development to application deployment

When it comes time to deploy, the RTX AI Toolkit enables several paths to match the needs of your applications, whether you choose to bundle optimized models with the application, download them at app install/update time, or stand up a cloud microservice. The toolkit also includes the NVIDIA AI Inference Manager (AIM) SDK that enables an app to run AI locally or in the cloud, depending on the user’s system configuration or even the current workload.

Powerful, customized AI for every application

Today’s generative models are trained on huge datasets. This can require several weeks, using hundreds of the world’s most powerful GPUs. While these computing resources are out of reach for most developers, open-source pretrained models give you access to powerful AI capabilities.

Pretrained foundation models, available as open source, are typically trained on generalized data sets. This enables them to deliver decent results across a wide range of tasks. However, applications often need specialized behavior. A game character needs to speak in a particular way, or a scientific writing assistant needs to understand industry-specific terms, for example.

Fine-tuning is a technique that further trains a pretrained model on additional data matching the needs of the application. For example, dialogue samples for a game character.

The RTX AI Toolkit includes tools to support fine-tuning, such as NVIDIA AI Workbench. Released earlier this year, AI Workbench is a tool for organizing and running model training, tuning, and optimization projects both on local RTX GPUs and in the cloud. RTX AI Toolkit also includes AI Workbench projects for fine-tuning using QLoRA, one of today’s most popular and effective techniques.

For parameter-efficient fine-tuning, the toolkit leverages QLoRA using the Hugging Face Transformer library, enabling customization while using less memory and can run efficiently on client devices with RTX GPUs.

Once fine-tuning is complete, the next step is optimization.

Optimized for PCs and the cloud

Optimizing AI models involves two main challenges. First, PCs have limited memory and compute resources for running AI models. Second, between PC and cloud, there’s a wide range of target hardware with different capabilities.

RTX AI Toolkit includes the following tools for optimizing AI models and preparing them for deployment.

NVIDIA TensorRT Model Optimizer: Even smaller LLMs can require 14 GB or more RAM. NVIDIA TensorRT Model Optimizer for Windows, with general availability starting today, provides tools to quantize models to be up to 3x smaller without a significant reduction in accuracy. It includes methods such as INT4 AWQ post-training quantization to facilitate running state-of-the-art LLMs on RTX GPUs. With this, not only can smaller models more easily fit in the GPU memory available on typical systems, it also improves performance by reducing memory bandwidth bottlenecks.

NVIDIA TensorRT Cloud: To get the best performance on every system, a model can be optimized specially for each GPU. NVIDIA TensorRT Cloud, available in developer preview, is a cloud service for building optimized model engines for RTX GPUs in PCs, as well as GPUs in the cloud. It also provides prebuilt, weight-stripped engines for popular generative AI models, which can be merged with fine-tuned weights to generate optimized engines. Engines that are built with TensorRT Cloud, and run with the TensorRT runtime, can achieve up to 4x faster performance compared to the pretrained model.

Once the fine-tuned models have been optimized, the next step is deployment.

Develop once, deploy anywhere

By giving your applications the ability to perform inference locally or in the cloud, you can deliver the best experience to the most users. Models deployed on-device can achieve lower latency and don’t require calls to the cloud at runtime, but have certain hardware requirements. Models deployed to the cloud can support an application running on any hardware, but have an ongoing operating cost for the service provider. Once the model is developed, you can deploy it anywhere with the RTX AI Toolkit, and it’s tools for both on-device and cloud paths with:

NVIDIA AI Inference Manager (AIM): AIM, available as early access, simplifies the complexity of AI integration for PC developers, and orchestrates AI inference seamlessly across PC and cloud. NVIDIA AIM pre-configures the PC environment with the necessary AI models, engines, and dependencies, and supports all major inference backends (TensorRT, ONNX Runtime, GGUF, Pytorch) across different accelerators including GPU, NPU and CPU. It also performs runtime compatibility checks to determine if the PC can run the model locally, or switch over to the cloud based on developer policies.

With NVIDIA AIM, developers can leverage NVIDIA NIM to deploy in the cloud, and tools like TensorRT for local on-device deployment.

NVIDIA NIM: NVIDIA NIM is a set of easy-to-use microservices designed to accelerate the deployment of generative AI models across the cloud, data center, and workstations. NIM is available as part of the NVIDIA AI Enterprise suite of software. RTX AI Toolkit provides the tools to package an optimized model with its dependencies, upload to a staging server, and then launch a NIM. This will pull in the optimized model and create an endpoint for applications to call.

Models can also be deployed on-device using the NVIDIA AI Inference Manager (AIM) plugin. This helps to manage the details of local and cloud inference, reducing the integration load for the developer.

NVIDIA TensorRT: NVIDIA TensorRT 10.0 and TensorRT-LLM inference backends offer best-in-class performance for NVIDIA GPUs with tensor cores. The newly released TensorRT 10.0 simplifies deployment of AI models into Windows applications. Weight-stripped engines enable compression of more than 99% of the compiled engine size, such that it can be refitted with model weights directly on end-user devices. Moreover, TensorRT offers software and hardware forward compatibility for AI models to work with newer runtimes or hardware. TensorRT-LLM includes dedicated optimizations for accelerating generative AI LLMs and SLMs on RTX GPUs, further accelerating LLM inferencing.

These tools enable developers to prepare models that are ready at application runtime.

RTX AI acceleration ecosystem

Top creative ISVs, including Adobe, Blackmagic Design, and Topaz Labs, are integrating NVIDIA RTX AI Toolkit into their applications to deliver AI-accelerated apps that run on RTX PCs, enhancing the user experience for millions of creators.

To build accelerated RAG-based and agent-based workflows on RTX PCs, you can now access the capabilities and components of the RTX AI Toolkit (such as TensorRT-LLM) through developer frameworks like LangChain and LlamaIndex. In addition, popular ecosystem tools (such as Automatic1111, Comfy.UI, Jan.AI, OobaBooga, and Sanctum.AI) are now accelerated with the RTX AI Toolkit. Through these integrations, you can easily build optimized AI-accelerated apps, deploy them to on-device and cloud GPUs, and enable hybrid capabilities within the app to run inference across local and cloud environments.

Bringing powerful AI to Windows applications

The NVIDIA RTX AI Toolkit provides an end-to-end workflow for Windows application developers to leverage pretrained models, customize and optimize them, and deploy them to run locally or in the cloud. Fast, powerful, hybrid AI enables AI-powered applications to scale quickly, while delivering the best performance on each system. The RTX AI Toolkit enables you to bring more AI-powered capabilities to more users so they can enjoy the benefits of AI across all of their activities, from gaming to productivity and content creation.

NVIDIA RTX AI Toolkit will be released soon for developer access.

Related resources

- DLI course: Building Conversational AI Applications

- GTC session: Accelerate Your Developer Journey with Dell AI-Ready Workstations and NVIDIA AI Workbench

- GTC session: Create purpose-built AI using vision and language With multi-modal Foundation Models

- GTC session: Accelerating Autonomous Vehicle Development With High-Performance AI Computing

- SDK: TAO Toolkit

- SDK: NVIDIA Tokkio

Jesse Clayton

Product Manager, Mobile Embedded, NVIDIA

Kedar Potdar

Senior Systems Software Engineer, NVIDIA

Annamalai Chockalingam

Product marketing manager, NeMo Megatron and NeMo NLP, NVIDIA