This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm.

The Low-Power Computer Vision Challenge (LPCVC) is an annual competition organized by the Institute of Electrical and Electronics Engineers (IEEE) to improve the energy efficiency of computer vision technologies for systems with constrained resources. Established in 2015 and led by Professor Yung-Hsiang Lu at Purdue University, LPCVC has attracted teams from around the world who submit innovative solutions to various vision problems.

This challenge has had a significant impact on both academic research and industry practice, as evidenced by the many renowned research groups and leading technology companies that have taken part as participants or sponsors. Their involvement underscores the widespread recognition of LPCVC’s role in advancing energy-efficient computer vision solutions.

Introduction of LPCVC 2025

Qualcomm Technologies, known for its advancements in power-efficient and state-of-the-art edge AI technologies, powered the 2025 LPCVC to emphasize the importance of balancing accuracy and efficiency in Computer Vision (CV) solutions for edge devices with power constraints.

Qualcomm AI Hub is an online platform with a diverse range of advanced edge devices from Qualcomm to test your models on. The platform provides detailed performance options for model compilation, profiling, quantization and inference, making it the primary platform for model iteration and evaluation.

The collaboration between Qualcomm’s Computer Vision team and students from Purdue University and Lehigh University combines industry expertise with academic innovation.

Qualcomm’s Computer Vision team defined the tasks for each track, leveraging their extensive experience in cutting-edge technology. The students contributed by collecting testing data and managing the evaluation process, bringing fresh perspectives and rigorous academic methodologies to the challenge.

To cover different aspects of the research field, the 2025 LPCVC offered 3 tracks:

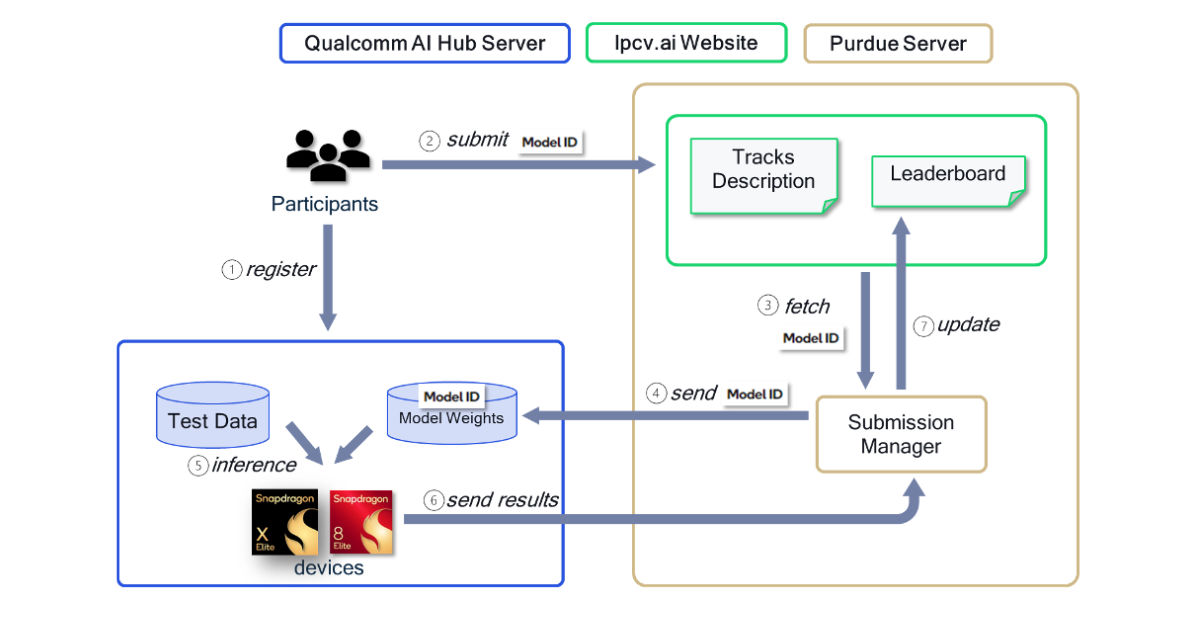

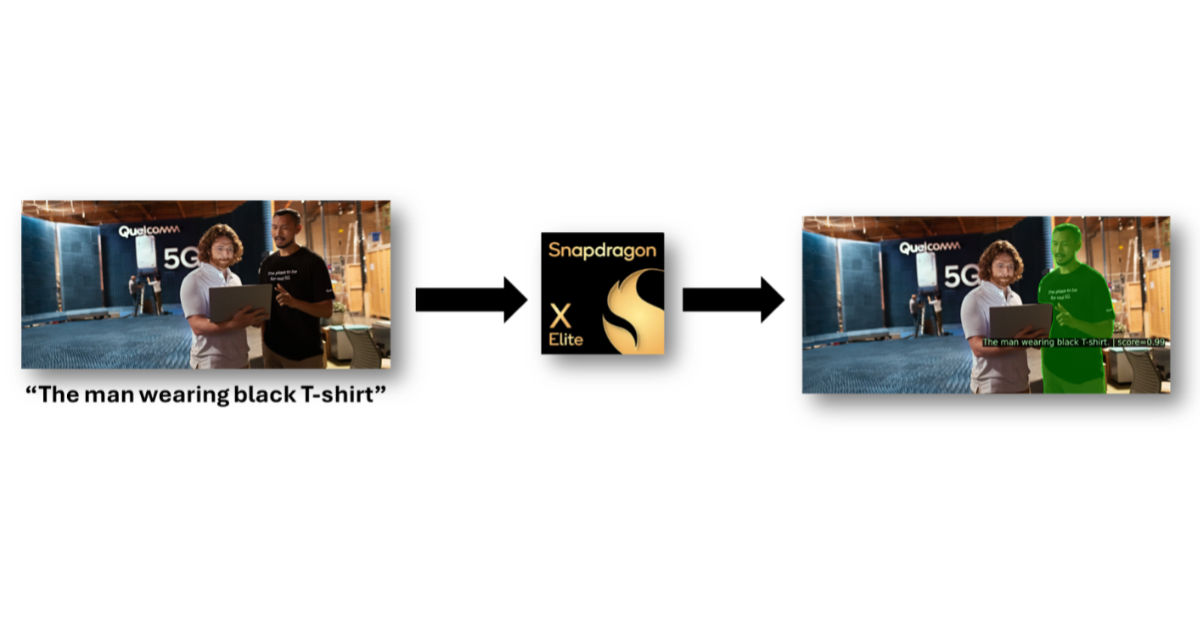

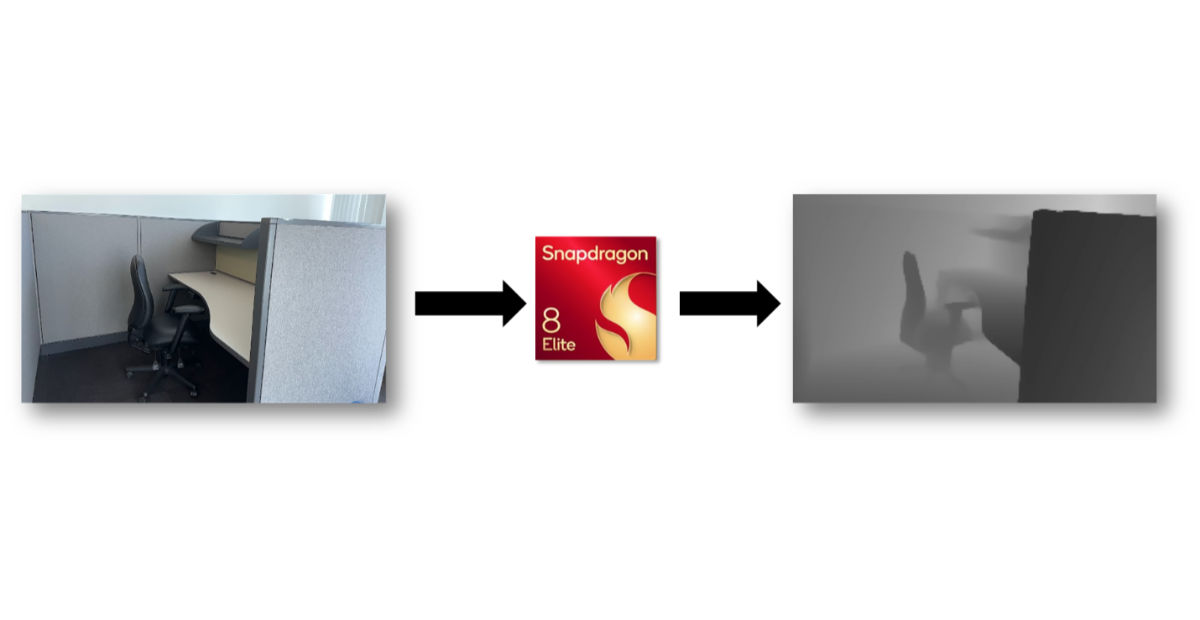

For each track, LPCVC provided baseline models for participants to experiment with. Each team was expected to develop its solution on track-specific hardware (Snapdragon 8 Elite for Tracks 1 and 3, Snapdragon X Elite for Track 2) through the Qualcomm AI Hub platform.

Once development was complete, each team submitted its solution as a compiled job on Qualcomm AI Hub and shared it with the LPCVC account, which acted as the submission manager. The submission manager then extracted the model ID from the compiled job and evaluated the model on the internal testing dataset.

Finally, the evaluation scores were posted to the leaderboard on the LPCVC website.

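In short, the complete workflow is: develop the solution on Qualcomm AI Hub, submit it as a compiled job, share the job with the LPCVC account, have it evaluated on the internal testing dataset, and see the score appear on the leaderboard.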
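The compile-and-share step of this workflow can be sketched in Python. This is only an illustration, not the official submission script: it assumes the `qai_hub` Python client and its `submit_compile_job`, `Device`, and `modify_sharing` calls. The function name `submit_for_evaluation`, the model path, the device name, the input shape, and the e-mail address are all hypothetical placeholders. The `hub` module is passed in as an argument so the function can be exercised without network access.

```python
from typing import Any, Dict, Tuple


def submit_for_evaluation(
    hub: Any,
    model_path: str,
    device_name: str,
    input_specs: Dict[str, Tuple[int, ...]],
    organizer_email: str,
) -> str:
    """Compile a model for one target device and share the job.

    `hub` is expected to be the imported `qai_hub` module (an assumption
    of this sketch); every other argument is supplied by the caller.
    Returns the ID of the submitted compile job.
    """
    # Select the target hardware by its name in the device catalog.
    device = hub.Device(device_name)

    # Submit the compile job for the chosen device.
    compile_job = hub.submit_compile_job(
        model=model_path,
        device=device,
        input_specs=input_specs,
    )

    # Share the job so the organizers' account can access it.
    compile_job.modify_sharing(add_emails=[organizer_email])
    return compile_job.job_id


# Hypothetical usage (requires the qai_hub client and an API token):
#
#   import qai_hub as hub
#   job_id = submit_for_evaluation(
#       hub,
#       "model.onnx",                     # placeholder model file
#       "Snapdragon 8 Elite QRD",         # assumed device name
#       {"image": (1, 3, 224, 224)},      # placeholder input shape
#       "organizers@example.com",         # placeholder address
#   )
```

The real device names, input specifications, and sharing address come from the competition instructions for each track, so the values in the usage comment should be treated as stand-ins.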

In addition to the cash prize and a laptop powered by Snapdragon X Elite, provided on behalf of Qualcomm Technologies, the winners of each track are invited to our workshop, the 8th Efficient Deep Learning for Computer Vision workshop, at the 2025 Conference on Computer Vision and Pattern Recognition (CVPR 2025) in June.

Participation Overview

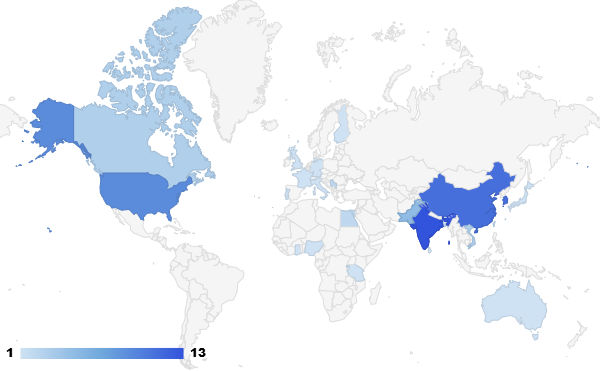

Similar to previous LPCVCs, the 2025 competition garnered substantial global interest from both academic and industrial sectors. A total of 77 teams registered across all three tracks, representing 25 different countries and regions.

Notably, India, China, South Korea and the US had the highest number of participating teams.

Submission Results

The winning solutions for each track of the Low-Power Computer Vision Challenge (LPCVC) significantly outperformed the baseline solutions in accuracy, and the Track 2 winner was also faster than its baseline.

| Track 1 | Accuracy | Time |
| --- | --- | --- |
| Winner | 0.97 | 1,961 μs |
| Baseline | 0.69 | 419 μs |

Track 1 focused on image classification, and the winning solution achieved an impressive accuracy of 0.97, compared to the baseline solution’s accuracy of 0.69. This represents a substantial relative improvement of approximately 40.6% (0.28 / 0.69) in the model’s ability to correctly classify images.

Given the highly saturated nature of the image classification task in computer vision, this level of improvement is particularly noteworthy.

The winning solution completed its tasks in 1,961 microseconds, about 4.7 times slower than the baseline’s 419 microseconds. Despite the increased time, the dramatic boost in accuracy highlights the effectiveness of the winning model.

| Track 2 | mIOU | Time |
| --- | --- | --- |
| Winner | 0.61 | 515.18 ms |
| Baseline | 0.46 | 863.42 ms |

Track 2 involved open-vocabulary segmentation with text prompts. The winning solution achieved a mean Intersection over Union (mIOU) score of 0.61, surpassing the baseline solution’s score of 0.46. This indicates a significant enhancement in the model’s segmentation capabilities.

The winning solution completed its tasks in 515.18 milliseconds, about 40% faster than the baseline’s 863.42 milliseconds, showcasing both improved accuracy and efficiency.

| Track 3 | F-Score | Time |
| --- | --- | --- |
| Winner | 83.80 | 29.79 ms |
| Baseline | 62.37 | 24.09 ms |

Track 3 focused on monocular relative depth estimation and the winning solution achieved an F-Score of 83.80, compared to the baseline solution’s score of 62.37. This demonstrates a notable improvement in the model’s ability to estimate depth accurately.

The winning solution completed its tasks in 29.79 milliseconds, roughly 24% slower than the baseline’s 24.09 milliseconds. Despite the increase in time, the significant boost in the F-Score underscores the superior performance of the winning model.
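The comparisons in the three result tables come down to two ratios: the relative gain in the quality metric and the ratio of winner to baseline latency. The short Python sketch below recomputes both from the reported numbers. The helper names `relative_gain` and `latency_ratio` are illustrative only, and Track 1 times are converted from microseconds to milliseconds so that all tracks share one unit.

```python
# Reported results: (quality metric, latency in milliseconds).
RESULTS = {
    "Track 1 (accuracy)": {"winner": (0.97, 1.961), "baseline": (0.69, 0.419)},
    "Track 2 (mIOU)": {"winner": (0.61, 515.18), "baseline": (0.46, 863.42)},
    "Track 3 (F-Score)": {"winner": (83.80, 29.79), "baseline": (62.37, 24.09)},
}


def relative_gain(winner: float, baseline: float) -> float:
    """Relative improvement of the winner's metric over the baseline's."""
    return (winner - baseline) / baseline


def latency_ratio(winner_ms: float, baseline_ms: float) -> float:
    """Winner latency divided by baseline latency (>1 means slower)."""
    return winner_ms / baseline_ms


for track, rows in RESULTS.items():
    (w_metric, w_ms), (b_metric, b_ms) = rows["winner"], rows["baseline"]
    gain = relative_gain(w_metric, b_metric) * 100
    ratio = latency_ratio(w_ms, b_ms)
    print(f"{track}: metric gain {gain:.1f}%, latency ratio {ratio:.2f}x")
```

A latency ratio above 1 means the winner is slower than the baseline (Tracks 1 and 3), while a ratio below 1 means it is faster (Track 2).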

Qualcomm AI Hub in Action

Once the 2025 LPCVC was announced, there was immediate interest and participation. Qualcomm AI Hub’s Slack community, where the team interfaces with users of the platform and discusses on-device AI, gained popularity among LPCVC participants.

Throughout the competition, the #lpcvc channel gained significant traction, increasing overall engagement and discussion in the Slack community.

Qualcomm AI Hub is a platform aiming to lead the industry in on-device AI optimization. It has attracted over 10,000 users from 1,500 companies in just over a year since launching.

The 2025 LPCVC competition has expanded its use in academia, with more than 90% of participants coming from academic backgrounds.

Qualcomm AI Hub not only benefits industry experts by providing cutting-edge tools for preparing models for on-device deployment; the platform and its resources also foster collaboration and innovation among academic researchers.

Qualcomm AI Hub is open for all to use. Developers can simply sign up to get started and view all the available devices to test their AI model on.

Any developer can bring their own model to Qualcomm AI Hub to be optimized: compile and quantize it, profile it by running inference on real devices to ensure performance requirements are met, and validate its accuracy before downloading the optimized model for deployment.

Acknowledgement

We would like to express our sincere gratitude to Purdue University, Lehigh University, and Loyola University Chicago for collaborating with us in organizing the 2025 LPCVC.

We deeply appreciate the support provided by the Qualcomm University Relations program, Qualcomm AI Hub, and Qualcomm Computer Vision Team.

What’s Next?

Visit the Low-Power Computer Vision Challenge (LPCVC) website for more information.

Watch our broadcast introducing the 2025 LPCVC at the OpenCV webinar on YouTube.

Sign up for the Developer Newsletter to receive updates about future LPCVC challenges.

Xiao Hu

Senior Engineer in Computer Vision R&D, Qualcomm Technologies

Shuai Zhang

Senior Staff Engineer/Manager of Computer Vision R&D, Qualcomm Technologies