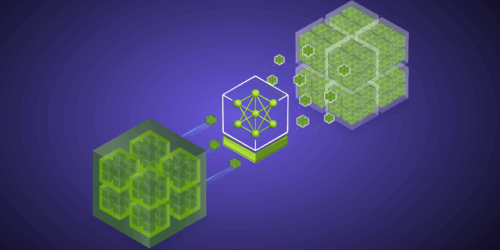

Optimizing LLMs for Performance and Accuracy with Post-training Quantization

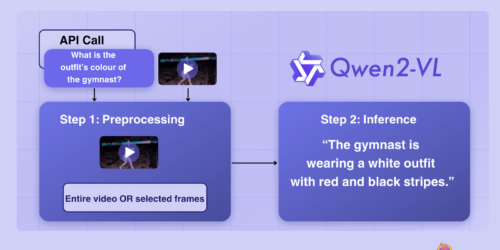

This article was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Quantization is a core tool for developers aiming to improve inference performance with minimal overhead. It delivers significant gains in latency, throughput, and memory efficiency by reducing model precision in a controlled way—without requiring retraining. Today, most models […]
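To make "reducing model precision in a controlled way" concrete, here is a minimal sketch of one common post-training scheme: symmetric, per-tensor INT8 quantization with an absmax scale. It is an illustrative assumption and not necessarily the method the full article describes. The weight values and the helper names `quantize_int8` and `dequantize` are hypothetical. The sketch shows the two properties the paragraph mentions: no retraining is involved, because the scale is computed directly from existing weights, and storage shrinks from 4 bytes to 1 byte per value.

```python
import struct

def quantize_int8(weights):
    """Symmetric per-tensor (absmax) INT8 quantization (illustrative sketch)."""
    absmax = max(abs(w) for w in weights)
    # Map the largest-magnitude weight to +/-127; guard against an all-zero tensor.
    scale = absmax / 127.0 if absmax > 0 else 1.0
    # Round to the nearest integer step and clamp to the symmetric INT8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate FP values from INT8 codes and the shared scale."""
    return [v * scale for v in q]

# Hypothetical FP32 weights, made up for illustration.
weights = [0.12, -0.5, 0.33, 0.9, -0.07, 0.0, 0.41, -0.88]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Rounding error per weight is bounded by half a quantization step (scale / 2).
max_err = max(abs(a - b) for a, b in zip(weights, restored))

# Storage cost: 4 bytes per FP32 value vs. 1 byte per INT8 value.
fp32_bytes = len(struct.pack(f"<{len(weights)}f", *weights))
int8_bytes = len(struct.pack(f"<{len(q)}b", *q))

print("int8 codes:", q)
print(f"scale={scale:.6f} max_err={max_err:.6f} bytes: {fp32_bytes} -> {int8_bytes}")
```

A single per-tensor scale keeps the sketch short. Practical PTQ toolkits typically calibrate scales on sample data and often use finer-grained (per-channel or per-block) scales to limit accuracy loss, which is the performance-versus-accuracy trade-off the article's title refers to.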
