Development Tools for Embedded Vision

ENCOMPASSING MOST OF THE STANDARD ARSENAL USED FOR DEVELOPING REAL-TIME EMBEDDED PROCESSOR SYSTEMS

The software tools (compilers, debuggers, operating systems, libraries, etc.) encompass most of the standard arsenal used for developing real-time embedded processor systems, supplemented by specialized vision libraries and possibly vendor-specific development tools. On the hardware side, requirements depend on the application space, since the designer may need equipment for monitoring and testing real-time video data. Most of these hardware development tools are already used in other types of video system design.

Both general-purpose and vendor-specific tools

Many vendors of vision devices use integrated CPUs based on the same instruction set (ARM, x86, etc.), which allows a common set of software development tools. However, even when the base instruction set is the same, each CPU vendor integrates a different set of peripherals with unique software interface requirements. In addition, most vendors accelerate the CPU with specialized computing devices (GPUs, DSPs, FPGAs, etc.). This extended CPU programming model requires customized versions of standard development tools. Most CPU vendors develop their own optimized software toolchain, while also working with third-party software tool suppliers to make sure their CPU components are broadly supported.
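One common way to cope with differing peripherals is to write the application once against a small vendor-neutral interface and give each vendor port its own backend. The minimal sketch below assumes a hypothetical camera interface; `CameraPort`, `capture`, `VendorACamera`, and `VendorBCamera` are invented names that do not correspond to any vendor's SDK, and the backends return synthetic frames instead of talking to real hardware.

```python
from abc import ABC, abstractmethod
from typing import List


class CameraPort(ABC):
    """Hypothetical vendor-neutral interface to a camera peripheral."""

    @abstractmethod
    def capture(self, width: int, height: int) -> List[List[int]]:
        """Return one frame of 8-bit grayscale pixels as a list of rows."""


class VendorACamera(CameraPort):
    # Hypothetical stand-in for vendor A's driver; a real port would call
    # that vendor's peripheral API here. Returns a uniform mid-gray frame.
    def capture(self, width: int, height: int) -> List[List[int]]:
        return [[128] * width for _ in range(height)]


class VendorBCamera(CameraPort):
    # Hypothetical stand-in for vendor B's driver, with a different
    # underlying interface hidden behind the same method. Returns a ramp.
    def capture(self, width: int, height: int) -> List[List[int]]:
        return [[x % 256 for x in range(width)] for _ in range(height)]


def mean_brightness(camera: CameraPort, width: int = 4, height: int = 2) -> float:
    """Portable application code: unchanged regardless of the vendor backend."""
    frame = camera.capture(width, height)
    return sum(sum(row) for row in frame) / (width * height)


if __name__ == "__main__":
    for cam in (VendorACamera(), VendorBCamera()):
        print(type(cam).__name__, mean_brightness(cam))
```

Only the backend classes change from one vendor to the next; `mean_brightness` stays the same, which is the portability that a common instruction set alone does not provide.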

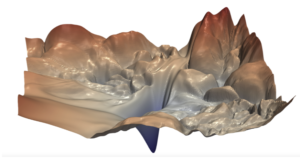

Heterogeneous software development in an integrated development environment

Since vision applications often require a mix of processing architectures, the development tools become more complicated: they must handle multiple instruction sets as well as the added challenge of debugging a system that spans several processors. Most vendors provide a suite of tools that integrates these development tasks into a single interface, simplifying software development and testing.
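A typical debugging technique in a heterogeneous system is to keep a CPU reference implementation of each vision kernel and check the accelerator's output against it. The sketch below assumes a grayscale-conversion kernel with BT.601 luma weights. The "accelerator" is only simulated here by a fixed-point integer version of the same formula, of the kind a DSP or FPGA datapath might use. The `BACKENDS` registry, `check_backend`, and the tolerance of one gray level are hypothetical choices for illustration.

```python
from typing import Callable, Dict, List, Tuple

Pixel = Tuple[int, int, int]  # 8-bit (R, G, B)


def gray_reference(pixels: List[Pixel]) -> List[int]:
    """CPU reference: floating-point BT.601 luma weights, rounded to 8 bits."""
    return [round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in pixels]


def gray_fixed_point(pixels: List[Pixel]) -> List[int]:
    """Simulated accelerator kernel: weights scaled by 256 (77 + 150 + 29 == 256)
    in integer arithmetic. The right shift truncates instead of rounding."""
    return [(77 * r + 150 * g + 29 * b) >> 8 for r, g, b in pixels]


# Hypothetical registry mapping a target name to its kernel implementation.
BACKENDS: Dict[str, Callable[[List[Pixel]], List[int]]] = {
    "cpu": gray_reference,
    "accel": gray_fixed_point,
}


def max_abs_diff(a: List[int], b: List[int]) -> int:
    return max(abs(x - y) for x, y in zip(a, b))


def check_backend(name: str, pixels: List[Pixel], tolerance: int = 1) -> bool:
    """Compare a backend's output against the CPU reference within a tolerance."""
    expected = BACKENDS["cpu"](pixels)
    actual = BACKENDS[name](pixels)
    return len(actual) == len(expected) and max_abs_diff(actual, expected) <= tolerance


if __name__ == "__main__":
    levels = range(0, 256, 51)  # 0, 51, ..., 255
    samples = [(r, g, b) for r in levels for g in levels for b in levels]
    print(check_backend("accel", samples))
```

The two kernels are not bit-exact (for a pure green pixel of 255, the reference gives 150 and the fixed-point version 149), so the comparison uses a tolerance rather than strict equality. Deciding how much divergence between architectures is acceptable is part of the debugging work described above.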
