Software for Embedded Vision

SiMa.ai Announces First Integrated Capability with Synopsys to Accelerate Automotive Physical AI Development

San Jose, California – January 6, 2026 – SiMa.ai today announced the first integrated capability resulting from its strategic collaboration with Synopsys. The joint solution provides a blueprint to accelerate architecture exploration and early virtual software development for AI-ready, next-generation automotive SoCs that support applications such as Advanced Driver Assistance Systems (ADAS) and In-vehicle-Infotainment

NVIDIA DRIVE AV Software Debuts in All-New Mercedes-Benz CLA

This post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Production launch of enhanced level 2 driver-assistance system in the US this year signals start of broader rollout of NVIDIA’s full-stack software across the automotive industry. NVIDIA is enabling a new era of AI-defined driving, bringing its NVIDIA

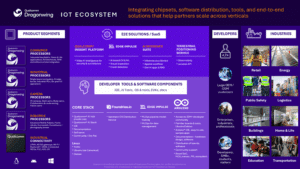

Qualcomm’s IE‑IoT Expansion Is Complete: Edge AI Unleashed for Developers, Enterprises & OEMs

Key Takeaways: Expanded set of processors, software, services, and developer tools, including offerings and technologies from the five acquisitions of Augentix, Arduino, Edge Impulse, Focus.AI, and Foundries.io, positions the Company to help meet edge computing and AI needs for customers across virtually all verticals. Completed acquisition of Augentix, a leader in mass-market image processors, extends Qualcomm Technologies’ ability to provide system-on-chips tailored for intelligent IP cameras and vision systems. New Qualcomm Dragonwing™ Q‑7790 and Q‑8750 processors power security-focused on‑device AI across drones, smart cameras

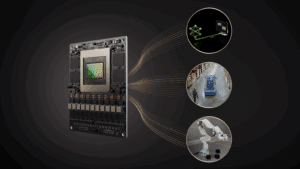

Accelerate AI Inference for Edge and Robotics with NVIDIA Jetson T4000 and NVIDIA JetPack 7.1

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. NVIDIA is introducing the NVIDIA Jetson T4000, bringing high-performance AI and real-time reasoning to a wider range of robotics and edge AI applications. Optimized for tighter power and thermal envelopes, T4000 delivers up to 1200 FP4 TFLOPs of AI compute and 64 GB of memory,

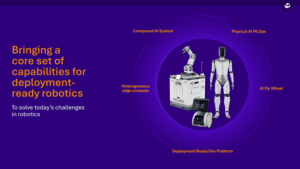

Qualcomm Introduces a Full Suite of Robotics Technologies, Powering Physical AI from Household Robots up to Full-Size Humanoids

Key Takeaways: Utilizing leadership in Physical AI with comprehensive stack systems built on safety-grade high performance SoC platforms, Qualcomm’s general-purpose robotics architecture delivers industry-leading power efficiency and scalability, enabling capabilities from personal service robots to next generation industrial autonomous mobile robots and full-size humanoids that can reason, adapt, and decide. New end-to‑end architecture accelerates automation

TI Accelerates the Shift Toward Autonomous Vehicles with Expanded Automotive Portfolio

New analog and embedded processing technologies from TI enable automakers to deliver smarter, safer and more connected driving experiences across their entire vehicle fleet. Key Takeaways: TI’s newest family of high-performance computing SoCs delivers safe, efficient edge AI performance up to 1200 TOPS with a proprietary NPU and chiplet-ready design. Automakers can simplify radar designs and

Synopsys Showcases Vision For AI-Driven, Software-Defined Automotive Engineering at CES 2026

Synopsys solutions accelerate innovation from systems to silicon, enabling more than 90% of the top 100 automotive suppliers to boost engineering productivity, predict system performance, and deliver safer, more sustainable mobility. Key Takeaways: Synopsys will support the Fédération Internationale de l’Automobile (FIA), the global governing body for motorsport and the federation for mobility organizations

Ambarella Launches a Developer Zone to Broaden its Edge AI Ecosystem

SANTA CLARA, Calif., Jan. 6, 2026 — Ambarella, Inc. (NASDAQ: AMBA), an edge AI semiconductor company, today announced during CES the launch of its Ambarella Developer Zone (DevZone). Located at developer.ambarella.com, the DevZone is designed to help Ambarella’s growing ecosystem of partners learn, build and deploy edge AI applications on a variety of edge systems with greater speed and clarity. It

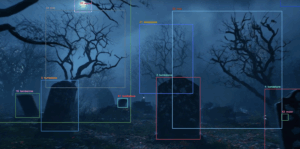

ChatTag: Bringing ChatGPT Vision to Image Annotation in OpenFilter

This blog post was originally published at Plainsight Technologies’ website. It is reprinted here with the permission of Plainsight Technologies. Image annotation has always been one of those tasks that’s both essential and tedious. Whether you’re labeling thousands of product photos for a retail model or identifying components in industrial footage, manual annotation is time-consuming and

Chips&Media and Visionary.ai Unveil the World’s First AI-Based Full Image Signal Processor, Redefining the Future of Image Quality

The collaboration marks a breakthrough in real-time video, bringing software-upgradable imaging to the edge. 5th January, 2026, Seoul, South Korea and Jerusalem, Israel – Chips&Media, Inc. (KOSDAQ:094360), a leading hardware IP provider with more than 20 years of leadership in the multimedia industry, and Visionary.ai, the Israeli startup pioneering AI software for image processing,

The Architecture Shift Powering Next-Gen Industrial AI

This blog post was originally published at Arm’s website. It is reprinted here with the permission of Arm. How Arm is powering the shift to flexible, AI-ready, energy-efficient compute at the “Industrial Edge.” Industrial automation is undergoing a foundational shift. From industrial PCs to edge gateways and smart sensors, compute needs at the edge are changing fast. AI is moving

Nota AI Signs Technology Collaboration Agreement with Samsung Electronics for Exynos AI Optimization “Driving the Popularization of On-Device Generative AI”

Nota AI’s optimization technology integrated into Samsung Electronics’ Exynos AI Studio, enhancing efficiency in on-device AI model development. Seoul, South Korea, Nov. 26, 2025 – Nota AI, a company specializing in AI model compression and optimization, announced today that it has signed a collaboration agreement with Samsung Electronics’ System LSI Business to provide its AI

Introducing Gimlet Labs: AI Infrastructure for the Agentic Era

This blog post was originally published at Gimlet Labs’ website. It is reprinted here with the permission of Gimlet Labs. We’re excited to finally share what we’ve been building at Gimlet Labs. Our mission is to make AI workloads 10X more efficient by expanding the pool of usable compute and improving how it’s orchestrated. Over the

Au-Zone Technologies Expands EdgeFirst Studio Access

Proven MLOps Platform for Spatial Perception at the Edge Now Available. CALGARY, AB – November 19, 2025 – Au-Zone Technologies today expands general access to EdgeFirst Studio™, the enterprise MLOps platform purpose-built for Spatial Perception at the Edge for machines and robotic systems operating in dynamic and uncertain environments. After six months of successful

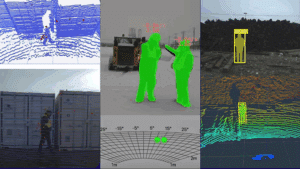

Enabling Autonomous Machines: Advancing 3D Sensor Fusion With Au-Zone

This blog post was originally published at NXP Semiconductors’ website. It is reprinted here with the permission of NXP Semiconductors. Smarter Perception at the Edge. Dusty construction sites. Fog-covered fields. Crowded warehouses. Heavy rain. Uneven terrain. What does it take for an autonomous machine to perceive and navigate challenging real-world environments like these – reliably, in

The Role of Edge Computing in Video Intelligence

This blog post was originally published at Network Optix’ website. It is reprinted here with the permission of Network Optix. In many industries, decisions need to be made within seconds. Delayed responses can mean the difference between preventing an incident and simply reviewing it after the fact. Traditional video systems, which rely on transmitting data offsite

The Need for Continuous Training in Computer Vision Models

This blog post was originally published at Plainsight Technologies’ website. It is reprinted here with the permission of Plainsight Technologies. A computer vision model that works under one lighting condition, store layout, or camera angle can quickly fail as conditions change. In the real world, nothing is constant: seasons change, lighting shifts, new objects appear and

Why Openness Matters for AI at the Edge

This blog post was originally published at Synaptics’ website. It is reprinted here with the permission of Synaptics. Openness across software, standards, and silicon is critical for ensuring interoperability, flexibility, and the growth of AI at the edge. AI continues to migrate towards the edge and is no longer confined to the datacenter. Edge AI brings