Software for Embedded Vision

Bringing Edge AI Performance to PyTorch Developers with ExecuTorch 1.0

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. ExecuTorch 1.0, an open source solution to training and inference on the Edge, becomes available to all developers. Qualcomm Technologies contributed the ExecuTorch repository for developers to access Qualcomm® Hexagon™ NPU directly. This streamlines the developer workflow

NVIDIA Contributes to Open Frameworks for Next-generation Robotics Development

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. At the ROSCon robotics conference, NVIDIA announced contributions to the ROS 2 robotics framework and the Open Source Robotics Alliance’s new Physical AI Special Interest Group, as well as the latest release of NVIDIA Isaac ROS. This

Unleash Real-time LiDAR Intelligence with BrainChip Akida On-chip AI

This blog post was originally published at BrainChip’s website. It is reprinted here with the permission of BrainChip. Accelerating LiDAR Point Cloud with BrainChip’s Akida™ PointNet++ Model. LiDAR (Light Detection and Ranging) technology is the key enabler for advanced Spatial AI—the ability of a machine to understand and interact with the physical world in three

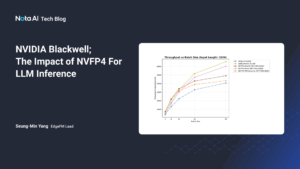

NVIDIA Blackwell: The Impact of NVFP4 For LLM Inference

This blog post was originally published at Nota AI’s website. It is reprinted here with the permission of Nota AI. With the introduction of NVFP4—a new 4-bit floating point data type in NVIDIA’s Blackwell GPU architecture—LLM inference achieves markedly improved efficiency. Blackwell’s NVFP4 format (RTX PRO 6000) delivers up to 2× higher LLM inference efficiency

Don’t Give Your Business Data to AI Companies

This blog post was originally published at Plainsight Technologies’ website. It is reprinted here with the permission of Plainsight Technologies. I have joined Plainsight Technologies as CEO to do something radical: not steal your data. Vision is our most powerful sense. We navigate the world, recognize faces, assess our surroundings, operate vehicles, and make split-second

NanoEdge AI Studio v5, the First AutoML Tool with Synthetic Data Generation

This blog post was originally published at STMicroelectronics’ website. It is reprinted here with the permission of STMicroelectronics. NanoEdge AI Studio v5 is the first AutoML tool for STM32 microcontrollers capable of generating anomaly data out of typical logs, thanks to a new feature we call Synthetic Data Generation. Additionally, the latest version makes it

“Three Big Topics in Autonomous Driving and ADAS,” an Interview with Valeo

Frank Moesle, Software Department Manager at Valeo, talks with Independent Journalist Junko Yoshida for the “Three Big Topics in Autonomous Driving and ADAS” interview at the May 2025 Embedded Vision Summit. In this on-stage interview, Moesle and Yoshida focus on trends and challenges in automotive technology, autonomous driving and ADAS.…

Task-specific AI vs Generic LLMs: Why Precision and Reliability Matter

This blog post was originally published at Rapidflare’s website. It is reprinted here with the permission of Rapidflare. Task-specific AI is redefining what’s possible in mission-critical industries. While generic large language models (LLMs) like ChatGPT excel at broad conversations, they often struggle with accuracy, consistency, and domain-specific context. In sectors where precision and reliability are

“Toward Hardware-agnostic ADAS Implementations for Software-defined Vehicles,” a Presentation from Valeo

Frank Moesle, Software Department Manager at Valeo, presents the “Toward Hardware-agnostic ADAS Implementations for Software-defined Vehicles” tutorial at the May 2025 Embedded Vision Summit. ADAS (advanced driver-assistance systems) software has historically been tightly bound to the underlying system-on-chip (SoC). This software, especially for visual perception, has been extensively optimized for…

Plainsight Appoints Venky Renganathan as Chief Technology Officer

Enterprise Technology Leader Brings Experience Leading Transformational Change as Computer Vision AI Innovator Moves to Next Phase of Platform Innovation SEATTLE — October 14, 2025 — Plainsight, a leader in computer vision AI solutions, today announced the appointment of Venky Renganathan as its new Chief Technology Officer (CTO). Renganathan is an enterprise technology leader with

Plainsight Names Jonathan Simkins as President & CFO

Proven enterprise leader brings expertise in managing privately-held companies during hyper-growth in machine learning, infrastructure software and open source. SEATTLE — September 30, 2025 — Plainsight, the leader in operationalizing computer vision through its pioneering modern computer vision infrastructure, today announced Jonathan Simkins as its President and CFO. In this role, Simkins will help scale

Snapdragon Stories: Four Ways AI Has Improved My Life

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. I’ve used AI chat bots here and there, mostly for relatively simple and very specific tasks. But, I was underutilizing — and underestimating — how AI can quietly yet significantly reshape everyday moments. I don’t want to

“Object Detection Models: Balancing Speed, Accuracy and Efficiency,” a Presentation from Union.ai

Sage Elliott, AI Engineer at Union.ai, presents the “Object Detection Models: Balancing Speed, Accuracy and Efficiency” tutorial at the May 2025 Embedded Vision Summit. Deep learning has transformed many aspects of computer vision, including object detection, enabling accurate and efficient identification of objects in images and videos. However, choosing the…

“Depth Estimation from Monocular Images Using Geometric Foundation Models,” a Presentation from Toyota Research Institute

Rareș Ambruș, Senior Manager for Large Behavior Models at Toyota Research Institute, presents the “Depth Estimation from Monocular Images Using Geometric Foundation Models” tutorial at the May 2025 Embedded Vision Summit. In this presentation, Ambruș looks at recent advances in depth estimation from images. He first focuses on the ability…

Open-source Physics Engine and OpenUSD Advance Robot Learning

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. The Newton physics engine and enhanced NVIDIA Isaac GR00T models enable developers to accelerate robot learning through unified OpenUSD simulation workflows. Editor’s note: This blog is a part of Into the Omniverse, a series focused on how

“Introduction to DNN Training: Fundamentals, Process and Best Practices,” a Presentation from Think Circuits

Kevin Weekly, CEO of Think Circuits, presents the “Introduction to DNN Training: Fundamentals, Process and Best Practices” tutorial at the May 2025 Embedded Vision Summit. Training a model is a crucial step in machine learning, but it can be overwhelming for beginners. In this talk, Weekly provides a comprehensive introduction…

Upcoming Workshop Explores Deploying PyTorch on the Edge

On October 21, 2025, at noon PT, Alliance Member company Qualcomm, along with Amazon, will deliver the free (advance registration required) half-day in-person workshop “PyTorch on the Edge: Amazon SageMaker x Qualcomm® AI Hub” at the InterContinental Hotel in San Francisco, California. From the event page: Seamless cloud-to-edge AI development experience: Train on AWS, deploy

D3 Embedded, HTEC, Texas Instruments and Tobii Pioneer the Integration of Single-camera and Radar Interior Sensor Fusion for In-cabin Sensing

The companies joined forces to develop sensor-fusion-based interior sensing for enhanced vehicle safety, launching at the InCabin Europe conference on October 7-9. Rochester, NY – October 6, 2025 – Tobii, with its automotive interior sensing branch Tobii Autosense, together with D3 Embedded and HTEC, today announced the development of an interior sensing solution