Videos on Edge AI and Visual Intelligence

We hope that the compelling AI and visual intelligence case studies that follow will both entertain and inspire you, and that you’ll regularly revisit this page as new material is added. For more, monitor the News page, where you’ll frequently find video content embedded within the daily writeups.

Alliance Website Videos

SqueezeBits Demonstration of On-device LLM Inference, Running a 2.4B Parameter Model on the iPhone 14 Pro

Taesu Kim, CTO of SqueezeBits, demonstrates the company’s latest edge AI and vision technologies and products at the 2025 Embedded Vision Summit. Specifically, Kim demonstrates a 2.4-billion-parameter large language model (LLM) running entirely on an iPhone 14 Pro without server connectivity. The device operates in airplane mode, highlighting on-device inference using a hybrid approach that

Sony Semiconductor Demonstration of AI Vision Devices and Tools for Industrial Use Cases

Zachary Li, Product and Business Development Manager at Sony America, demonstrates the company’s latest edge AI and vision technologies and products at the 2025 Embedded Vision Summit. Specifically, Li demonstrates his company’s AITRIOS products and ecosystem. Powered by the IMX500 intelligent vision sensor, Sony AITRIOS collaborates with Raspberry Pi for development kits and with leading

Sony Semiconductor Demonstration of Its Open-source Edge AI Stack with the IMX500 Intelligent Sensor

JF Joly, Product Manager for the AITRIOS platform at Sony Semiconductor, demonstrates the company’s latest edge AI and vision technologies and products at the 2025 Embedded Vision Summit. Specifically, Joly demonstrates Sony’s fully open-source software stack that enables the creation of AI-powered cameras using the IMX500 intelligent vision sensor. In this demo, Joly illustrates how

Sony Semiconductor Demonstration of On-sensor YOLO Inference with the Sony IMX500 and Raspberry Pi

Amir Servi, Edge AI Product Manager at Sony Semiconductor, demonstrates the company’s latest edge AI and vision technologies and products at the 2025 Embedded Vision Summit. Specifically, Servi demonstrates the IMX500, the first vision sensor with integrated edge AI processing capabilities. Using the Raspberry Pi AI Camera and Ultralytics YOLOv11n models, Servi showcases real-time

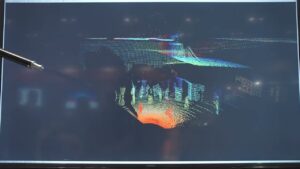

Namuga Vision Connectivity Demonstration of Compact Solid-state LiDAR for Automotive and Robotics Applications

Min Lee, Business Development Team Leader at Namuga Vision Connectivity, demonstrates the company’s latest edge AI and vision technologies and products at the 2025 Embedded Vision Summit. Specifically, Lee demonstrates a compact solid-state LiDAR solution tailored for automotive and robotics industries. This solid-state LiDAR features high precision, fast response time, and no moving parts—ideal for

Namuga Vision Connectivity Demonstration of an AI-powered Total Camera System for an Automotive Bus Solution

Min Lee, Business Development Team Leader at Namuga Vision Connectivity, demonstrates the company’s latest edge AI and vision technologies and products at the 2025 Embedded Vision Summit. Specifically, Lee demonstrates his company’s AI-powered total camera system. The system is designed for integration into public transportation, especially buses, enhancing safety and automation. It includes front-view, side-view,

Namuga Vision Connectivity Demonstration of a Real-time Eye-tracking Camera Solution with a Glasses-free 3D Display

Min Lee, Business Development Team Leader at Namuga Vision Connectivity, demonstrates the company’s latest edge AI and vision technologies and products at the 2025 Embedded Vision Summit. Specifically, Lee demonstrates a real-time eye-tracking camera solution that accurately detects the viewer’s eye position and angle. This data enables a glasses-free 3D display experience using an advanced

Micron Demonstration of Its Key Partner Enablement, Driving Solutions for AI and VLMs at the Edge

Wil Florentino, Senior Strategic Marketing Manager for Industrial at Micron, demonstrates the company’s latest edge AI and vision technologies and products at the 2025 Embedded Vision Summit. Specifically, Florentino highlights how Micron’s extensive partnerships and customers are enabling artificial intelligence (AI) for the global market. Florentino showcases two specific demos with different use cases. The

Micron Demonstration of Memory in Automotive: Great Things Come in Small Packages

Bill Stafford, Marketing Solutions Director at Micron, demonstrates the company’s latest edge AI and vision technologies and products at the 2025 Embedded Vision Summit. Specifically, Stafford demonstrates his company’s collaboration with Infineon on the TRAVEO T2G CYT4EN graphics microcontroller teamed with Micron LPDDR4 and e.MMC, which give automotive clusters media-rich content, high performance, and

Micron Demonstration of Memory in Artificial Intelligence: Leading from Performance to Safety

Bill Stafford, Marketing Solutions Director at Micron, demonstrates the company’s latest edge AI and vision technologies and products at the 2025 Embedded Vision Summit. Specifically, Stafford demonstrates his company’s key products in the artificial intelligence (AI) and computer vision markets. Micron manufactures one of the broadest portfolios of memory and storage solutions in the world.