| LETTER FROM THE EDITOR |

Dear Colleague,

Next Thursday, February 27, Jeff Bier, founder of the Edge AI and Vision Alliance, will present two sessions of a free one-hour webinar, “Algorithms, Processors and Tools for Visual AI: Analysis, Insights and Forecasts”. Every year since 2015, the Edge AI and Vision Alliance has surveyed developers of computer vision-based systems and applications to understand which chips and tools they use to build their visual AI products. The results of our most recent survey, conducted in October 2019, are based on responses from more than 700 computer vision developers across a wide range of industries, organizations, geographical locations and job types.

In this webinar, Bier will draw on the survey results to provide insights into the hardware and software platforms most commonly used in vision-enabled end products. He will not only share this year’s results but also compare them with data from past years’ surveys, identifying trends and extrapolating them to forecast future results. The first session will take place at 9 am Pacific Time (noon Eastern Time) and is timed for attendees in Europe and the Americas. The second session, at 6 pm Pacific Time (10 am China Standard Time on February 28), is intended for attendees in Asia. To register, please see the event page for the session you’re interested in.

Registration for the May 18-21, 2020 Embedded Vision Summit, the preeminent conference on practical visual AI and computer vision, is now open. Be sure to register today with promo code SUPEREARLYBIRD20 to receive your Super Early Bird Discount, which ends this Friday!

The Alliance is also now accepting applications for the 2020 Vision Product of the Year Awards competition. Open to Member companies of the Alliance, the awards celebrate the innovation of the industry’s leading companies that are developing and enabling the next generation of computer vision products. Winning a Vision Product of the Year award recognizes your leadership in computer vision, as evaluated by independent industry experts. Winners will be announced at the 2020 Embedded Vision Summit. For more information, and to enter, please see the program page.

Brian Dipert

| DEEP LEARNING SOFTWARE DEVELOPMENT |

|

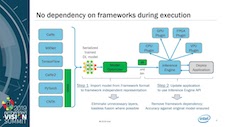

Performance Analysis for Optimizing Embedded Deep Learning Inference Software How to Get the Best Deep Learning Performance with the OpenVINO Toolkit |

| EVENT AND OBJECT DETECTION |

|

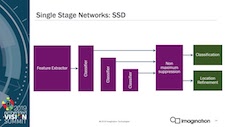

Using Deep Learning for Video Event Detection on a Compute Budget Object Detection for Embedded Markets |

| UPCOMING INDUSTRY EVENTS |

Yole Développement Webinar – 3D Imaging and Sensing: From Enhanced Photography to an Enabling Technology for AR and VR: February 19, 2020, 8:00 am PT

Edge AI and Vision Alliance Webinar – Algorithms, Processors and Tools for Visual AI: Analysis, Insights and Forecasts: February 27, 2020, 9:00 am and 6:00 pm PT (two sessions)

Embedded Vision Summit: May 18-21, 2020, Santa Clara, California

| FEATURED NEWS |

New AI Technology from Arm Delivers On-device Intelligence for IoT

Basler Introduces an Embedded Vision System for Cloud-based Machine Learning Applications

Mobileye’s Global Ambitions Take Shape with New Deals in China and South Korea

Allied Vision’s New Alvium CSI-2 Camera Enables Full HD Resolution for Embedded Vision

A New OmniVision 48MP Image Sensor Provides High Dynamic Range and 4K Video Performance for Flagship Mobile Phones