| LETTER FROM THE EDITOR |

Dear Colleague,

Intel, a Premier Sponsor of the upcoming Embedded Vision Summit, will be delivering three workshops during the event:

Check out the technology workshops page on the Summit website for more information and online registration for these three workshops, along with the workshop “Beyond 2020—Vision SoCs for the Edge” from Synopsys. And while you’re there, be sure to also register for the Embedded Vision Summit, taking place online September 15-25!

On August 20, 2020, Jeff Bier, founder of the Edge AI and Vision Alliance, will present two sessions of a free one-hour webinar, “Key Trends in the Deployment of Edge AI and Computer Vision”. The first session will take place at 9 am PT (noon ET), timed for attendees in Europe and the Americas. The second session, at 6 pm PT (9 am China Standard Time on August 21), is timed for attendees in Asia. With so much happening in edge AI and computer vision applications and technology, and happening so fast, it can be difficult to see the big picture. This webinar from the Alliance will examine the four most important trends that are fueling the proliferation of edge AI and vision applications and influencing the future of the industry:

Bier will explain what’s fueling each of these trends and will highlight their implications for technology suppliers, solution developers and end-users. He will also provide technology and application examples illustrating each trend, including a spotlight on the winners of the Alliance’s 2020 Vision Product of the Year Awards. A question-and-answer session will follow the presentation. See here for more information, including online registration.

Brian Dipert

| COVID-19 (THE NOVEL CORONAVIRUS) AND COMPUTER VISION |

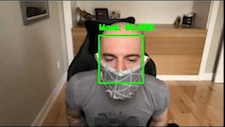

Face Mask Detection in Street Camera Video Streams Using AI: Behind the Curtain

The pressure to show results as quickly as possible, along with inevitable AI “overpromising” by some developers and implementers, unfortunately often sends a misguided message: that solving these use cases is trivial thanks to AI’s “mighty powers”. To paint a more accurate and complete picture, in this technical article the company details the creative process behind a computer vision-based solution for one example application:

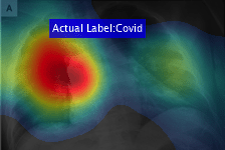

Deep Learning for Medical Imaging: COVID-19 Detection

Huiying Medical: Helping Combat COVID-19 with AI Technology

| UPCOMING INDUSTRY EVENTS |

Edge AI and Vision Alliance Webinar – Key Trends in the Deployment of Edge AI and Computer Vision: August 20, 2020, 9:00 am PT and 6:00 pm PT

Embedded Vision Summit: September 15-25, 2020

| FEATURED NEWS |

OpenCV.org Launches an Affordable Smart Camera with a Kickstarter Campaign

STMicroelectronics Enables Innovative Social-Distancing Applications with FlightSense Time-of-Flight Proximity Sensors

Upcoming Online Presentations from Codeplay Software Help You Develop SYCL Code

Multiple Shipping Khronos OpenXR-conformant Systems Deliver XR Application Portability

Allied Vision’s 12.2 Mpixel Alvium 1800 USB Camera Integrates a Rolling-shutter Small-pixel Sensor

| VISION PRODUCT OF THE YEAR WINNER SHOWCASE |

Morpho Semantic Filtering (Best AI Software or Algorithm)

Please see here for more information on Morpho and its Semantic Filtering. The Vision Product of the Year Awards are open to Member companies of the Edge AI and Vision Alliance and celebrate the innovation of the industry’s leading companies that are developing and enabling the next generation of computer vision products. Winning a Vision Product of the Year award recognizes leadership in computer vision as evaluated by independent industry experts.

| EMBEDDED VISION SUMMIT SPONSOR SHOWCASE |

Attend the Embedded Vision Summit to meet this and other leading computer vision and edge AI technology suppliers!

Microsoft M12