Felix Heide, Co-Founder and Chief Technology Officer at Algolux, presents the “Designing Cameras to Detect the ‘Invisible’: Handling Edge Cases Without Supervision” tutorial at the September 2020 Embedded Vision Summit.

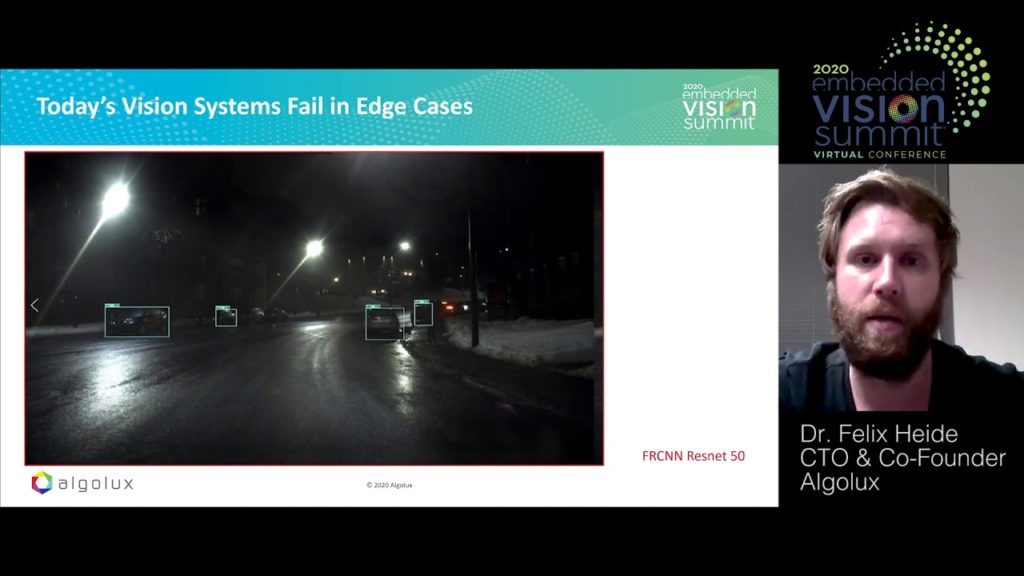

Vision systems play an essential role in safety-critical applications, such as advanced driver assistance systems, autonomous vehicles, video security, and fleet management. However, today’s imaging and vision stacks fundamentally rely on supervised training, and training datasets are naturally biased toward common conditions. This makes it challenging to handle “edge cases”: unusual scenes, object types, and environmental conditions, such as rare, dense fog and snow. In this talk, Heide introduces the computational imaging and computer vision approaches that Algolux uses to handle such edge cases.

Instead of relying purely on supervised downstream networks to become more robust by seeing more training data, Algolux rethinks the camera design itself and optimizes new processing stacks, from photon to detection, so that the camera and the downstream detector are designed jointly. Heide shows how such co-designed cameras, using the company’s Eos camera stack, outperform public and commercial vision systems (Tesla’s latest OTA Model S Autopilot and NVIDIA DriveWorks). He also shows how the same approach can be applied to depth imaging, allowing Algolux to extract accurate, dense depth from low-cost CMOS gated imagers (beating scanning lidar, such as Velodyne’s HDL64).

See here for a PDF of the slides.