This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA.

NVIDIA CUDA-X AI is a collection of deep learning libraries that researchers and software developers use to build high-performance, GPU-accelerated applications for conversational AI, recommendation systems, and computer vision.

Learn what’s new in the latest releases of CUDA-X AI libraries.

Refer to each package’s release notes in the documentation for additional information.

NVIDIA Jarvis Open Beta

NVIDIA Jarvis is an application framework for multimodal conversational AI services that delivers real-time performance on GPUs. This version of Jarvis includes:

- ASR, NLU, and TTS models trained on thousands of hours of speech data.

- Transfer Learning Toolkit with a zero-coding approach to re-train on custom data.

- Fully accelerated deep learning pipelines optimized to run as scalable services.

- End-to-end workflow and tools to deploy services using one line of code.

Transfer Learning Toolkit 3.0 Developer Preview

NVIDIA released new pre-trained models for computer vision and conversational AI that can be easily fine-tuned with Transfer Learning Toolkit (TLT) 3.0 with a zero-coding approach.

Key highlights:

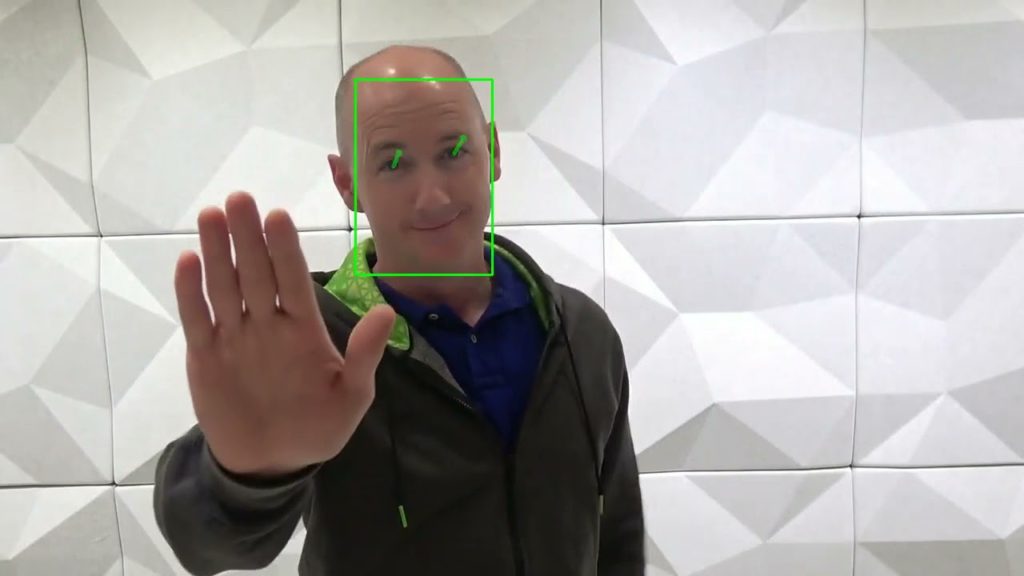

- New vision AI pre-trained models: license plate detection and recognition, heart rate monitoring, gesture recognition, gaze estimation, emotion recognition, face detection, and facial landmark estimation

- Newly added support for automatic speech recognition (ASR) and natural language processing (NLP)

- Choice of training with popular network architectures such as EfficientNet, YoloV4, and UNET

- Support for NVIDIA Ampere GPUs with third-generation tensor cores for a performance boost

Triton Inference Server 2.7

Triton Inference Server is open-source, multi-framework, cross-platform inference serving software designed to simplify deploying models to production. Version 2.7 includes:

- Model Analyzer – automatically finds the best model configuration to maximize performance based on user-specified requirements

- Model Repo Agent API – enables custom operations to be performed on models as they are loaded (such as decrypting, checksumming, or applying TF-TRT optimization)

- Added support for ONNX Runtime backend in Triton Windows build

- Added example Java and Scala clients based on the gRPC-generated API

Read full release notes here.

TensorRT 7.2 is Now Available

NVIDIA TensorRT is a platform for high-performance deep learning inference. This version of TensorRT includes:

- New Polygraphy toolkit, which assists in prototyping and debugging deep learning models in various frameworks

- Support for Python 3.8

Merlin Open Beta

Merlin is an application framework and ecosystem that enables end-to-end development of recommender systems, accelerated on NVIDIA GPUs. Merlin Open Beta highlights include:

- NVTabular and HugeCTR inference support in Triton Inference Server

- Cloud configurations and cloud support (AWS/GCP)

- Dataset analysis and generation tools

- New Python API for HugeCTR, similar to Keras, that no longer requires JSON configuration

DeepStream SDK 5.1

NVIDIA DeepStream SDK is a streaming analytics toolkit for AI-based multi-sensor processing.

Key highlights for DeepStream SDK 5.1 (General Availability):

- New Python apps for using optical flow, segmentation networks, and analytics using ROI and line crossing

- Support for audio analytics with a sample application highlighting audio classifier usage

- Support for NVIDIA Ampere GPUs with third-generation tensor cores and various performance optimizations

nvJPEG2000 0.2

nvJPEG2000 is a new library for GPU-accelerated JPEG2000 image decoding. This version of nvJPEG2000 includes:

- Support for multi-tile and multi-layer decoding.

- Partial decode by specifying an area of interest for increased efficiency

- New API for commonly used GDAL interface for geospatial images

- 4x faster lossless decoding with the 5-3 wavelet transform and 7x faster lossy decoding with the 9-7 wavelet transform. Further speedup can be achieved by pipelining the decoding of multiple images.
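To illustrate why restricting a decode to an area of interest can save work on a multi-tile image, here is a minimal conceptual sketch in Python. It is not the nvJPEG2000 API and makes no claim about how the library implements partial decode; the function name `tiles_for_region` and its parameters are hypothetical. It only computes which tiles of a regular tile grid overlap a requested rectangle, assuming a half-open region `[x0, x1) x [y0, y1)` in pixel coordinates.

```python
from typing import List, Tuple


def tiles_for_region(
    image_w: int, image_h: int,
    tile_w: int, tile_h: int,
    x0: int, y0: int, x1: int, y1: int,
) -> List[Tuple[int, int]]:
    """Return (column, row) indices of tiles overlapping the region.

    Hypothetical helper for illustration only; not part of nvJPEG2000.
    The region is half-open: it covers x0 <= x < x1 and y0 <= y < y1.
    """
    # Clamp the requested region to the image bounds.
    x0, y0 = max(0, x0), max(0, y0)
    x1, y1 = min(image_w, x1), min(image_h, y1)
    if x0 >= x1 or y0 >= y1:
        return []  # The region lies entirely outside the image.

    # Integer division maps a pixel coordinate to its tile index.
    first_col, last_col = x0 // tile_w, (x1 - 1) // tile_w
    first_row, last_row = y0 // tile_h, (y1 - 1) // tile_h

    return [
        (col, row)
        for row in range(first_row, last_row + 1)
        for col in range(first_col, last_col + 1)
    ]


if __name__ == "__main__":
    # A 4096x4096 image split into 1024x1024 tiles has 16 tiles in total.
    needed = tiles_for_region(4096, 4096, 1024, 1024, 900, 900, 1100, 1100)
    print(f"{len(needed)} of 16 tiles overlap the area of interest: {needed}")
```

In this example a small region that straddles a tile corner touches only 4 of the 16 tiles, so a decoder that skips the untouched tiles has correspondingly less data to process.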

NVIDIA NeMo 1.0.0b4

NVIDIA NeMo is a toolkit to build, train and fine-tune state-of-the-art speech and language models easily. Highlights of this version include:

- Compatible with Jarvis 1.0.0b2 public beta and TLT 3.0 releases

Deep Learning Examples

Deep Learning Examples provide state-of-the-art reference examples that are easy to train and deploy, achieving the best reproducible accuracy and performance with the NVIDIA CUDA-X AI software stack running on NVIDIA Volta, Turing, and Ampere GPUs.

New Model Scripts available from the NGC Catalog:

- nnUNet/PyT: A Self-adapting Framework for U-Net for state-of-the-art Segmentation across distinct entities, image modalities, image geometries, and dataset sizes, with no manual adjustments between datasets.

- Wide and Deep/TF2: Wide & Deep refers to a class of networks that use the output of two parts working in parallel – a wide model and a deep model – to make a binary click-through rate (CTR) prediction.

- EfficientNet PyT & TF2: A model that scales the depth, width, and resolution to achieve better performance across different datasets. EfficientNet B4 achieves state-of-the-art 82.78% top-1 accuracy on ImageNet, while being 8.4x smaller and 6.1x faster on inference than the best existing ConvNet.

- Electra: A novel pre-training method for language representations which outperforms existing techniques, given the same compute budget on a wide array of Natural Language Processing (NLP) tasks.
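The Wide & Deep idea described in the list above (two parts working in parallel whose outputs feed one binary CTR prediction) can be sketched in a few lines of plain Python. This is a toy illustration, not the NGC model script: the function names, the single hidden layer, and the hand-picked weights are all assumptions, and the choice to sum the two logits before a sigmoid is one common way to combine them rather than something stated here.

```python
import math
from typing import List, Sequence


def sigmoid(x: float) -> float:
    """Map a real-valued logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def wide_logit(features: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Wide part: a linear model over (typically sparse or crossed) features."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def deep_logit(
    features: Sequence[float],
    hidden_w: Sequence[Sequence[float]],
    hidden_b: Sequence[float],
    out_w: Sequence[float],
    out_b: float,
) -> float:
    """Deep part: a one-hidden-layer ReLU network over dense features."""
    hidden: List[float] = [
        max(0.0, sum(w * x for w, x in zip(row, features)) + b)
        for row, b in zip(hidden_w, hidden_b)
    ]
    return sum(w * h for w, h in zip(out_w, hidden)) + out_b


def predict_ctr(wide: float, deep: float) -> float:
    """Combine both parts: sum the logits (an assumption), then apply a sigmoid."""
    return sigmoid(wide + deep)


if __name__ == "__main__":
    # Hand-picked toy weights; a real model would learn these from data.
    wide = wide_logit([1.0, 0.0, 1.0], weights=[0.5, -0.2, 0.1], bias=0.0)
    deep = deep_logit(
        [1.0, 2.0],
        hidden_w=[[0.5, 0.25], [-1.0, 0.0]],
        hidden_b=[0.0, 0.0],
        out_w=[0.4, 0.3],
        out_b=0.0,
    )
    print(f"Predicted click probability: {predict_ctr(wide, deep):.4f}")
```

The wide part can memorize specific feature combinations, while the deep part generalizes from dense inputs; combining their outputs lets a single prediction draw on both.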

Brad Nemire

Developer Communications Team Lead, NVIDIA