The new Eos Robust Depth Perception Software addresses the range, resolution, cost, and robustness limitations of the latest lidar-, radar-, and camera-based systems with a scalable and modular software perception suite.

MONTREAL, September 12, 2022 – Algolux Inc., the leading provider of robust and scalable depth perception solutions, has announced its Eos Robust Depth Perception Software. The expanded AI software offering builds on the company’s advanced perception portfolio to now deliver both industry-leading dense depth estimation and robust perception to further increase the safety of passenger and transport vehicles in all lighting and weather conditions.

This new offering addresses the cost and performance limitations in today’s advanced driver assistance systems (ADAS) and autonomous vehicle (AV) platforms by applying the same breakthrough deep learning approach used by Algolux’s award-winning robust perception software.

“Mercedes-Benz has a track record as an industry leader delivering ADAS and autonomous driving systems, so we intimately understand the need to further improve the perception capabilities of these systems to enable safer operation of vehicles in all operating conditions,” said Werner Ritter, Manager, Vision Enhancement Technology Environment Perception, Mercedes-Benz AG. “Algolux has leveraged the novel AI technology in their camera-based Eos Robust Depth Perception Software to deliver dense depth and detection that provide next-generation range and robustness while addressing limitations of current lidar and radar based approaches for ADAS and autonomous driving.”

The ability to estimate the distance of an object is a fundamental capability for ADAS and automated driving. It allows the vehicle to understand where things are in its surroundings in order to know when to perform a lane change, help park a car, issue a warning to the driver, or automatically brake in an emergency, such as when there is debris on the road. This is accomplished today by various types of sensors, such as lidar, radar, and stereo or mono cameras, and edge software that interprets the sensor information to determine distance to important objects, features, or obstacles in front of and around the vehicle.

Unfortunately, each of the current approaches has limitations that hamper safe operation in all conditions. Lidar has a limited effective range (up to 200m) because point density decreases the farther away an object is, resulting in poor object detection at distance. It also has low robustness in harsh weather conditions such as fog or rain due to backscatter of the laser, and it is costly, currently hundreds to thousands of dollars per sensor. Radar has good range and robustness but poor resolution, which also limits its ability to detect and classify objects. Today’s stereo camera approaches can do a good job of object detection but are hard to keep calibrated and have low robustness, and mono cameras have many issues that result in poor depth estimation.
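
To illustrate why lidar point density falls off with distance, the sketch below estimates how many returns land on a pedestrian-sized target at several ranges. It assumes a scanning lidar with a fixed angular step, so point spacing grows linearly with range and the point count drops with the square of range. The function name, the 0.1 degree angular resolution, and the target size are hypothetical values chosen for illustration, not figures from any sensor datasheet or from Algolux.

```python
import math


def points_on_target(range_m, width_m, height_m, h_res_deg=0.1, v_res_deg=0.1):
    """Approximate number of lidar returns on a flat target facing the sensor.

    Uses the small-angle approximation: spacing between neighboring
    points at a given range is range * angular_step (in radians).
    The default angular resolutions are illustrative assumptions.
    """
    h_spacing_m = range_m * math.radians(h_res_deg)
    v_spacing_m = range_m * math.radians(v_res_deg)
    return (width_m / h_spacing_m) * (height_m / v_spacing_m)


# Hypothetical pedestrian-sized target: 0.5 m wide, 1.7 m tall.
for range_m in (50.0, 100.0, 200.0):
    count = points_on_target(range_m, width_m=0.5, height_m=1.7)
    print(f"{range_m:5.0f} m: about {count:6.1f} points on target")
```

Under these assumptions, each doubling of range cuts the number of points on the target by a factor of four, which is why detection from sparse returns becomes difficult toward the far end of the sensor's range.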

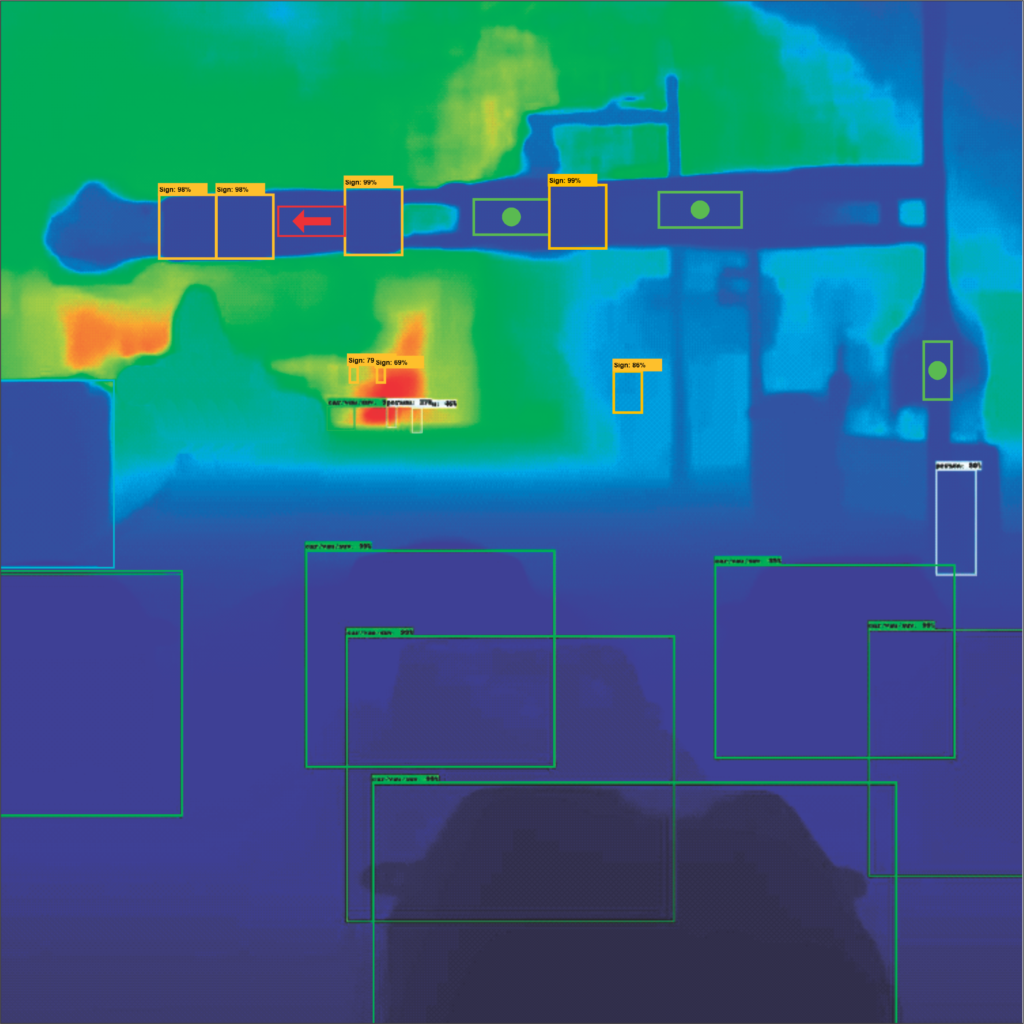

Eos Robust Depth Perception Software addresses these limitations by robustly providing dense depth together with accurate perception capabilities. It determines distance and elevation even out to long distances (1km) and identifies objects, pedestrians, and bicyclists, as well as lost cargo or other hazardous road debris, to further improve driving safety. These modular capabilities provide rich 3D scene reconstruction and offer a highly capable and cost-effective alternative to lidar, radar, and today’s stereo approaches.

Eos accomplishes this with:

- a multi-camera approach supporting a wide baseline between the cameras, even beyond 2m, especially useful for long-range applications such as trucking

- flexible support of up to 8MP automotive camera sensors and any field of view for forward, rear, and surround configurations

- real-time adaptive calibration to address vibration and movement between the cameras or misalignments while driving, historically a key challenge for wide-baseline configurations

- an efficient embedded implementation of Algolux’s novel end-to-end deep learned architecture for both depth estimation and perception

- the ability to detect and determine distance for typical road objects and even unknown road debris
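
The value of a wide baseline for long-range depth can be seen from standard rectified-stereo geometry, where depth is Z = f·B/d (focal length f in pixels, baseline B, disparity d) and a disparity error of δd pixels produces a depth error of roughly Z²·δd/(f·B). The sketch below applies that textbook relation. It is not a description of the Eos implementation, and the focal length, disparity error, and function names are hypothetical values chosen for illustration.

```python
def disparity_px(depth_m, baseline_m, focal_px):
    """Disparity in pixels for a point at depth_m in a rectified stereo pair."""
    return focal_px * baseline_m / depth_m


def depth_error_m(depth_m, baseline_m, focal_px, disparity_error_px=0.25):
    """First-order depth uncertainty: dZ = Z^2 * dd / (f * B).

    The 0.25 px default matching error is an illustrative assumption.
    """
    return depth_m ** 2 * disparity_error_px / (focal_px * baseline_m)


FOCAL_PX = 4000.0  # assumed focal length in pixels, not a figure from Algolux

for baseline_m in (0.3, 2.0):
    for depth_m in (100.0, 500.0, 1000.0):
        d = disparity_px(depth_m, baseline_m, FOCAL_PX)
        err = depth_error_m(depth_m, baseline_m, FOCAL_PX)
        print(
            f"baseline {baseline_m:.1f} m, depth {depth_m:6.0f} m: "
            f"disparity {d:6.2f} px, depth error about {err:7.2f} m"
        )
```

Because the error scales inversely with the baseline, widening it from 0.3m to 2m reduces the estimated depth uncertainty at any given range by the same factor of roughly 6.7 under these assumptions. The trade-off is that wider rigs are harder to keep aligned, which is where adaptive calibration becomes important.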

Algolux has proven its Eos depth perception performance in OEM and Tier 1 engagements involving both trucking and automotive applications in North America and Europe. Visit www.algolux.com to learn more and schedule an evaluation.

About Algolux

Algolux is a globally recognized computer vision company addressing the critical issue of safety for advanced driver assistance systems and autonomous vehicles. Our machine-learning tools and embedded AI software products enable existing and new camera designs to achieve industry-leading depth perception performance across all driving conditions. Founded on groundbreaking research at the intersection of deep learning, computer vision, and computational imaging, Algolux has been repeatedly recognized at industry and academic conferences and has been named to the 2021 CB Insights AI 100 List of the world’s most innovative artificial intelligence startups. Learn more at www.algolux.com.