Automotive Applications for Embedded Vision

Vision products in automotive applications can make us better and safer drivers

Vision products in automotive applications can enhance the driving experience by making us better and safer drivers through both driver and road monitoring.

Driver monitoring applications use computer vision to ensure that the driver remains alert and awake while operating the vehicle. These systems can monitor head movement and body language for indications that the driver is drowsy and therefore poses a threat to others on the road. They can also watch for distracted behaviors such as texting or eating, and respond with a friendly reminder that encourages the driver to focus on the road instead.

In addition to monitoring activities occurring inside the vehicle, exterior applications such as lane departure warning systems can use video with lane detection algorithms to recognize the lane markings and road edges and estimate the position of the car within the lane. The driver can then be warned in cases of unintentional lane departure. Solutions exist to read roadside warning signs and to alert the driver if they are not heeded, as well as for collision mitigation, blind spot detection, park and reverse assist, self-parking vehicles and event-data recording.
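The warning step of a lane departure system can be illustrated with a minimal sketch. It assumes an upstream lane detection stage has already produced the lateral positions of the two lane markings relative to the vehicle centerline. The names `LaneObservation`, `lateral_offset`, and `should_warn`, the default vehicle width and margin, and the use of the turn signal as a proxy for an intentional lane change are all hypothetical choices for illustration, not taken from any particular product.

```python
from dataclasses import dataclass


@dataclass
class LaneObservation:
    """Lateral positions of the detected lane markings, in meters,
    measured from the vehicle centerline (negative = left, positive = right).
    Assumed to come from an upstream lane detection stage."""
    left_m: float
    right_m: float


def lateral_offset(obs: LaneObservation) -> float:
    """Offset of the vehicle centerline from the lane center, in meters.
    Positive means the vehicle sits to the right of the lane center."""
    lane_center = (obs.left_m + obs.right_m) / 2.0
    return 0.0 - lane_center


def should_warn(
    obs: LaneObservation,
    vehicle_width_m: float = 1.8,   # illustrative default
    margin_m: float = 0.2,          # illustrative default
    turn_signal_on: bool = False,
) -> bool:
    """Return True when either side of the vehicle is closer than
    margin_m to a lane marking and no turn signal is active."""
    if turn_signal_on:
        # Treat a signaled lane change as intentional: no warning.
        return False
    half_width = vehicle_width_m / 2.0
    left_gap = -obs.left_m - half_width    # left side of vehicle to left marking
    right_gap = obs.right_m - half_width   # right side of vehicle to right marking
    return min(left_gap, right_gap) < margin_m


if __name__ == "__main__":
    centered = LaneObservation(left_m=-1.75, right_m=1.75)
    drifting = LaneObservation(left_m=-2.5, right_m=1.0)
    for name, obs in (("centered", centered), ("drifting", drifting)):
        print(name, lateral_offset(obs), should_warn(obs))
```

A production system would also need to handle missing or low-confidence markings and to smooth the estimate over several frames; the sketch covers only the geometric check.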

Eventually, this technology will lead to cars with self-driving capability; Google, for example, is already testing prototypes. However, many automotive industry experts believe that the goal of vision in vehicles is not so much to eliminate the driving experience as to make it safer, at least in the near term.

From ADAS to Robotaxi: How to Overcome the Major Vision Challenges

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Key Takeaways Why does robotaxi vision need more than task-driven ADAS sensing? Impact of long-duty operation and changing lighting on perception reliability Challenges faced across vehicles, cities, and operating conditions How visual data continuity affects

CES 2026: Physical AI moves from concept to system architecture

This market analysis was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. The world’s largest consumer electronics conference demonstrated the technical synergies between automotive and robotics. At CES 2026, there was a clear cross-sector message: Physical AI is the common language across the automotive, robotaxi

How Lenovo is scaling Level 4 autonomous robotaxis on Arm

This blog post was originally published at Arm’s website. It is reprinted here with the permission of Arm. As L4 robotaxis shift from pilot to production, Arm offers the compute foundation needed to deliver end-to-end physical AI that scales across vehicle fleets. After years of autonomous driving pilots and controlled trials, the automotive industry is moving toward the production-scale deployment of Level 4 (L4) robotaxis. This marks

What Does a GPU Have to Do With Automotive Security?

This blog post was originally published at Imagination Technologies’ website. It is reprinted here with the permission of Imagination Technologies. The automotive industry is undergoing the most significant transformation since the advent of electronics in cars. Vehicles are becoming software-defined, connected, AI-driven, and continuously updated. This evolution brings extraordinary new capability – but it also brings

Accelerating next-generation automotive designs with the TDA5 Virtualizer™ Development Kit

This blog post was originally published at Texas Instruments’ website. It is reprinted here with the permission of Texas Instruments. Introduction Continuous innovation in high-performance, power-efficient systems-on-a-chip (SoCs) is enabling safer, smarter and more autonomous driving experiences in even more vehicles. As another big step forward, Texas Instruments and Synopsys developed a Virtualizer Development Kit™ (VDK) for the

Into the Omniverse: OpenUSD and NVIDIA Halos Accelerate Safety for Robotaxis, Physical AI Systems

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. NVIDIA Editor’s note: This post is part of Into the Omniverse, a series focused on how developers, 3D practitioners and enterprises can transform their workflows using the latest advancements in OpenUSD and NVIDIA Omniverse. New NVIDIA safety

What Sensor Fusion Architecture Offers for NVIDIA Orin NX-Based Autonomous Vision Systems

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Key Takeaways Why multi-sensor timing drift weakens edge AI perception How GNSS-disciplined clocks align cameras, LiDAR, radar, and IMUs Role of Orin NX as a central timing authority for sensor fusion Operational gains from unified time-stamping

Driving the Future of Automotive AI: Meet RoX AI Studio

This blog post was originally published at Renesas’ website. It is reprinted here with the permission of Renesas. In today’s automotive industry, onboard AI inference engines drive numerous safety-critical Advanced Driver Assistance Systems (ADAS) features, all of which require consistent, high-performance processing. Given that AI model engineering is inherently iterative (numerous cycles of ‘train, validate, and

Why Scalable High-Performance SoCs are the Future of Autonomous Vehicles

This blog post was originally published at Texas Instruments’ website. It is reprinted here with the permission of Texas Instruments. Summary The automotive industry is ascending to higher levels of vehicle autonomy with the help of central computing platforms. SoCs like the TDA5 family offer safe, efficient AI performance through an integrated C7™ NPU and

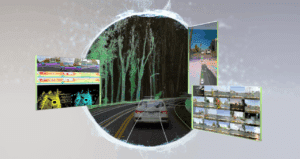

How to Enhance 3D Gaussian Reconstruction Quality for Simulation

This article was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Building truly photorealistic 3D environments for simulation is challenging. Even with advanced neural reconstruction methods such as 3D Gaussian Splatting (3DGS) and 3D Gaussian with Unscented Transform (3DGUT), rendered views can still contain artifacts such as blurriness, holes, or