Cameras and Sensors for Embedded Vision

While analog cameras are still used in many vision systems, this section focuses on digital image sensors—usually either a CCD or CMOS sensor array that operates with visible light. However, this definition shouldn’t constrain the technology analysis, since many vision systems can also sense other types of energy (IR, sonar, etc.).

The camera housing has become the entire chassis for a vision system, leading to the emergence of “smart cameras” with all of the electronics integrated. By most definitions, a smart camera supports computer vision, since the camera is capable of extracting application-specific information. However, as both wired and wireless networks get faster and cheaper, there still may be reasons to transmit pixel data to a central location for storage or extra processing.

A classic example is cloud computing using the camera on a smartphone. The smartphone could be considered a “smart camera” as well, but sending data to a cloud-based computer may reduce the processing performance required on the mobile device, lowering cost, power, weight, etc. For a dedicated smart camera, some vendors have created chips that integrate all of the required features.
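
Whether to process on the camera or send pixels elsewhere depends partly on how much raw data the sensor produces. The following is a minimal sketch of that arithmetic, not a description of any particular product. The function name `raw_data_rate_mbps` and the example resolution, bit depth, and frame rate are illustrative assumptions, and compression, blanking intervals, and protocol overhead are ignored.

```python
def raw_data_rate_mbps(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Return the uncompressed video data rate in megabits per second.

    Uses 1 Mbit = 10**6 bits and ignores compression and link overhead.
    """
    bits_per_frame = width * height * bits_per_pixel
    return bits_per_frame * fps / 1e6


if __name__ == "__main__":
    # Hypothetical stream: 1920x1080 pixels, 8 bits per pixel, 30 frames per second.
    rate = raw_data_rate_mbps(1920, 1080, 8, 30)
    print(f"{rate:.1f} Mbit/s uncompressed")
```

Under these assumptions the stream needs roughly 500 Mbit/s before compression, which suggests why extracting application-specific information at the camera can be attractive even when fast networks are available.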

Cameras

Until recently, many people would imagine a camera for computer vision as the outdoor security camera shown in this picture. There are countless vendors supplying these products, and many more supplying indoor cameras for industrial applications. Don’t forget about simple USB cameras for PCs. And don’t overlook the billion or so cameras embedded in the mobile phones of the world. These cameras’ speed and quality have risen dramatically, and they now support 10+ megapixel sensors with sophisticated image processing hardware.

Consider, too, another important factor for cameras: the rapid adoption of 3D imaging using stereo optics, time-of-flight and structured light technologies. Trendsetting cell phones now even offer this technology, as do latest-generation game consoles. Look again at the picture of the outdoor camera and consider how much change is about to happen to computer vision markets as new camera technologies become pervasive.
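
Two of the 3D techniques named above recover depth from simple textbook relations. The sketch below uses those relations and does not describe any specific camera. It assumes an idealized time-of-flight measurement (distance is half the round-trip path of light) and a rectified pinhole stereo pair (depth equals focal length times baseline divided by disparity). The function names and the sample numbers are hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum


def tof_distance_m(round_trip_time_s: float) -> float:
    """Distance from a time-of-flight measurement: light travels out and back."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def stereo_depth_m(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair (pinhole model).

    focal_length_px: focal length expressed in pixels
    baseline_m:      distance between the two camera centers in meters
    disparity_px:    horizontal pixel shift of the point between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point at finite depth")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical values chosen only to illustrate the formulas.
    print(tof_distance_m(20e-9))             # round trip of 20 nanoseconds
    print(stereo_depth_m(700.0, 0.1, 35.0))  # 700 px focal length, 10 cm baseline
```

Note that stereo depth is inversely proportional to disparity, so distant objects produce small disparities and depth resolution degrades with range. This is one reason the choice among stereo, time-of-flight, and structured light depends on the application.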

Sensors

Charge-coupled device (CCD) sensors have some advantages over CMOS image sensors, mainly because the electronic shutter of CCDs traditionally offers better image quality with higher dynamic range and resolution. However, CMOS sensors now account for more than 90% of the market, heavily influenced by camera phones and driven by the technology’s lower cost, better integration and speed.

e-con Systems to Launch Darsi Pro, an NVIDIA Jetson-Powered AI Compute Box for Advanced Vision Applications

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. This blog offers expert insights into Darsi Pro, how it delivers a unified vision solution, and what it brings to alleviate modern workloads. Darsi Pro comes with GMSL camera options, rugged design, OTA support, and

New IDTechEx Report: Sensor Market 2026-2036

This article was originally published at IDTechEx’s website. It is reprinted here with the permission of IDTechEx. IDTechEx forecasts that the global sensor market will reach US$250B by 2036 as global mega-trends in mobility, AI, robotics, 6G connectivity and IoT drive sensor demand. IDTechEx’s newly updated “Sensor Market 2026-2036: Technologies, Trends, Players, Forecasts” report provides extensive

Sony’s Large Format Sensors: A Look at Global Shutter and Rolling Shutter

On December 4, 2025, at 10:00 am CET (4:00 am EST) Alliance Member companies Sony and RESTAR FRAMOS will deliver a 30-minute technical session “Sony’s Large Format Sensors: A Look at Global Shutter and Rolling Shutter” From the event page: Join experts from Sony, FRAMOS, and RESTAR FRAMOS for a focused, 30-minute technical session examining

VC MIPI Cameras & ADLINK i.MX 8M Plus: Full Driver Support

Ettlingen, November 18, 2025 — For a medical imaging project, ADLINK wanted to integrate VC MIPI Cameras with its I-Pi SMARC IMX8M Plus Development Kit. Vision Components adapted the standard driver for the NXP i.MX 8M Plus processor platform to the ADLINK board, including full ISP support, for features such as color tuning etc. As

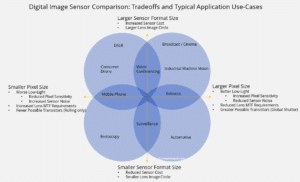

The Image Sensor Size and Pixel Size of a Camera is Critical to Image Quality

This blog post was originally published at Commonlands’ website. It is reprinted here with the permission of Commonlands. The sensor format size and pixel size of digital camera impacts nearly every performance attribute of a camera. The format size is a key element that contributes to system constraints across the low-light performance, dynamic range, size,

Upcoming Webinar Explores Real-time AI Sensor Fusion

On December 17, 2025, at 9:00 am PST (12:00 pm EST), Alliance Member companies e-con Systems, Lattice Semiconductor, and NVIDIA will deliver a joint webinar “Real-Time AI Sensor Fusion with e-con Systems’ Holoscan Camera Solutions using Lattice FPGA for NVIDIA Jetson Thor Platform.” From the event page: Join an exclusive webinar hosted by e-con Systems®,

PiezoMEMS: A Market Poised to Outpace MEMS Growth by 2030

This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. Yole Group unveils two new reports, PiezoMEMS Technologies 2025 and PiezoMEMS Comparison 2025, providing market ranking, technology insights and cost analyses. Key Takeaways The piezoMEMS market will reach nearly US$5.7 billion by 2030,

STMicroelectronics Empowers Data-Hungry Industrial Transformation with Unique Dual-Range Motion Sensor

Nov 6, 2025 Geneva, Switzerland Unique MEMS innovation means deeper contextual awareness for monitoring and safety equipment also in harsh environments Richly detailed motion and event tracking with simultaneous, independent sensing in high-g and low-g ranges Integrates ST’s advanced in-sensor edge AI for autonomy, performance, and power saving STMicroelectronics (NYSE: STM), a global semiconductor leader serving customers

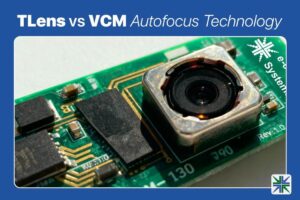

TLens vs VCM Autofocus Technology

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. In this blog, we’ll walk you through how TLens technology differs from traditional VCM autofocus, how TLens combined with e-con Systems’ Tinte ISP enhances camera performance, key advantages of TLens over mechanical autofocus systems, and applications

Unlocking Smarter, More Efficient Computer Vision Systems: A Technical Deep Dive into ST’s New 5MP CMOS Image Sensors

This blog post was originally published at STMicroelectronics’ website. It is reprinted here with the permission of STMicroelectronics. STMicroelectronics is officially unveiling its newest 5-megapixel image sensor series – comprising the VB1943, VB5943, VD1943, and VD5943 models part of ST BrightSense portfolio – raising the bar for applications such as security, robotics and machine vision.