Development Tools for Embedded Vision

ENCOMPASSING MOST OF THE STANDARD ARSENAL USED FOR DEVELOPING REAL-TIME EMBEDDED PROCESSOR SYSTEMS

The software tools (compilers, debuggers, operating systems, libraries, etc.) encompass most of the standard arsenal used for developing real-time embedded processor systems, with the addition of specialized vision libraries and, in some cases, vendor-specific development tools. On the hardware side, the requirements depend on the application space, since the designer may need equipment for monitoring and testing real-time video data. Most of these hardware development tools are already used for other types of video system design.

Both general-purpose and vendor-specific tools

Many vendors of vision devices use integrated CPUs that are based on the same instruction set (ARM, x86, etc.), allowing a common set of development tools for software development. However, even though the base instruction set is the same, each CPU vendor integrates a different set of peripherals that have unique software interface requirements. In addition, most vendors accelerate the CPU with specialized computing devices (GPUs, DSPs, FPGAs, etc.). This extended CPU programming model requires a customized version of standard development tools. Most CPU vendors develop their own optimized software tool chain, while also working with third-party software tool suppliers to make sure that the CPU components are broadly supported.
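As a rough illustration of how a shared instruction set allows a shared tool chain, the sketch below maps a machine name to a GNU cross-compiler prefix. The function names (`normalize_isa`, `compiler_for`) and the mapping tables are hypothetical and exist only for this example; a vendor SDK may ship its own prefix and would still need vendor-specific support for peripherals and accelerators.

```python
import platform

# Widely used GNU cross-toolchain prefixes, keyed by normalized instruction set.
# Illustrative only: a vendor SDK may use a different prefix.
TOOLCHAIN_PREFIXES = {
    "aarch64": "aarch64-linux-gnu-",
    "arm": "arm-linux-gnueabihf-",
    "x86_64": "x86_64-linux-gnu-",
}

# Different operating systems report the same instruction set under different names.
ISA_ALIASES = {
    "arm64": "aarch64",
    "armv7l": "arm",
    "armv6l": "arm",
    "amd64": "x86_64",
}


def normalize_isa(machine: str) -> str:
    """Map an OS-reported machine name to a canonical instruction-set name."""
    name = machine.lower()
    return ISA_ALIASES.get(name, name)


def compiler_for(machine: str) -> str:
    """Return the C compiler command assumed for the given machine name."""
    isa = normalize_isa(machine)
    prefix = TOOLCHAIN_PREFIXES.get(isa)
    if prefix is None:
        raise ValueError(f"no toolchain configured for {machine!r}")
    return prefix + "gcc"


if __name__ == "__main__":
    host = platform.machine()
    print(host, "->", normalize_isa(host))
```

Two boards from different vendors that both report `aarch64` resolve to the same compiler here, which is the point the paragraph above makes about the base instruction set.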

Heterogeneous software development in an integrated development environment

Since vision applications often require a mix of processing architectures, the development tools become more complicated and must handle multiple instruction sets and additional system debugging challenges. Most vendors provide a suite of tools that integrate development tasks into a single interface for the developer, simplifying software development and testing.
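One way to picture a mix of processing architectures behind a single interface is a registry that tries accelerator backends in order of preference and falls back to the CPU. The following is a minimal sketch under that assumption; `BackendRegistry`, `threshold_cpu`, and the backend names are hypothetical and do not correspond to any vendor's tools.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Image = List[List[int]]
Kernel = Callable[[Image, int], Image]


def threshold_cpu(image: Image, level: int) -> Image:
    """Reference CPU implementation: binarize pixels against a threshold."""
    return [[255 if px >= level else 0 for px in row] for row in image]


class BackendRegistry:
    """Holds one implementation of a kernel per processing architecture."""

    def __init__(self) -> None:
        self._backends: Dict[str, Tuple[Kernel, bool]] = {}

    def register(self, name: str, kernel: Kernel, available: bool = True) -> None:
        # 'available' stands in for a real device probe (assumption).
        self._backends[name] = (kernel, available)

    def run(self, preferred: Sequence[str], image: Image, level: int) -> Tuple[str, Image]:
        """Run the kernel on the first registered, available backend in 'preferred'."""
        for name in preferred:
            entry = self._backends.get(name)
            if entry is not None and entry[1]:
                return name, entry[0](image, level)
        raise RuntimeError("no available backend among: " + ", ".join(preferred))


registry = BackendRegistry()
registry.register("cpu", threshold_cpu)
# Registered but marked unavailable, as if no GPU were detected on this board.
registry.register("gpu", threshold_cpu, available=False)

used, result = registry.run(["gpu", "dsp", "cpu"], [[10, 200], [128, 127]], 128)
print(used, result)
```

Keeping a CPU implementation registered for every kernel also gives a reference result to compare against when debugging an accelerated version.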

Top Python Libraries of 2025

This article was originally published at Tryolabs’ website. It is reprinted here with the permission of Tryolabs. Welcome to the 11th edition of our yearly roundup of the Python libraries! If 2025 felt like the year of Large Language Models (LLMs) and agents, it’s because it truly was. The ecosystem expanded at incredible speed, with new models,

How to Enhance 3D Gaussian Reconstruction Quality for Simulation

This article was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Building truly photorealistic 3D environments for simulation is challenging. Even with advanced neural reconstruction methods such as 3D Gaussian Splatting (3DGS) and 3D Gaussian with Unscented Transform (3DGUT), rendered views can still contain artifacts such as blurriness, holes, or

Deep Learning Vision Systems for Industrial Image Processing

This blog post was originally published at Basler’s website. It is reprinted here with the permission of Basler. Deep learning vision systems are often already a central component of industrial image processing. They enable precise error detection, intelligent quality control, and automated decisions – wherever conventional image processing methods reach their limits. We show how a

Free Webinar Examines Autonomous Imaging for Environmental Cleanup

On March 3, 2026 at 9 am PT (noon ET), The Ocean Cleanup’s Robin de Vries, ADIS (Autonomous Debris Imaging System) Lead, will present the free one-hour webinar “Cleaning the Oceans with Edge AI: The Ocean Cleanup’s Smart Camera Transformation,” organized by the Edge AI and Vision Alliance. Here’s the description, from the event registration

Commonlands Demonstration of Field of View & Distortion Visualization for M12 Lenses & S-Mount Lenses

Max Henkart, Founder and Optical Engineer at Commonlands, demonstrates the company’s latest products at the December 2025 Edge AI and Vision Alliance Forum. Specifically, Henkart demonstrates Commonlands’ new real-time tool for visualizing field of view and distortion characteristics of M12/S-Mount lenses across different sensor formats. This demo covers: FOV calculation for focal lengths from 0.8mm

SiMa.ai Announces First Integrated Capability with Synopsys to Accelerate Automotive Physical AI Development

San Jose, California – January 6, 2026 – SiMa.ai today announced the first integrated capability resulting from its strategic collaboration with Synopsys. The joint solution provides a blueprint to accelerate architecture exploration and early virtual software development for AI-ready, next-generation automotive SoCs that support applications such as Advanced Driver Assistance Systems (ADAS) and In-vehicle-Infotainment

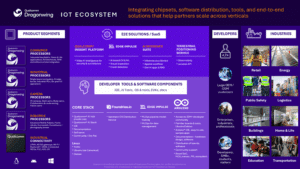

Qualcomm’s IE‑IoT Expansion Is Complete: Edge AI Unleashed for Developers, Enterprises & OEMs

Key Takeaways: Expanded set of processors, software, services, and developer tools including offerings and technologies from the five acquisitions of Augentix, Arduino, Edge Impulse, Focus.AI, and Foundries.io, positions the Company to help meet edge computing and AI needs for customers across virtually all verticals. Completed acquisition of Augentix, a leader in mass-market image processors, extends Qualcomm Technologies’ ability to provide system-on-chips tailored for intelligent IP cameras and vision systems. New Qualcomm Dragonwing™ Q‑7790 and Q‑8750 processors power security-focused on‑device AI across drones, smart cameras

NXP and GE HealthCare Accelerate AI Innovation in Acute Care

NXP and GE HealthCare announced a collaboration to pioneer new advancements in edge AI innovation, beginning with new anesthesia and neonatal concepts showcased at CES 2026. LAS VEGAS and CHICAGO, Illinois, January 6, 2026 — NXP Semiconductors N.V. (NASDAQ: NXPI) and GE HealthCare (NASDAQ: GEHC) today announced a collaboration to pioneer new advancements in