Development Tools for Embedded Vision

ENCOMPASSING MOST OF THE STANDARD ARSENAL USED FOR DEVELOPING REAL-TIME EMBEDDED PROCESSOR SYSTEMS

The software tools used for embedded vision (compilers, debuggers, operating systems, libraries, etc.) encompass most of the standard arsenal used for developing real-time embedded processor systems, with the addition of specialized vision libraries and, in some cases, vendor-specific development tools. On the hardware side, the requirements depend on the application space, since the designer may need equipment for monitoring and testing real-time video data. Most of these hardware development tools are already used for other types of video system design.

Both general-purpose and vendor-specific tools

Many vendors of vision devices use integrated CPUs that are based on the same instruction set (ARM, x86, etc.), allowing a common set of tools to be used for software development. However, even though the base instruction set is the same, each CPU vendor integrates a different set of peripherals that have unique software interface requirements. In addition, most vendors accelerate the CPU with specialized computing devices (GPUs, DSPs, FPGAs, etc.). This extended CPU programming model requires a customized version of standard development tools. Most CPU vendors develop their own optimized software tool chain, while also working with third-party software tool suppliers to make sure that the CPU components are broadly supported.

Heterogeneous software development in an integrated development environment

Since vision applications often require a mix of processing architectures, the development tools become more complicated and must handle multiple instruction sets and additional system debugging challenges. Most vendors provide a suite of tools that integrate development tasks into a single interface for the developer, simplifying software development and testing.
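As a rough illustration of why a mix of processing architectures complicates the software side, the sketch below shows one common pattern: a single entry point that dispatches a vision kernel to whichever backend is available and falls back to the CPU otherwise. Everything here is hypothetical and not taken from any vendor's tool chain: the registry, the backend names (`"dsp"`, `"gpu"`, `"cpu"`), and the function names are assumptions made for the example, and only a plain-Python CPU backend is actually provided.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Pixel = Tuple[int, int, int]  # (r, g, b), each 0..255
Kernel = Callable[[Sequence[Pixel]], List[int]]

# Hypothetical registry mapping a backend name to its grayscale kernel.
# In a real system each entry would wrap a vendor-specific library.
_BACKENDS: Dict[str, Kernel] = {}


def register_backend(name: str) -> Callable[[Kernel], Kernel]:
    """Decorator that records a kernel implementation under a backend name."""
    def decorator(fn: Kernel) -> Kernel:
        _BACKENDS[name] = fn
        return fn
    return decorator


@register_backend("cpu")
def grayscale_cpu(pixels: Sequence[Pixel]) -> List[int]:
    # Integer approximation of the ITU-R BT.601 luma weights
    # (0.299, 0.587, 0.114), scaled by 1000 to avoid floating point.
    return [(299 * r + 587 * g + 114 * b) // 1000 for r, g, b in pixels]


def to_grayscale(
    pixels: Sequence[Pixel],
    preferred: Sequence[str] = ("dsp", "gpu", "cpu"),
) -> Tuple[str, List[int]]:
    """Run the first registered backend in preference order.

    Returns the name of the backend that ran together with its output,
    so a caller (or a test) can see which code path was taken.
    """
    for name in preferred:
        backend = _BACKENDS.get(name)
        if backend is not None:
            return name, backend(pixels)
    raise RuntimeError("no grayscale backend registered")


if __name__ == "__main__":
    used, gray = to_grayscale([(255, 255, 255), (0, 0, 0), (255, 0, 0)])
    print(used, gray)
```

With only the CPU backend registered, the call falls through the `"dsp"` and `"gpu"` entries and runs on the CPU. Returning the backend name alongside the result is a deliberate choice in this sketch: when the same function can execute on several devices, knowing which path actually ran is often the first step in debugging.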

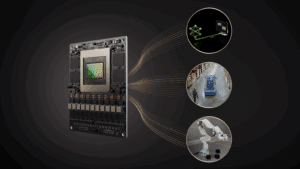

Accelerate AI Inference for Edge and Robotics with NVIDIA Jetson T4000 and NVIDIA JetPack 7.1

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. NVIDIA is introducing the NVIDIA Jetson T4000, bringing high-performance AI and real-time reasoning to a wider range of robotics and edge AI applications. Optimized for tighter power and thermal envelopes, T4000 delivers up to 1200 FP4 TFLOPs of AI compute and 64 GB of memory,

NVIDIA Unveils New Open Models, Data and Tools to Advance AI Across Every Industry

This post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Expanding the open model universe, NVIDIA today released new open models, data and tools to advance AI across every industry. These models — spanning the NVIDIA Nemotron family for agentic AI, the NVIDIA Cosmos platform for physical AI, the new NVIDIA Alpamayo family for autonomous vehicle

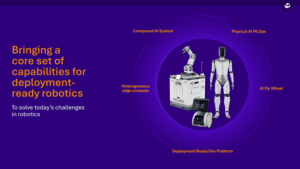

Qualcomm Introduces a Full Suite of Robotics Technologies, Powering Physical AI from Household Robots up to Full-Size Humanoids

Key Takeaways: Utilizing leadership in Physical AI with comprehensive stack systems built on safety-grade high performance SoC platforms, Qualcomm’s general-purpose robotics architecture delivers industry-leading power efficiency and scalability, enabling capabilities from personal service robots to next generation industrial autonomous mobile robots and full-size humanoids that can reason, adapt, and decide. New end-to‑end architecture accelerates automation

NXP Advances Edge AI Leadership with New eIQ Agentic AI Framework

Key Takeaways: New eIQ Agentic AI Framework enables autonomous agentic intelligence at the edge, adding a new pillar to NXP’s edge AI platform. Brings agentic AI to the edge, delivering real-time autonomous decision making for use cases requiring low latency, high reliability and data privacy. Trusted foundation for both experienced and new developers to rapidly

Ambarella Launches a Developer Zone to Broaden its Edge AI Ecosystem

SANTA CLARA, Calif., Jan. 6, 2026 — Ambarella, Inc. (NASDAQ: AMBA), an edge AI semiconductor company, today announced during CES the launch of its Ambarella Developer Zone (DevZone). Located at developer.ambarella.com, the DevZone is designed to help Ambarella’s growing ecosystem of partners learn, build and deploy edge AI applications on a variety of edge systems with greater speed and clarity. It

Grounded AI Starts Here: Rapid Customization for RAG and Context Engineering

This blog post was originally published in expanded form at RapidFire AI’s website. It is reprinted here with the permission of RapidFire AI. Building a reliable Retrieval Augmented Generation (RAG) pipeline should not feel like guesswork. Yet for most AI developers, it still does. According to a recent MIT study on enterprise AI adoption, around 95%

Lattice Enhances sensAI Solution Stack with Edge AI Performance, Efficiency, and Ease of Use

Latest Lattice sensAI™ solution stack delivers industry-leading power efficiency, expanded AI model support, and flexible deployment tools for next-generation edge applications. HILLSBORO, Ore. – Dec. 18, 2025 – Lattice Semiconductor (NASDAQ: LSCC), the low power programmable leader, today announced the latest release of the Lattice sensAI™ solution stack delivering expanded model support, enhanced AI performance, and greater deployment

97% Smaller, Just as Smart: Scaling Down Networks with Structured Pruning

This article was originally published at Analog Devices’ website. It is reprinted here with the permission of Analog Devices. Why Smaller Models Matter Shrinking AI models isn’t just a nice-to-have—it’s a necessity for bringing powerful, real-time intelligence to edge devices. Whether it’s smartphones, wearables, or embedded systems, these platforms operate with strict memory, compute, and