Software for Embedded Vision

Breaking Free from the CUDA Lock-in

This blog post was originally published at SiMa.ai’s website. It is reprinted here with the permission of SiMa.ai. The AI hardware landscape is dominated by one uncomfortable truth: most teams feel trapped by CUDA. You trained your models on NVIDIA GPUs, deployed them with TensorRT, and now the thought of switching hardware feels like rewriting

Upcoming Seminar Explores the Latest Innovations in Mobile Robotics

On October 22, 2025 at 9:00 am PT, Alliance Member company NXP Semiconductors, along with Avnet, will deliver a free (advance registration required) half-day in-person robotics seminar at NXP’s office in San Jose, California. From the event page: Join us for a free in-depth seminar exploring the latest innovations in mobile robotics with a focus

Free Webinar Explores Edge AI-enabled Microcontroller Capabilities and Trends

On November 18, 2025 at 9 am PT (noon ET), the Yole Group’s Tom Hackenberg, principal analyst for computing, will present the free one-hour webinar “How AI-enabled Microcontrollers Are Expanding Edge AI Opportunities,” organized by the Edge AI and Vision Alliance. Here’s the description, from the event registration page: Running AI inference at the edge,

“Lessons Learned Building and Deploying a Weed-killing Robot,” a Presentation from Tensorfield Agriculture

Xiong Chang, CEO and Co-founder of Tensorfield Agriculture, presents the “Lessons Learned Building and Deploying a Weed-Killing Robot” tutorial at the May 2025 Embedded Vision Summit. Agriculture today faces chronic labor shortages and growing challenges around herbicide resistance, as well as consumer backlash to chemical inputs. Smarter, more sustainable approaches… “Lessons Learned Building and Deploying

Using the Qualcomm AI Inference Suite from Google Colab

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. Building off of the blog post here, which shows how easy it is to call the Cirrascale AI Inference Cloud using the Qualcomm AI Inference Suite, we’ll use Google Colab to show the same scenario. In the previous blog

“Transformer Networks: How They Work and Why They Matter,” a Presentation from Synthpop AI

Rakshit Agrawal, Principal AI Scientist at Synthpop AI, presents the “Transformer Networks: How They Work and Why They Matter” tutorial at the May 2025 Embedded Vision Summit. Transformer neural networks have revolutionized artificial intelligence by introducing an architecture built around self-attention mechanisms. This has enabled unprecedented advances in understanding sequential… “Transformer Networks: How They Work

Deploy High-performance AI Models in Windows Applications on NVIDIA RTX AI PCs

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Microsoft is now making Windows ML available to developers. Windows ML enables C#, C++ and Python developers to optimally run AI models locally across PC hardware, including CPUs, NPUs and GPUs. On NVIDIA RTX GPUs, it utilizes

“Understanding Human Activity from Visual Data,” a Presentation from Sportlogiq

Mehrsan Javan, Chief Technology Officer at Sportlogiq, presents the “Understanding Human Activity from Visual Data” tutorial at the May 2025 Embedded Vision Summit. Activity detection and recognition are crucial tasks in various industries, including surveillance and sports analytics. In this talk, Javan provides an in-depth exploration of human activity understanding,… “Understanding Human Activity from Visual

“Multimodal Enterprise-scale Applications in the Generative AI Era,” a Presentation from Skyworks Solutions

Mumtaz Vauhkonen, Senior Director of AI at Skyworks Solutions, presents the “Multimodal Enterprise-scale Applications in the Generative AI Era” tutorial at the May 2025 Embedded Vision Summit. As artificial intelligence is making rapid strides in the use of large language models, the need for multimodality arises in multiple application scenarios. Similar… “Multimodal Enterprise-scale Applications in the

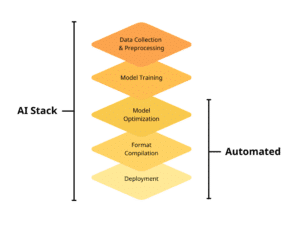

How CLIKA’s Automated Hardware-aware AI Compression Toolkit Efficiently Enables Scalable Deployment of AI on Any Target Hardware

This blog post was originally published at CLIKA’s website. It is reprinted here with the permission of CLIKA. Organisations are making a shift from experimental spending on AI to long-term investments in this new technology but there are challenges involved. Here’s how CLIKA can help. With democratising AI and greater access to open-source AI models,

Using the Qualcomm AI Inference Suite Directly from a Web Page

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. Applying the Qualcomm AI Inference Suite directly from a web page using JavaScript makes it easy to create and understand how AI inference works in web solutions. Qualcomm Technologies in collaboration with Cirrascale has a free-to-try

“Real-world Deployment of Mobile Material Handling Robotics in the Supply Chain,” a Presentation from Pickle Robot Company

Peter Santos, Chief Operating Officer of Pickle Robot Company, presents the “Real-World Deployment of Mobile Material Handling Robotics in the Supply Chain” tutorial at the May 2025 Embedded Vision Summit. More and more of the supply chain needs to be, and can be, automated. Demographics, particularly in the developed world,… “Real-world Deployment of Mobile Material

“Developing a GStreamer-based Custom Camera System for Long-range Biometric Data Collection,” a Presentation from Oak Ridge National Laboratory

Gavin Jager, Researcher and Lab Space Manager at Oak Ridge National Laboratory, presents the “Developing a GStreamer-based Custom Camera System for Long-range Biometric Data Collection” tutorial at the May 2025 Embedded Vision Summit. In this presentation, Jager describes Oak Ridge National Laboratory’s work developing software for a custom camera system… “Developing a GStreamer-based Custom Camera

“Sensors and Compute Needs and Challenges for Humanoid Robots,” a Presentation from Agility Robotics

Vlad Branzoi, Perception Sensors Team Lead at Agility Robotics, presents the “Sensors and Compute Needs and Challenges for Humanoid Robots” tutorial at the September 2025 Edge AI and Vision Innovation Forum.

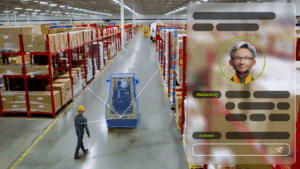

How to Integrate Computer Vision Pipelines with Generative AI and Reasoning

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Generative AI is opening new possibilities for analyzing existing video streams. Video analytics are evolving from counting objects to turning raw video footage into real-time understanding. This enables more actionable insights. The NVIDIA AI Blueprint for

“Scaling Artificial Intelligence and Computer Vision for Conservation,” a Presentation from The Nature Conservancy

Matt Merrifield, Chief Technology Officer at The Nature Conservancy, presents the “Scaling Artificial Intelligence and Computer Vision for Conservation” tutorial at the May 2025 Embedded Vision Summit. In this presentation, Merrifield explains how the world’s largest environmental nonprofit is spearheading projects to scale the use of edge AI and vision… “Scaling Artificial Intelligence and Computer

“A Lightweight Camera Stack for Edge AI,” a Presentation from Meta

Jui Garagate, Camera Software Engineer, and Karthick Kumaran, Staff Software Engineer, both of Meta, co-present the “A Lightweight Camera Stack for Edge AI” tutorial at the May 2025 Embedded Vision Summit. Electronic products for virtual and augmented reality, home robots and cars deploy multiple cameras for computer vision and AI use… “A Lightweight Camera Stack for

“Unlocking Visual Intelligence: Advanced Prompt Engineering for Vision-language Models,” a Presentation from LinkedIn Learning

Alina Li Zhang, Senior Data Scientist and Tech Writer at LinkedIn Learning, presents the “Unlocking Visual Intelligence: Advanced Prompt Engineering for Vision-language Models” tutorial at the May 2025 Embedded Vision Summit. Imagine a world where AI systems automatically detect thefts in grocery stores, ensure construction site safety and identify patient… “Unlocking Visual Intelligence: Advanced Prompt