On-Device LLMs in 2026: What Changed, What Matters, What’s Next

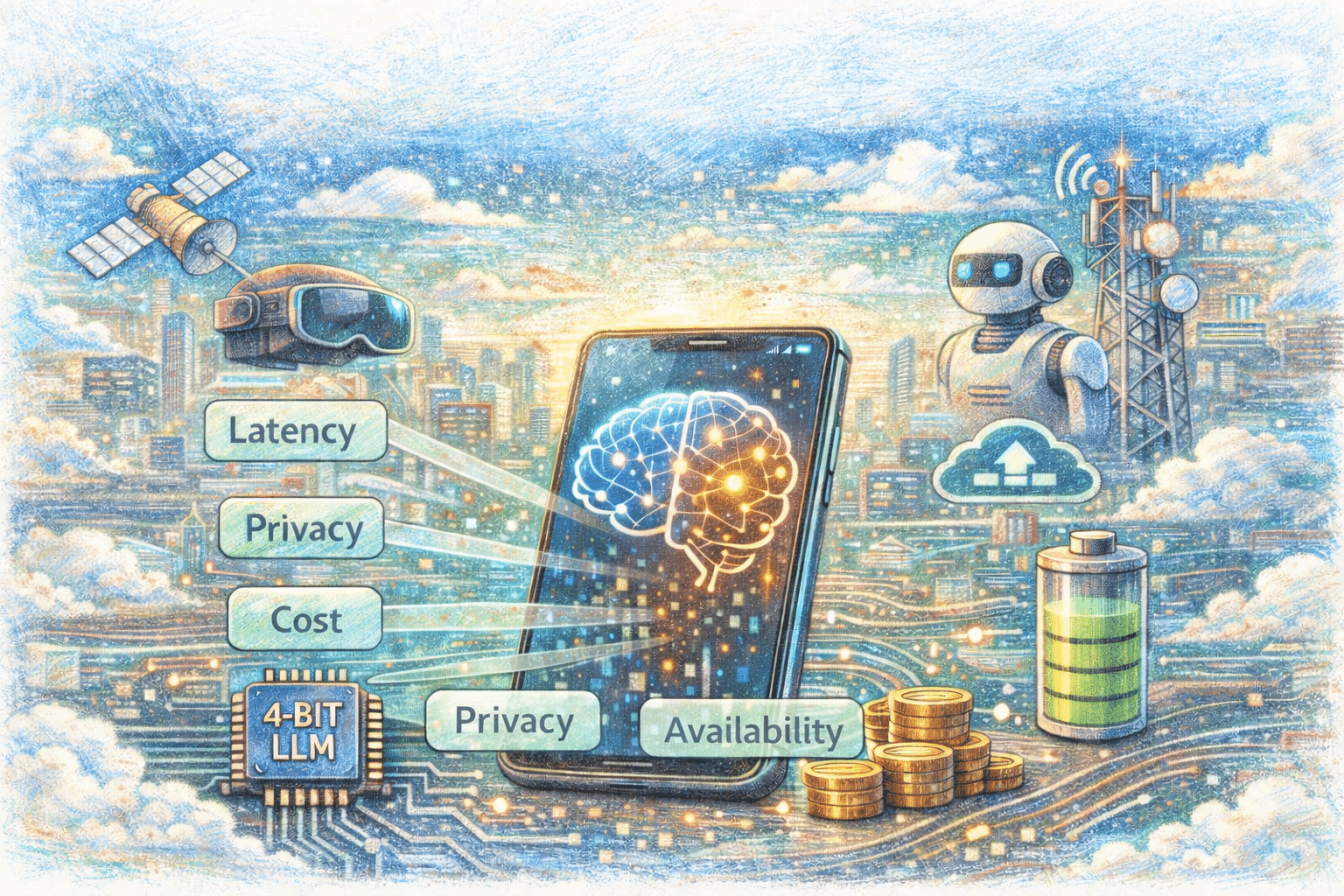

In "On-Device LLMs: State of the Union, 2026," Vikas Chandra and Raghuraman Krishnamoorthi explain why running LLMs on phones has moved from novelty to practical engineering, and why the biggest breakthroughs came not from faster chips but from rethinking how models are built, trained, compressed, and deployed. Why run LLMs locally? Four reasons: latency (cloud […]
