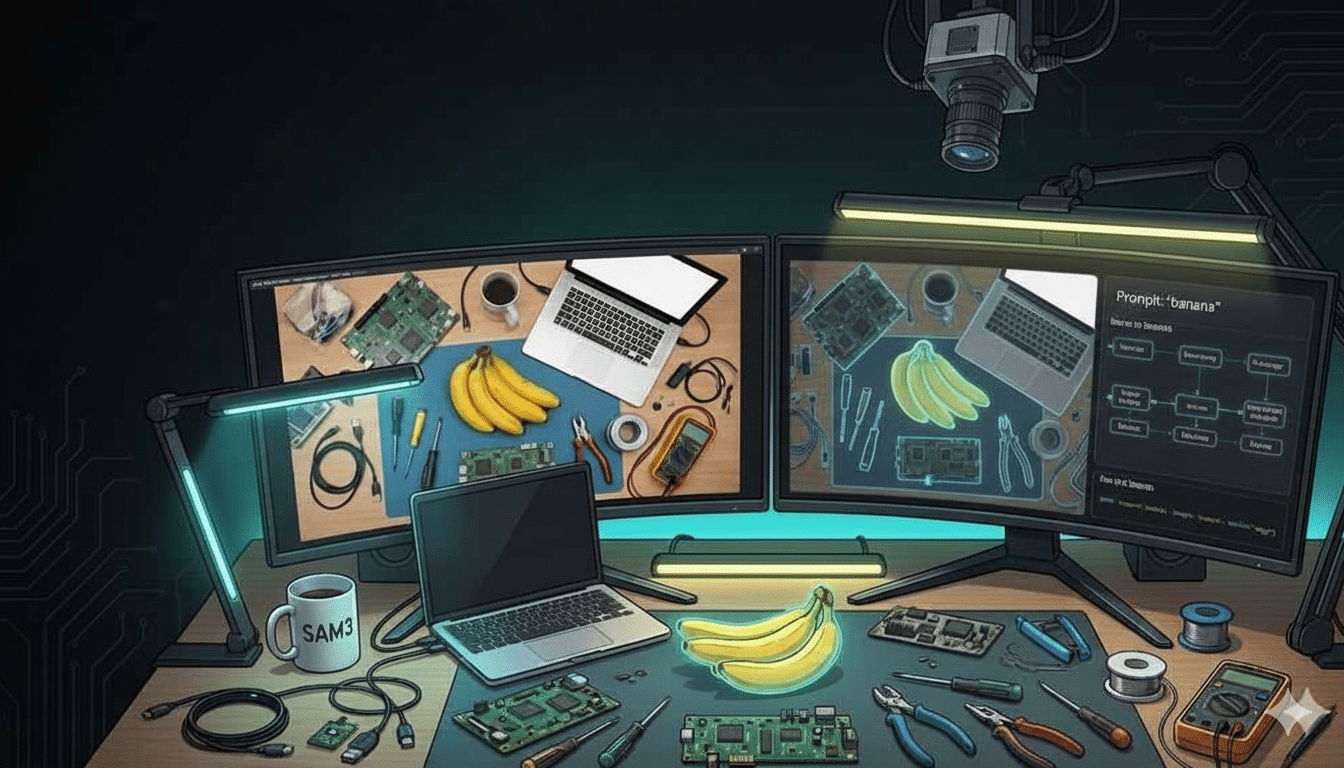

SAM3: A New Era for Open‑Vocabulary Segmentation and Edge AI

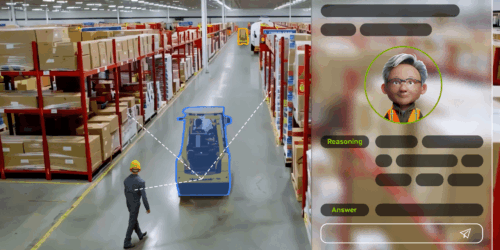

High-quality training data, especially segmented visual data, is a cornerstone of robust vision models. Meta’s recently announced Segment Anything Model 3 (SAM3) arrives as a potential game-changer in this domain. SAM3 is a unified model that can detect, segment, and even track objects in images and videos using both text and visual […]
