Implementing Multimodal GenAI Models on Modalix

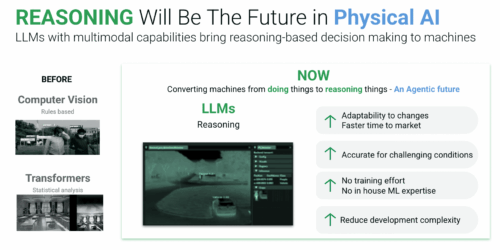

This blog post was originally published at SiMa.ai’s website. It is reprinted here with the permission of SiMa.ai.

It has been our goal since starting SiMa.ai to create one software and hardware platform for the embedded edge that empowers companies to make their AI/ML innovations come to life. With the rise of Generative AI already […]