Resources

In-depth information about edge AI and vision applications, technologies, products, markets and trends.

The content in this section of the website comes from Edge AI and Vision Alliance members and other industry luminaries.

All Resources

Upcoming Webinar on CSI-2 over D-PHY & C-PHY

On February 24, 2026, at 9:00 am PST (12:00 pm EST), MIPI Alliance will deliver a webinar, “MIPI CSI-2 over D-PHY & C-PHY: Advancing Imaging Conduit Solutions.” From the event page: MIPI CSI-2®, together with…

What’s New in MIPI Security: MIPI CCISE and Security for Debug

This blog post was originally published at MIPI Alliance’s website. It is reprinted here with the permission of MIPI Alliance. As the need for security becomes increasingly critical, MIPI Alliance has continued to broaden its…

Production-Ready, Full-Stack Edge AI Solutions Turn Microchip’s MCUs and MPUs Into Catalysts for Intelligent Real-Time Decision-Making

Chandler, Ariz., February 10, 2026 — A major next step for artificial intelligence (AI) and machine learning (ML) innovation is moving ML models from the cloud to the edge for real-time inferencing and decision-making applications in

Accelerating next-generation automotive designs with the TDA5 Virtualizer™ Development Kit

This blog post was originally published at Texas Instruments’ website. It is reprinted here with the permission of Texas Instruments. Introduction: Continuous innovation in high-performance, power-efficient systems-on-a-chip (SoCs) is enabling safer, smarter and more autonomous driving…

Into the Omniverse: OpenUSD and NVIDIA Halos Accelerate Safety for Robotaxis, Physical AI Systems

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. NVIDIA Editor’s note: This post is part of Into the Omniverse, a series focused on how developers,…

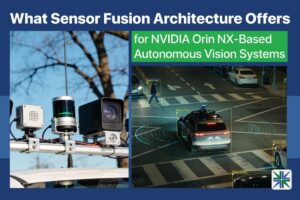

What Sensor Fusion Architecture Offers for NVIDIA Orin NX-Based Autonomous Vision Systems

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Key takeaways: why multi-sensor timing drift weakens edge AI perception; how GNSS-disciplined clocks align cameras, LiDAR,…

Enhancing Images: Adaptive Shadow Correction Using OpenCV

This blog post was originally published at OpenCV’s website. It is reprinted here with the permission of OpenCV. Imagine capturing the perfect landscape photo on a sunny day, only to find harsh shadows obscuring key…

Driving the Future of Automotive AI: Meet RoX AI Studio

This blog post was originally published at Renesas’ website. It is reprinted here with the permission of Renesas. In today’s automotive industry, onboard AI inference engines drive numerous safety-critical Advanced Driver Assistance Systems (ADAS) features, all…

Upcoming Webinar on Industrial 3D Vision with iToF Technology

On February 18, 2026, at 9:00 am PST (12:00 pm EST), and on February 19, 2026, at 11:00 am CET, Alliance Member company e-con Systems, in partnership with onsemi, will deliver a webinar, “Enabling Reliable…

Technologies

TI Accelerates the Next Generation of Physical AI with NVIDIA

News highlights: TI and NVIDIA are collaborating to accelerate the path from simulation to the safe deployment of humanoid robots in the real world. As part of this collaboration, TI integrated its mmWave radar technology with NVIDIA Jetson Thor and NVIDIA Holoscan to enable low-latency 3D perception and safety awareness for physical AI applications. TI…

ModelCat AI Announces AI Model Portability Across Silicon Devices

An industry first, ModelCat’s Agentic AI generates models for new chips using a user’s current production models, dramatically accelerating inferencing to the edge. SUNNYVALE, Calif., March 5, 2026 /PRNewswire/ — ModelCat, the creator of the world’s first fully autonomous AI model builder, today announced its latest innovative platform capability: Model Retargeting (Patent Pending). Using Model Retargeting, ModelCat customers gain model

STM32U3B5/U3C5: Bringing High-Performance DSP & Edge AI to Ultralow Power Designs

Built on the Arm® Cortex®‑M33 core, the STM32U3B5/U3C5 MCUs combine up to 2 Mbytes of dual‑bank flash memory with 640 Kbytes of RAM and are available in packages from 48 to 144 pins (UFQFPN, WLCSP, LQFP, and UFBGA). The lines introduce a hardware signal processor (HSP) to the STM32U3 portfolio, offloading complex DSP and edge‑AI workloads and…

Applications

From ADAS to Robotaxi: How Vision Systems Must Level Up to Meet New Mobility Use Cases (Part 2)

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Key takeaways: how urban lighting and motion define robotaxi imaging needs; which camera features support reliable perception during day and night operation; why unified AI vision boxes reduce latency and coordination gaps; how integrated vision platforms…

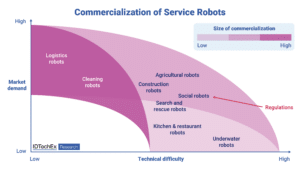

Which Service Robots Will Dominate the Market in the Next 10 Years?

Logistics robots and cleaning robots both benefit from high market demand and relatively low technical barriers, compared to kitchen and restaurant robots or underwater robots. Source: Service Robots 2026-2036: Technologies, Players and Markets. This blog post was originally published at IDTechEx’s website. It is reprinted here with the permission of IDTechEx. The service robotics industry has grown…

Functions

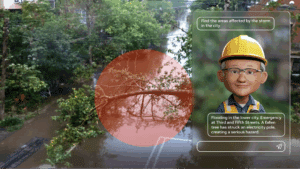

AI On: 3 Ways to Bring Agentic AI to Computer Vision Applications

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Learn how to integrate vision language models into video analytics applications, from AI-powered search to fully automated video analysis. Today’s computer vision systems excel at identifying what happens in physical spaces and processes, but lack the abilities to explain the…

SAM3: A New Era for Open‑Vocabulary Segmentation and Edge AI

Quality training data – especially segmented visual data – is a cornerstone of building robust vision models. Meta’s recently announced Segment Anything Model 3 (SAM3) arrives as a potential game-changer in this domain. SAM3 is a unified model that can detect, segment, and even track objects in images and videos using both text and visual…

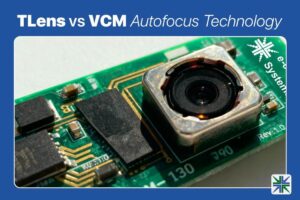

TLens vs VCM Autofocus Technology

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. In this blog, we’ll walk you through how TLens technology differs from traditional VCM autofocus, how TLens combined with e-con Systems’ Tinte ISP enhances camera performance, key advantages of TLens over mechanical autofocus systems, and applications…