Cameras and Sensors for Embedded Vision


While analog cameras are still used in many vision systems, this section focuses on digital image sensors—usually either a CCD or CMOS sensor array that operates with visible light. However, this definition shouldn’t constrain the technology analysis, since many vision systems can also sense other types of energy (IR, sonar, etc.).

The camera housing has become the entire chassis for a vision system, leading to the emergence of “smart cameras” with all of the electronics integrated. By most definitions, a smart camera supports computer vision, since the camera is capable of extracting application-specific information. However, as both wired and wireless networks get faster and cheaper, there still may be reasons to transmit pixel data to a central location for storage or extra processing.

A classic example is cloud computing using the camera on a smartphone. The smartphone could be considered a “smart camera” as well, but sending data to a cloud-based computer may reduce the processing performance required on the mobile device, lowering cost, power, weight, etc. For a dedicated smart camera, some vendors have created chips that integrate all of the required features.
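Whether to send pixel data off the device or process it locally depends partly on how much data the sensor produces. The sketch below is a rough back-of-the-envelope estimate of uncompressed video bandwidth. The function name and the example resolution, bit depth, and frame rate are illustrative assumptions rather than figures from this article, and real links usually carry compressed video at far lower rates.

```python
def raw_video_bandwidth_mbps(width, height, bits_per_pixel, fps):
    """Return uncompressed video bandwidth in megabits per second (1 Mbit = 1e6 bits)."""
    return width * height * bits_per_pixel * fps / 1e6


# Hypothetical sensor: 1920x1080 pixels, 12-bit raw output, 30 frames per second.
bandwidth = raw_video_bandwidth_mbps(1920, 1080, 12, 30)
print(f"Uncompressed stream: {bandwidth:.1f} Mbit/s")
```

Comparing an estimate like this against the available network throughput gives a first indication of whether raw frames can be shipped to a central location or whether on-camera processing or compression is needed.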

Cameras

Until recently, many people would imagine a camera for computer vision as the outdoor security camera shown in this picture. There are countless vendors supplying these products, and many more supplying indoor cameras for industrial applications. Don’t forget about simple USB cameras for PCs. And don’t overlook the billion or so cameras embedded in the mobile phones of the world. These cameras’ speed and quality have risen dramatically, supporting 10+ megapixel sensors with sophisticated image processing hardware.

Consider, too, another important factor for cameras: the rapid adoption of 3D imaging using stereo optics, time-of-flight and structured light technologies. Trendsetting cell phones now even offer this technology, as do latest-generation game consoles. Look again at the picture of the outdoor camera and consider how much change is about to happen to computer vision markets as new camera technologies become pervasive.
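To make two of these 3D techniques concrete, here is a minimal sketch of the standard textbook depth relations: stereo depth from disparity (depth = focal length × baseline / disparity, for a rectified camera pair) and time-of-flight depth from the round-trip time of a light pulse (depth = c × t / 2). The function names and example values are illustrative assumptions, not taken from any particular camera or vendor API.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def stereo_depth_m(focal_length_px, baseline_m, disparity_px):
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_length_px * baseline_m / disparity_px


def tof_depth_m(round_trip_time_s):
    """Depth from a time-of-flight measurement: light travels out and back."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


# Hypothetical values: 700 px focal length, 10 cm baseline, 35 px disparity.
print(f"Stereo depth: {stereo_depth_m(700, 0.1, 35):.2f} m")
# Hypothetical 10 ns round trip for a time-of-flight pulse.
print(f"ToF depth: {tof_depth_m(10e-9):.2f} m")
```

Both relations show why these sensors are demanding: stereo depth resolution degrades as disparity shrinks with distance, and time-of-flight needs timing precision on the order of nanoseconds or better to resolve distances at the meter scale.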

Sensors

Charge-coupled device (CCD) sensors have some advantages over CMOS image sensors, mainly because the electronic shutter of CCDs traditionally offers better image quality with higher dynamic range and resolution. However, CMOS sensors now account for more than 90% of the market, heavily influenced by camera phones and driven by the technology’s lower cost, better integration and speed.

Commonlands Demonstration of Field of View & Distortion Visualization for M12 Lenses & S-Mount Lenses

Max Henkart, Founder and Optical Engineer at Commonlands, demonstrates the company’s latest products at the December 2025 Edge AI and Vision Alliance Forum. Specifically, Henkart demonstrates Commonlands’ new real-time tool for visualizing field of view and distortion characteristics of M12/S-Mount lenses across different sensor formats. This demo covers: FOV calculation for focal lengths from 0.8mm

D3 Embedded Showcases Camera/Radar Fusion, ADAS Cameras, Driver Monitoring, and LWIR solutions at CES

Las Vegas, NV, January 7, 2026 — D3 Embedded is showcasing a suite of technology solutions in partnership with fellow Edge AI and Vision Alliance Members HTEC, STMicroelectronics and Texas Instruments at CES 2026. Solutions include driver and in-cabin monitoring, ADAS, surveillance, targeting and human tracking – and will be viewable at different locations within

TI Accelerates the Shift Toward Autonomous Vehicles with Expanded Automotive Portfolio

New analog and embedded processing technologies from TI enable automakers to deliver smarter, safer and more connected driving experiences across their entire vehicle fleet. Key Takeaways: TI’s newest family of high-performance computing SoCs delivers safe, efficient edge AI performance up to 1200 TOPS with a proprietary NPU and chiplet-ready design. Automakers can simplify radar designs and

How Embedded Vision Is Helping Modernize and Future-Proof Retail Operations

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Physical stores are becoming intelligent environments. Embedded vision turns every critical touchpoint into a source of real-time insight, from shelves and kiosks to checkout zones and digital signages. With cameras analyzing activity as it happens, retailers

The Coming Robotics Revolution: How AI and Macnica’s Capture, Process, Communicate Philosophy Will Define the Next Industrial Era

This blog post was originally published at Macnica’s website. It is reprinted here with the permission of Macnica. Just as networking and fiber-optic infrastructure quietly laid the groundwork for the internet economy, fueling the rise of Amazon, Facebook, and the digital platforms that redefined commerce and communication, today’s breakthroughs in artificial intelligence are setting the stage

Red Light Cameras vs. Traffic Sensors: The Ultimate Guide for Traffic Enforcement

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Intersections create the toughest mix of crashes, congestion, and violations, so cities rely on imaging to bring order and proof. Red light cameras and traffic sensors operate in the same geography yet serve different goals,

Drones Market 2026-2036: Technologies, Markets, and Opportunities

This article was originally published at IDTechEx’s website. It is reprinted here with the permission of IDTechEx. Global Drone Market Set to Reach US$147.8 Billion by 2036, Driven by Commercial Expansion, Regulatory Maturity, and Sensor Proliferation. Over the past decade, drones have moved from experimental tools into critical infrastructure across agriculture, logistics, energy, security, and public-sector

poLight ASA Collaborates with Image Quality Labs on M12-based Raspberry Pi TLens Studio for AI-driven Industrial Machine Vision Applications

TØNSBERG, Norway–(BUSINESS WIRE) — poLight ASA (OSE: PLT) and Image Quality Labs (IQL) today announced the development of an M12-based Raspberry Pi TLens® Studio evaluation and development platform, utilizing the new line of TLens® off-the-shelf (OTS) focusing camera lens. This platform enables machine vision design engineers to quickly and easily evaluate high speed, constant field-of-view

How FPGA-Based Frame Grabbers Are Powering Next-Gen Multi-Camera Systems

This article was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. FPGA-based frame grabbers are redefining multi-camera vision by enabling synchronized aggregation of up to eight GMSL streams for autonomous driving, robotics, and industrial automation. They overcome bandwidth and latency limits of USB and Ethernet by using PCIe

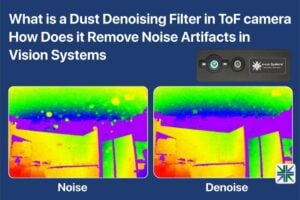

What is a Dust Denoising Filter in TOF Camera, and How Does it Remove Noise Artifacts in Vision Systems?

This article was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Time-of-Flight (ToF) cameras with IR sensors are susceptible to performance variations caused by environmental dust. This dust can create ‘dust noise’ in the output depth map, directly impacting camera accuracy and, consequently, the reliability of critical