Processors for Embedded Vision

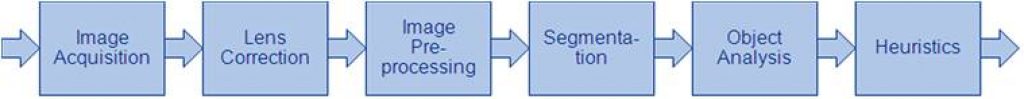

This technology category includes any device that executes vision algorithms or vision system control software. The following diagram shows a typical computer vision pipeline; processors are often optimized for the compute-intensive portions of the software workload.

The following examples represent distinctly different types of processor architectures for embedded vision, and each has advantages and trade-offs that depend on the workload. For this reason, many devices combine multiple processor types into a heterogeneous computing environment, often integrated into a single semiconductor component. In addition, a processor can be accelerated by dedicated hardware that improves performance on computer vision algorithms.

General-purpose CPUs

While computer vision algorithms can run on most general-purpose CPUs, desktop processors may not meet the design constraints of some systems. However, x86 processors and system boards can leverage the PC infrastructure for low-cost hardware and broadly supported software development tools. Several Alliance Member companies also offer devices that integrate a RISC CPU core. A general-purpose CPU is best suited for heuristics, complex decision-making, network access, user interface, storage management, and overall control. A general-purpose CPU may be paired with a vision-specialized device for better performance on pixel-level processing.

Graphics Processing Units

High-performance GPUs deliver massive parallel computing potential, and graphics processors can be used to accelerate the portions of the computer vision pipeline that perform parallel processing on pixel data. While general-purpose GPUs (GPGPUs) have primarily been used for high-performance computing (HPC), even mobile graphics processors and integrated graphics cores are gaining GPGPU capability, which brings them within the power constraints of a wider range of vision applications. In designs that require 3D processing in addition to embedded vision, a GPU will already be part of the system and can be used to assist a general-purpose CPU with many computer vision algorithms. Many examples exist of x86-based embedded systems with discrete GPGPUs.
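The pixel-parallel property described above can be illustrated with a minimal Python sketch. This is not GPU code; it only shows why such work is easy to offload: each output pixel depends on exactly one input pixel, so rows can be processed in any order or at the same time. The function names, the thread pool, and the integer luma weights (a common BT.601 approximation) are assumptions chosen for illustration.

```python
from concurrent.futures import ThreadPoolExecutor

def to_gray(pixel):
    """Convert one (R, G, B) pixel to an 8-bit luma value using integer math."""
    r, g, b = pixel
    return (299 * r + 587 * g + 114 * b) // 1000

def gray_row(row):
    """Process one row; no pixel reads any other pixel's result."""
    return [to_gray(p) for p in row]

def gray_serial(image):
    """Reference version: rows processed one after another."""
    return [gray_row(row) for row in image]

def gray_parallel(image, workers=4):
    """Same result, but rows are handed to a pool of workers.

    Rows are independent, so the scheduling order does not matter.
    A GPU applies the same idea at much finer granularity.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(gray_row, image))

# Hypothetical 2x2 test image.
image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]
print(gray_parallel(image) == gray_serial(image))
```

The thread pool here demonstrates independence of the work items rather than speed; in CPython it should not be expected to run faster than the serial version.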

Digital Signal Processors

DSPs are very efficient for processing streaming data, since the bus and memory architecture are optimized to process high-speed data as it traverses the system. This architecture makes DSPs an excellent solution for processing image pixel data as it streams from a sensor source. Many DSPs for vision have been enhanced with coprocessors that are optimized for processing video inputs and accelerating computer vision algorithms. The specialized nature of DSPs makes these devices inefficient for processing general-purpose software workloads, so DSPs are usually paired with a RISC processor to create a heterogeneous computing environment that offers the best of both worlds.
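As a rough illustration of processing pixel data as it streams from a sensor, the following Python sketch applies a 3x3 box filter while holding only three rows in memory at a time. It models the idea of a line buffer, not any particular DSP or vendor library; `stream_box3x3` and `sensor_rows` are hypothetical names, and the choice to skip border pixels is an assumption made to keep the example short.

```python
from collections import deque

def stream_box3x3(rows):
    """Yield 3x3 box-filtered rows while holding only three input rows.

    `rows` is any iterable of equal-length lists of ints, delivered top to
    bottom. Border pixels are skipped, so each output row is two pixels
    shorter than the input row.
    """
    window = deque(maxlen=3)  # line buffer: the oldest row drops out automatically
    for row in rows:
        window.append(row)
        if len(window) < 3:
            continue  # not enough rows yet to form a 3x3 neighborhood
        out = []
        for x in range(1, len(row) - 1):
            total = sum(r[x - 1] + r[x] + r[x + 1] for r in window)
            out.append(total // 9)
        yield out

def sensor_rows():
    """Stand-in for a sensor that produces one row at a time."""
    yield [0, 0, 0, 0]
    yield [0, 9, 9, 0]
    yield [0, 9, 9, 0]
    yield [0, 0, 0, 0]

for filtered in stream_box3x3(sensor_rows()):
    print(filtered)
```

Because the filter consumes a generator and yields results row by row, the full frame never has to be stored, which mirrors the streaming style of processing described above.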

Field Programmable Gate Arrays (FPGAs)

Instead of incurring the high cost and long lead times of a custom ASIC to accelerate computer vision systems, designers can implement an FPGA to offer a reprogrammable solution for hardware acceleration. With millions of programmable gates, hundreds of I/O pins, and compute performance in the trillions of multiply-accumulates per second (tera-MACs), high-end FPGAs offer the potential for the highest performance in a vision system. Unlike a CPU, which has to time-slice or multi-thread tasks as they compete for compute resources, an FPGA can simultaneously accelerate multiple portions of a computer vision pipeline. Because the parallel nature of FPGAs offers such an advantage for accelerating computer vision, many of the algorithms are available as optimized libraries from semiconductor vendors. These computer vision libraries also include preconfigured interface blocks for connecting to other vision devices, such as IP cameras.
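To put the multiply-accumulate figures above in context, a back-of-the-envelope estimate can help. The sketch below counts the MACs per second needed for a single dense 2D filter over a video stream. The workload (1080p monochrome at 60 frames per second with a 5x5 kernel) and the 1 tera-MAC/s budget are illustrative assumptions, not specifications of any device.

```python
def conv_macs_per_second(width, height, fps, kernel_size, channels=1):
    """Multiply-accumulates per second for one dense 2D convolution pass."""
    macs_per_pixel = kernel_size * kernel_size * channels
    return width * height * fps * macs_per_pixel

# Hypothetical workload: 1080p monochrome video at 60 frames/s, one 5x5 filter.
required = conv_macs_per_second(1920, 1080, 60, 5)
print(f"{required / 1e9:.2f} GMAC/s")

# Share of a nominal 1 tera-MAC/s budget (an illustrative figure only).
budget = 1e12
print(f"{100 * required / budget:.2f}% of 1 TMAC/s")
```

Under these assumptions a single filter uses a small fraction of a tera-MAC budget, which is consistent with the point that several pipeline stages can be accelerated at the same time.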

Vision-Specific Processors and Cores

Application-specific standard products (ASSPs) are specialized, highly integrated chips tailored for specific applications or application sets. ASSPs may incorporate a CPU, or use a separate CPU chip. By virtue of their specialization, ASSPs for vision processing typically deliver superior cost- and energy-efficiency compared with other types of processing solutions. Among other techniques, ASSPs deliver this efficiency through the use of specialized coprocessors and accelerators. And, because ASSPs are by definition focused on a specific application, they are usually provided with extensive associated software. This same specialization, however, means that an ASSP designed for vision is typically not suitable for other applications. ASSPs’ unique architectures can also make them more difficult to program than other kinds of processors; some ASSPs are not user-programmable.

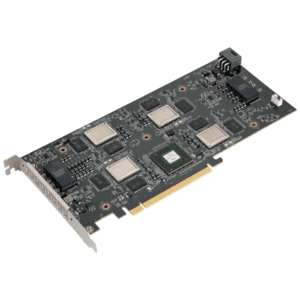

The New Metis PCIe with 4 Quad-Core AIPUs is Here

Big update from the Metis world: the epic new 4-core PCIe card is now available to order over on the store. Huge performance shift that opens up seriously scalable edge AI. Key specs: 856 TOPS (INT8); choice of 16GB and 64GB DRAM; ~12,800 FPS on ResNet-50; runs dozens of streams or parallel models per board

How Semiconductor Equipment Makers Will Drive the Next $1 Trillion Wave

This blog post was originally published at HCLTech’s website. It is reprinted here with the permission of HCLTech. Key takeaways: AI, mobility and cloud are the growth engines: They’re pushing chips toward a $1 trillion market by 2030 and forcing fabs to invest in sub-3nm nodes and advanced packaging. Chips are bigger and more complex: Larger dies and

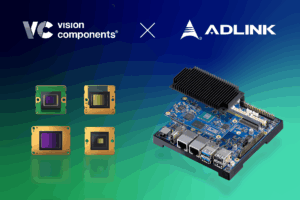

VC MIPI Cameras & ADLINK i.MX 8M Plus: Full Driver Support

Ettlingen, November 18, 2025 — For a medical imaging project, ADLINK wanted to integrate VC MIPI Cameras with its I-Pi SMARC IMX8M Plus Development Kit. Vision Components adapted the standard driver for the NXP i.MX 8M Plus processor platform to the ADLINK board, including full ISP support, for features such as color tuning etc. As

STMicroelectronics Introduces New Battery-Saving Wireless Microcontrollers Optimized for Remote Controls

Nov 18, 2025, Geneva, Switzerland. STM32WL3R MCUs add flexible features to low-power radio for superior user experiences in consumer electronics and home automation. Integrated in new RF remotes from building-automation leader Somfy. STMicroelectronics has introduced the STM32WL3R, a wireless microcontroller (MCU) optimized for remote control units in consumer products and home automation. Evolved from the proven STM32WL3

Trends in Embedded AI: Designing Hardware for Machine Learning on the Edge

This blog post was originally published at Tessolve’s website. It is reprinted here with the permission of Tessolve. The world is increasingly becoming connected, intelligent, and autonomous. At the core of this transformation is Artificial Intelligence (AI), which is swiftly transitioning from the cloud to the edge, nearer to where data is generated and actions are

Semiconductor Industry 2025: Worldwide Dynamics and China’s Strategic Rise Unveiled

This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. The market research & strategy consulting company, Yole Group, delivers a dual perspective on global semiconductor dynamics and China’s fast-evolving industry landscape. Key Takeaways Global semiconductor device revenues are set to reach

10 Tips for First-Pass Silicon Success in AI Chip Development

This blog post was originally published at Synopsys’s website. It is reprinted here with the permission of Synopsys. As the saying goes, with great risk comes great reward. Because of the profound complexity and intricate dependencies between hardware and software, developing a custom AI chip is one of the most capital-intensive and high-risk endeavors in

Upcoming Webinar Explores Real-time AI Sensor Fusion

On December 17, 2025, at 9:00 am PST (12:00 pm EST), Alliance Member companies e-con Systems, Lattice Semiconductor, and NVIDIA will deliver a joint webinar “Real-Time AI Sensor Fusion with e-con Systems’ Holoscan Camera Solutions using Lattice FPGA for NVIDIA Jetson Thor Platform.” From the event page: Join an exclusive webinar hosted by e-con Systems®,

The Benefits of System-in-Package

This blog post was originally published at Efinix’s website. It is reprinted here with the permission of Efinix. In the rapidly evolving world of microelectronics, there is a relentless push to reduce size and power consumption while increasing compute capability and throughput. Smart edge devices, the internet of things and now Edge AI have only

Enabling Autonomous Machines: Advancing 3D Sensor Fusion With Au-Zone

This blog post was originally published at NXP Semiconductors’ website. It is reprinted here with the permission of NXP Semiconductors. Smarter Perception at the Edge Dusty construction sites. Fog-covered fields. Crowded warehouses. Heavy rain. Uneven terrain. What does it take for an autonomous machine to perceive and navigate challenging real-world environments like these – reliably, in

Cadence Welcomes ChipStack

This article was originally published at Cadence’s website. It is reprinted here with the permission of Cadence. ChipStack, a leading startup providing agentic AI solutions for chip verification, and Cadence have announced an agreement for ChipStack to join the agentic AI team at Cadence. Founded by technologists with deep expertise in both AI and semiconductor

AI at the Edge: Low Power, High Stakes

This blog post was originally published at Woodside Capital’s website. It is reprinted here with the permission of Woodside Capital. Palo Alto – November 3, 2025 – Woodside Capital Partners (WCP) is pleased to release our Digital Advertising Quarterly Sector Update for Q3 2025, authored by senior bankers Alain Bismuth and George Jones. Introduction: Intelligence on the Edge Edge

Microchip Technology Unveils Model Context Protocol (MCP) Server to Power AI-Driven Product Data Access

Server enables access to trusted product information across AI platforms to simplify workflows, accelerate design and boost productivity CHANDLER, Ariz., November 6, 2025 — Further demonstrating its commitment to developing AI-enabled solutions for embedded engineers, Microchip Technology (Nasdaq: MCHP) has announced the launch of its Model Context Protocol (MCP) Server. An AI interface, the MCP Server connects directly with

Smarter Smartphone Photography: Unlocking the Power of Neural Camera Denoising with Arm SME2

This blog post was originally published at Arm’s website. It is reprinted here with the permission of Arm. Discover how SME2 brings flexible, high-performance AI denoising to mobile photography for sharper, cleaner low-light images. Every smartphone photographer has seen it. Images that look sharp in daylight but fall apart in dim lighting. This happens because

BrainChip Unveils Breakthrough AKD1500 Edge AI Co-Processor at Embedded World North America

Laguna Hills, Calif. — November 4th, 2025 — BrainChip Holdings Ltd (ASX: BRN, OTCQX: BRCHF, ADR: BCHPY), a global leader in ultra-low power, fully digital, event-based neuromorphic AI, today announced the launch of its AKD1500, a neuromorphic Edge AI accelerator co-processor chip, at Embedded World North America. Designed to deliver exceptional performance with minimal power consumption, the AKD1500 achieves 800 giga operations

Why Openness Matters for AI at the Edge

This blog post was originally published at Synaptics’ website. It is reprinted here with the permission of Synaptics. Openness across software, standards, and silicon is critical for ensuring interoperability, flexibility, and the growth of AI at the edge AI continues to migrate towards the edge and is no longer confined to the datacenter. Edge AI brings

NXP Completes Acquisitions of Aviva Links and Kinara to Advance Automotive Connectivity and AI at the Intelligent Edge

EINDHOVEN, The Netherlands, Oct. 28, 2025 (GLOBE NEWSWIRE) — NXP Semiconductors N.V. (NASDAQ: NXPI) has announced the completion of its acquisitions of Aviva Links, and Kinara. On October 24, 2025, NXP closed the previously announced acquisition of Aviva Links for $243 million in cash before closing adjustments. Aviva Links is a provider of Automotive SerDes Alliance (ASA)

Bringing Edge AI Performance to PyTorch Developers with ExecuTorch 1.0

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. ExecuTorch 1.0, an open source solution to training and inference on the Edge, becomes available to all developers Qualcomm Technologies contributed the ExecuTorch repository for developers to access Qualcomm® Hexagon™ NPU directly This streamlines the developer workflow

Axelera Announces Europa AIPU, Setting New Industry Benchmark for AI Accelerator Performance, Power Efficiency and Affordability

Delivers 629 TOPs processing power for multi-modal AI applications from the edge to the data center. Eindhoven, NL – October 21, 2025 – Axelera AI, the leading provider of purpose-built AI hardware acceleration technology, today announced Europa™, an AI processor unit (AIPU) that sets a new performance/price standard for multi-user generative AI and computer vision applications.

NVIDIA Contributes to Open Frameworks for Next-generation Robotics Development

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. At the ROSCon robotics conference, NVIDIA announced contributions to the ROS 2 robotics framework and the Open Source Robotics Alliance’s new Physical AI Special Interest Group, as well as the latest release of NVIDIA Isaac ROS. This