Vision Algorithms for Embedded Vision

Most computer vision algorithms were developed on general-purpose computer systems with software written in a high-level language. Some of the pixel-processing operations (e.g., spatial filtering) have changed very little in the decades since they were first implemented on mainframes. With today’s broader range of embedded vision implementations, existing high-level algorithms may not fit within system constraints, and new approaches may be needed to achieve the desired results.
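As an illustration of the kind of pixel-processing operation mentioned above, here is a minimal sketch of spatial filtering in plain Python. The function name `filter3x3`, the 3x3 mean kernel, and the tiny test image are assumptions chosen for the example; they are not taken from any particular library. Border pixels are simply copied, which is only one of several common border policies.

```python
def filter3x3(image, kernel):
    """Apply a 3x3 kernel to a 2D list of pixel values.

    This is a correlation (the kernel is not flipped); for symmetric
    kernels such as the mean filter below it equals convolution.
    Border pixels are copied unchanged.
    """
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            acc = 0.0
            for ky in range(3):
                for kx in range(3):
                    acc += kernel[ky][kx] * image[y + ky - 1][x + kx - 1]
            out[y][x] = acc
    return out


# 3x3 mean (box) kernel: every neighbor contributes equally.
BOX_KERNEL = [[1 / 9] * 3 for _ in range(3)]

# Hypothetical 5x5 grayscale image with one bright pixel in the center.
image = [
    [10, 10, 10, 10, 10],
    [10, 10, 10, 10, 10],
    [10, 10, 100, 10, 10],
    [10, 10, 10, 10, 10],
    [10, 10, 10, 10, 10],
]

smoothed = filter3x3(image, BOX_KERNEL)
```

The bright center pixel is spread across its neighborhood, so the center of `smoothed` drops to roughly 20 while the untouched border stays at 10. The four nested loops make the cost per pixel explicit, which is the quantity an embedded implementation typically has to reduce.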

Some of this innovation may involve replacing a general-purpose algorithm with a hardware-optimized equivalent. With such a broad range of processors for embedded vision, algorithm analysis will likely focus on ways to maximize pixel-level processing within system constraints.
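One way to picture a hardware-optimized equivalent is a 3x3 mean filter rewritten to use only integer additions, one multiply, and a shift. The sketch below is an assumption-laden illustration, not a vendor implementation: the name `box3x3_fixed_point`, the Q16 reciprocal constant, and the two-pass (separable) structure are choices made for this example, and it assumes 8-bit pixel values.

```python
RECIP_9_Q16 = 7282  # round(65536 / 9): reciprocal of 9 in Q16 fixed point


def box3x3_fixed_point(image):
    """3x3 mean of 8-bit pixels using two 1D passes and integer math only.

    Border pixels are copied unchanged.
    """
    height, width = len(image), len(image[0])

    # Pass 1: horizontal 3-tap sums for interior columns.
    row_sums = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(1, width - 1):
            row_sums[y][x] = image[y][x - 1] + image[y][x] + image[y][x + 1]

    # Pass 2: vertical 3-tap sums, then divide by 9 via multiply and shift.
    out = [row[:] for row in image]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            total = row_sums[y - 1][x] + row_sums[y][x] + row_sums[y + 1][x]
            # Adding 32768 (0.5 in Q16) rounds to the nearest integer.
            out[y][x] = (total * RECIP_9_Q16 + 32768) >> 16
    return out


# Hypothetical 5x5 8-bit image with one bright pixel in the center.
image = [
    [10, 10, 10, 10, 10],
    [10, 10, 10, 10, 10],
    [10, 10, 100, 10, 10],
    [10, 10, 10, 10, 10],
    [10, 10, 10, 10, 10],
]

smoothed = box3x3_fixed_point(image)
```

Splitting the filter into a horizontal and a vertical pass reduces the additions per output pixel, and replacing the division with a multiply and shift avoids a divider, which tends to be costly on small processors and in FPGA fabric. For sums of nine 8-bit pixels, this constant gives the same result as rounding the exact mean to the nearest integer.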

This section refers to both general-purpose operations (e.g., edge detection) and hardware-optimized versions (e.g., parallel adaptive filtering in an FPGA). Many sources exist for general-purpose algorithms. The Embedded Vision Alliance is one of the best industry resources for learning about algorithms that map to specific hardware, since Alliance members share this information directly with the vision community.
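For readers unfamiliar with edge detection as a general-purpose operation, the following is a minimal sketch using the Sobel operator in plain Python. The function name `sobel_magnitude` and the test image are hypothetical, and the gradient magnitude is approximated as |gx| + |gy| rather than the Euclidean norm, a simplification often chosen because it avoids a square root.

```python
def sobel_magnitude(image):
    """Approximate Sobel gradient magnitude (|gx| + |gy|) for a 2D list.

    Border pixels are set to 0 because the 3x3 window does not fit there.
    """
    height, width = len(image), len(image[0])
    out = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Horizontal gradient: right column minus left column.
            gx = (image[y - 1][x + 1] + 2 * image[y][x + 1] + image[y + 1][x + 1]) \
               - (image[y - 1][x - 1] + 2 * image[y][x - 1] + image[y + 1][x - 1])
            # Vertical gradient: bottom row minus top row.
            gy = (image[y + 1][x - 1] + 2 * image[y + 1][x] + image[y + 1][x + 1]) \
               - (image[y - 1][x - 1] + 2 * image[y - 1][x] + image[y - 1][x + 1])
            out[y][x] = abs(gx) + abs(gy)
    return out


# Hypothetical image with a vertical step edge between columns 1 and 2.
image = [
    [0, 0, 100, 100, 100],
    [0, 0, 100, 100, 100],
    [0, 0, 100, 100, 100],
    [0, 0, 100, 100, 100],
]

edges = sobel_magnitude(image)
```

The response is large in the two columns that straddle the step and zero in the flat region to the right of it. Because each output pixel depends only on its 3x3 neighborhood, the same computation can be evaluated for many pixels at once, which is what makes operations like this good candidates for parallel hardware.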

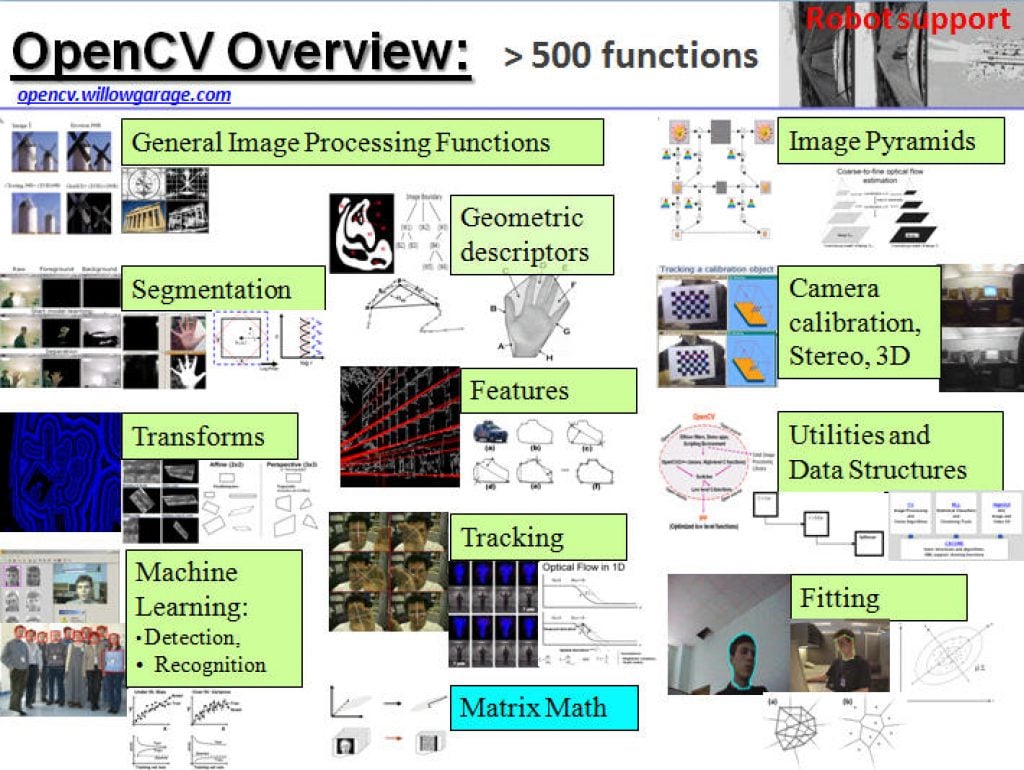

General-purpose computer vision algorithms

One of the most popular sources of computer vision algorithms is the OpenCV library. OpenCV is open source and written primarily in C++, with bindings for languages such as Python and Java. For more information, see the Alliance’s interview with OpenCV Foundation President and CEO Gary Bradski, along with other OpenCV-related materials on the Alliance website.

Hardware-optimized computer vision algorithms

Several programmable device vendors have created optimized versions of off-the-shelf computer vision libraries. NVIDIA, for example, works closely with the OpenCV community and has created algorithms that are accelerated by GPGPUs. MathWorks provides MATLAB functions/objects and Simulink blocks for many computer vision algorithms within its Computer Vision Toolbox, while also allowing vendors to create their own libraries of functions that are optimized for a specific programmable architecture. National Instruments offers its LabVIEW Vision module library. Xilinx is another vendor with an optimized computer vision library, which it provides to customers as Plug and Play IP cores for creating hardware-accelerated vision algorithms in an FPGA.

Other vision libraries

- Halcon

- Matrox Imaging Library (MIL)

- Cognex VisionPro

- VXL

- CImg

- Filters
