Vision Algorithms for Embedded Vision


Most computer vision algorithms were developed on general-purpose computer systems with software written in a high-level language. Some pixel-processing operations (e.g., spatial filtering) have changed very little in the decades since they were first implemented on mainframes. In today's much wider range of embedded vision implementations, however, existing high-level algorithms may not fit within the system's constraints (such as limited compute, memory, and power budgets), so new approaches are needed to achieve the desired results.
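
To make the idea of a classic pixel-processing operation concrete, here is a minimal Python sketch of a 3x3 spatial filter applied to a grayscale image stored as a list of rows. The function name `filter3x3`, the `BOX_BLUR` kernel, and the replicate-the-edge border policy are illustrative assumptions, not taken from any particular library.

```python
from typing import List

Image = List[List[float]]


def filter3x3(img: Image, kernel: List[List[float]]) -> Image:
    """Apply a 3x3 spatial filter (correlation) to a grayscale image.

    Borders are handled by clamping coordinates to the image edge
    (replicate padding), one of several common conventions.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for ky in range(-1, 2):
                for kx in range(-1, 2):
                    # Clamp neighbor coordinates so edge pixels are replicated.
                    yy = min(max(y + ky, 0), h - 1)
                    xx = min(max(x + kx, 0), w - 1)
                    acc += kernel[ky + 1][kx + 1] * img[yy][xx]
            out[y][x] = acc
    return out


# Hypothetical example kernel: a 3x3 box (mean) blur.
BOX_BLUR = [[1 / 9] * 3 for _ in range(3)]

if __name__ == "__main__":
    img = [[0, 0, 0, 0],
           [0, 9, 9, 0],
           [0, 9, 9, 0],
           [0, 0, 0, 0]]
    for row in filter3x3(img, BOX_BLUR):
        print([round(v, 2) for v in row])
```

This direct form performs nine floating-point multiply-accumulates per pixel, which is convenient on a desktop but can be costly on a small embedded processor.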

Some of this innovation may involve replacing a general-purpose algorithm with a hardware-optimized equivalent. Because embedded vision spans such a broad range of processors, algorithm analysis will likely focus on ways to maximize pixel-level processing within each system's constraints.
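
One common kind of hardware-oriented rewrite keeps the same filtering idea but restructures it for the target: integer arithmetic instead of floating point, a separable kernel, and a small line buffer instead of a full frame in memory. The sketch below assumes a 3x3 binomial (Gaussian-approximating) kernel, applying [1 2 1] horizontally and then vertically and normalizing by 16 with a right shift. The names `hpass` and `binomial3x3_stream` are hypothetical, and this is one illustrative technique, not the method of any specific vendor library.

```python
from collections import deque
from typing import Iterable, Iterator, List

Row = List[int]


def hpass(row: Row) -> Row:
    """Horizontal [1 2 1] pass with replicated borders (integers only)."""
    w = len(row)
    return [row[max(x - 1, 0)] + 2 * row[x] + row[min(x + 1, w - 1)]
            for x in range(w)]


def _vpass(top: Row, mid: Row, bot: Row) -> Row:
    """Vertical [1 2 1] pass; +8 then >> 4 divides by 16 with rounding."""
    return [(a + 2 * b + c + 8) >> 4 for a, b, c in zip(top, mid, bot)]


def binomial3x3_stream(rows: Iterable[Row]) -> Iterator[Row]:
    """Stream a 3x3 binomial blur over image rows in raster order.

    Only three horizontally filtered rows are kept (a "line buffer"),
    so no floating point or full-frame storage is needed. Output lags
    input by one row.
    """
    buf: deque = deque(maxlen=3)
    for row in rows:
        h = hpass(row)
        if not buf:
            buf.append(h)  # replicate the top border row
        buf.append(h)      # maxlen=3 drops the oldest row automatically
        if len(buf) == 3:
            yield _vpass(*buf)
    if buf:
        # Flush: replicate the bottom border row for the final output row.
        buf.append(buf[-1])
        yield _vpass(*buf)


if __name__ == "__main__":
    for out_row in binomial3x3_stream([[0, 0, 0], [0, 16, 0], [0, 0, 0]]):
        print(out_row)
```

For example, `list(binomial3x3_stream([[0, 0, 0], [0, 16, 0], [0, 0, 0]]))` spreads the single bright pixel into `[[1, 2, 1], [2, 4, 2], [1, 2, 1]]`. Because each output row depends only on the current and two previous filtered rows, this structure can map well onto a pipelined FPGA or DSP design in which pixels arrive from the sensor in raster order.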

This section refers to both general-purpose operations (e.g., edge detection) and hardware-optimized versions (e.g., parallel adaptive filtering in an FPGA). Many sources exist for general-purpose algorithms. The Embedded Vision Alliance is one of the best industry resources for learning about algorithms that map to specific hardware, since Alliance Members share this information directly with the vision community.
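
As an example of a general-purpose operation, edge detection is often introduced with the Sobel operator. The following is a minimal Python sketch, assuming an integer grayscale image and a caller-chosen `threshold` (a hypothetical parameter). It approximates the gradient magnitude as |Gx| + |Gy| rather than sqrt(Gx^2 + Gy^2), a simplification often used in fixed-point and hardware designs to avoid a square root. Border pixels are simply left unmarked.

```python
from typing import List

Image = List[List[int]]

# Standard 3x3 Sobel kernels for horizontal (x) and vertical (y) gradients.
SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))


def sobel_edges(img: Image, threshold: int) -> Image:
    """Return a binary edge map (1 = edge) using the Sobel operator.

    Gradient magnitude is approximated by |Gx| + |Gy|. Border pixels,
    which lack a full 3x3 neighborhood, are left as 0.
    """
    h, w = len(img), len(img[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for ky in range(3):
                for kx in range(3):
                    p = img[y + ky - 1][x + kx - 1]
                    gx += SOBEL_X[ky][kx] * p
                    gy += SOBEL_Y[ky][kx] * p
            edges[y][x] = 1 if abs(gx) + abs(gy) >= threshold else 0
    return edges


if __name__ == "__main__":
    step = [[0, 0, 100, 100, 100] for _ in range(5)]
    for row in sobel_edges(step, threshold=200):
        print(row)
```

On a 5x5 image whose left two columns are 0 and right three columns are 100, a threshold of 200 marks columns 1 and 2 of the interior rows, the pixels that straddle the intensity step.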

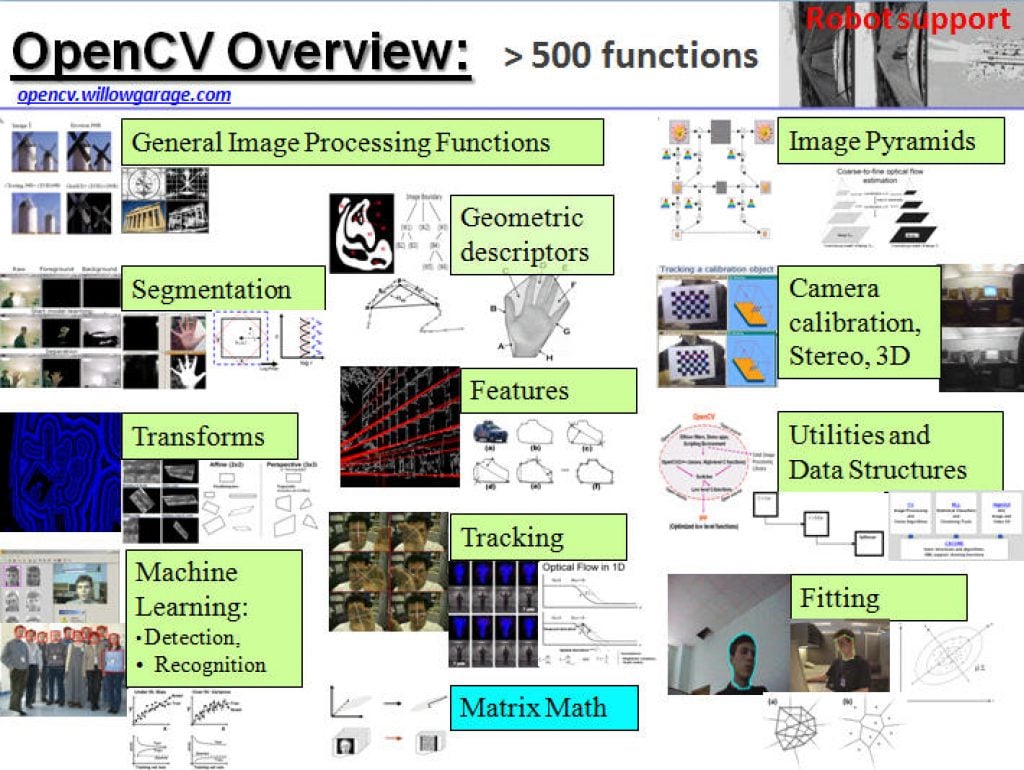

General-purpose computer vision algorithms

One of the most popular sources of computer vision algorithms is the OpenCV Library. OpenCV is open-source; it was originally written in C, and its primary interface is now C++, with bindings for other languages such as Python. For more information, see the Alliance's interview with OpenCV Foundation President and CEO Gary Bradski, along with other OpenCV-related materials on the Alliance website.

Hardware-optimized computer vision algorithms

Several programmable device vendors have created optimized versions of off-the-shelf computer vision libraries. NVIDIA, for example, works closely with the OpenCV community and has created GPU-accelerated versions of computer vision algorithms using general-purpose GPU (GPGPU) computing. MathWorks provides MATLAB functions and objects, along with Simulink blocks, for many computer vision algorithms in its Computer Vision System Toolbox, while also allowing vendors to create their own libraries of functions optimized for a specific programmable architecture. National Instruments offers its LabVIEW vision module library. Xilinx is another example: it provides customers with an optimized computer vision library as plug-and-play IP cores for creating hardware-accelerated vision algorithms in an FPGA.

Other vision libraries

- Halcon

- Matrox Imaging Library (MIL)

- Cognex VisionPro

- VXL

- CImg

- Filters
