Vision Algorithms for Embedded Vision

Most computer vision algorithms were developed on general-purpose computer systems, with software written in a high-level language. Some pixel-processing operations (e.g., spatial filtering) have changed very little in the decades since they were first implemented on mainframes. In today’s broader range of embedded vision implementations, existing high-level algorithms may not fit within the system constraints, requiring innovation to achieve the desired results.
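As an illustration of the kind of pixel-processing operation described above, the following is a minimal sketch of a 3x3 spatial filter in plain Python. The function name `filter3x3`, the `BOX_BLUR` kernel, and the choice to copy border pixels unchanged are assumptions made for this example; production libraries use different border policies and far faster implementations.

```python
def filter3x3(image, kernel):
    """Apply a 3x3 kernel to a 2D list of numbers.

    The kernel is applied without flipping (correlation); for symmetric
    kernels such as a box blur this equals convolution. Border pixels are
    copied through unchanged, which is an assumption of this sketch.
    """
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            acc = 0
            for ky in range(3):
                for kx in range(3):
                    acc += kernel[ky][kx] * image[y + ky - 1][x + kx - 1]
            out[y][x] = acc
    return out


# Hypothetical smoothing kernel: every neighbour contributes equally.
BOX_BLUR = [[1 / 9] * 3 for _ in range(3)]

# A single bright pixel in the middle of a dark 5x5 image.
image = [
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 9, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
]
blurred = filter3x3(image, BOX_BLUR)
```

The bright pixel is spread evenly over its 3x3 neighbourhood. The nested loops make the cost visible: nine multiply-accumulate operations per output pixel, which is the workload an embedded target has to sustain at frame rate.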

Some of this innovation may involve replacing a general-purpose algorithm with a hardware-optimized equivalent. With such a broad range of processors for embedded vision, algorithm analysis will likely focus on ways to maximize pixel-level processing within system constraints.
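One common example of such a replacement, offered here as an illustrative sketch rather than a method taken from any particular vendor, is splitting a 2D box filter into two 1D passes. The result is identical, but each pass reads only three pixels per output, and integer-only arithmetic maps well to fixed-point hardware. The function names below are hypothetical.

```python
def box_sum_direct(image):
    """3x3 neighbourhood sum at each interior pixel: 9 reads per output."""
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            out[y][x] = sum(
                image[y + dy][x + dx] for dy in (-1, 0, 1) for dx in (-1, 0, 1)
            )
    return out


def box_sum_separable(image):
    """Same sums computed as a horizontal pass followed by a vertical pass."""
    h, w = len(image), len(image[0])
    # Horizontal pass: each pixel plus its left and right neighbours.
    rows = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(1, w - 1):
            rows[y][x] = image[y][x - 1] + image[y][x] + image[y][x + 1]
    # Vertical pass: add the row sums above, at, and below each pixel.
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            out[y][x] = rows[y - 1][x] + rows[y][x] + rows[y + 1][x]
    return out


# Small synthetic integer image used only to compare the two versions.
test_image = [[(3 * y + 5 * x) % 7 for x in range(6)] for y in range(5)]
direct = box_sum_direct(test_image)
separable = box_sum_separable(test_image)
```

Both functions leave border pixels at zero. Dividing the sums by 9 (or shifting, for power-of-two kernel sizes) turns them into a mean filter.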

This section covers both general-purpose operations (e.g., edge detection) and hardware-optimized versions (e.g., parallel adaptive filtering in an FPGA). Many sources exist for general-purpose algorithms. The Embedded Vision Alliance is one of the best industry resources for learning about algorithms that map to specific hardware, since Alliance Members share this information directly with the vision community.
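For readers unfamiliar with edge detection, here is a minimal sketch using the standard Sobel kernels in plain Python. The function name `sobel_magnitude` is hypothetical, and the gradient magnitude is approximated as |gx| + |gy| instead of the Euclidean norm, a simplification often chosen on hardware to avoid a square root.

```python
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # responds to vertical edges
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # responds to horizontal edges


def sobel_magnitude(image):
    """Approximate gradient magnitude |gx| + |gy|; border pixels are left at 0."""
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for ky in range(3):
                for kx in range(3):
                    p = image[y + ky - 1][x + kx - 1]
                    gx += SOBEL_X[ky][kx] * p
                    gy += SOBEL_Y[ky][kx] * p
            out[y][x] = abs(gx) + abs(gy)
    return out


# Synthetic image with a vertical step edge between columns 2 and 3.
step = [[0, 0, 0, 10, 10, 10] for _ in range(5)]
edges = sobel_magnitude(step)
```

The response is nonzero only in the two columns that straddle the step, and zero in the flat regions on either side.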

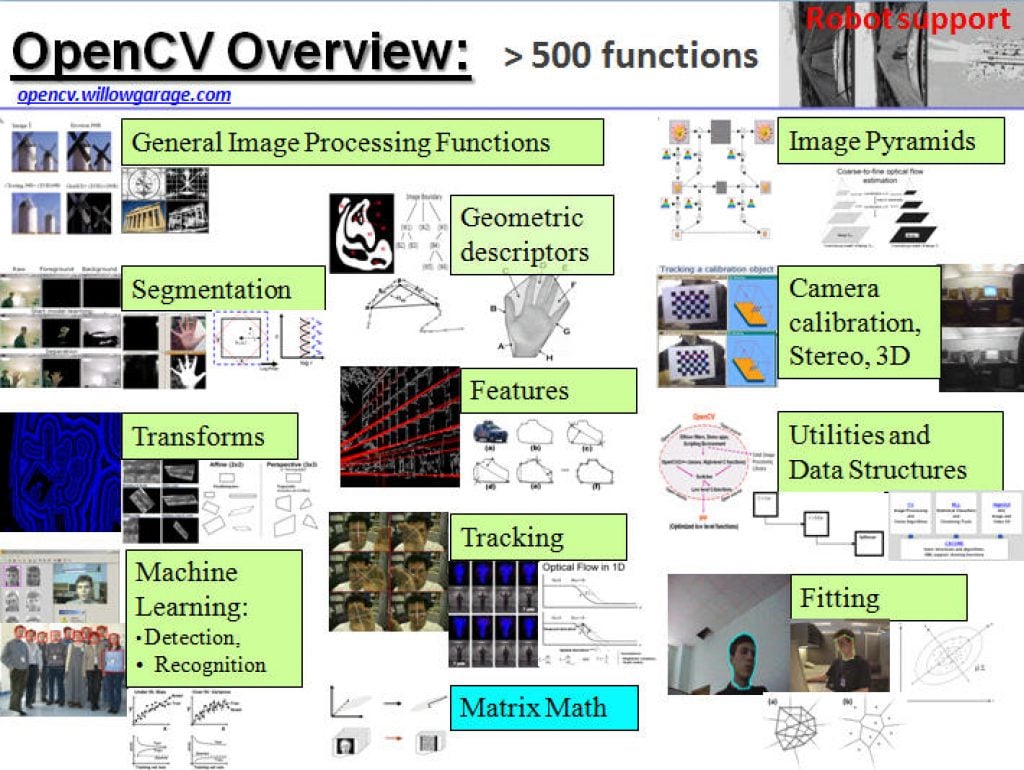

General-purpose computer vision algorithms

One of the most popular sources of computer vision algorithms is the OpenCV library. OpenCV is open source and written primarily in C++, with bindings for other languages such as Python and Java; its original C interface is now considered legacy. For more information, see the Alliance’s interview with OpenCV Foundation President and CEO Gary Bradski, along with other OpenCV-related materials on the Alliance website.

Hardware-optimized computer vision algorithms

Several programmable device vendors have created optimized versions of off-the-shelf computer vision libraries. NVIDIA, for example, works closely with the OpenCV community and has created algorithms that are accelerated by GPGPUs. MathWorks provides MATLAB functions/objects and Simulink blocks for many computer vision algorithms within its Computer Vision Toolbox (formerly Computer Vision System Toolbox), while also allowing vendors to create their own libraries of functions that are optimized for a specific programmable architecture. National Instruments offers its LabVIEW Vision module library. Xilinx is another vendor with an optimized computer vision library, which it provides to customers as plug-and-play IP cores for creating hardware-accelerated vision algorithms in an FPGA.

Other vision libraries

- Halcon

- Matrox Imaging Library (MIL)

- Cognex VisionPro

- VXL

- CImg

