Vision Algorithms for Embedded Vision


Most computer vision algorithms were developed on general-purpose computer systems, with software written in a high-level language. Some of the pixel-processing operations (e.g., spatial filtering) have changed very little in the decades since they were first implemented on mainframes. In today’s broader range of embedded vision implementations, existing high-level algorithms may not fit within the system constraints, and innovation is required to achieve the desired results.
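As an illustration of the kind of pixel-processing operation mentioned above, here is a minimal sketch of a 3x3 spatial filter in plain Python. The function name `filter3x3`, the `BOX_KERNEL` constant, and the choice to leave border pixels unchanged are assumptions made for this example; they are not taken from any particular library.

```python
from typing import List

Image = List[List[int]]  # grayscale image as rows of pixel intensities


def filter3x3(image: Image, kernel: List[List[int]], divisor: int = 1) -> Image:
    """Apply a 3x3 kernel to every interior pixel of a grayscale image.

    Border pixels are copied unchanged. This is an assumption of the
    sketch; zero padding or edge replication are equally common choices.
    """
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]  # copy so the input is not modified
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            acc = 0
            # Weighted sum over the 3x3 neighborhood centered on (x, y).
            for ky in range(3):
                for kx in range(3):
                    acc += kernel[ky][kx] * image[y + ky - 1][x + kx - 1]
            out[y][x] = acc // divisor
    return out


# 3x3 mean (box) filter: all weights 1, normalized by dividing by 9.
BOX_KERNEL = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]

if __name__ == "__main__":
    noisy = [
        [10, 10, 10, 10],
        [10, 100, 10, 10],
        [10, 10, 10, 10],
    ]
    # The bright outlier at (1, 1) is averaged with its neighbors.
    print(filter3x3(noisy, BOX_KERNEL, divisor=9))
```

Each output pixel costs nine multiply-accumulate operations here, which is why per-pixel work of this kind tends to dominate the budget on a constrained embedded processor.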

Some of this innovation may involve replacing a general-purpose algorithm with a hardware-optimized equivalent. Because the range of processors used for embedded vision is so broad, algorithm analysis will likely focus on ways to maximize pixel-level processing within system constraints.
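One simple way to picture such a replacement is restructuring a filter so that it does less work per pixel while producing the same result. The sketch below compares a direct 3x3 neighborhood sum with a separable two-pass version. It is only an illustration under assumed names (`box_sum_direct`, `box_sum_separable`); it does not represent any vendor's implementation, and real hardware-optimized versions depend heavily on the target architecture.

```python
from typing import List

Image = List[List[int]]  # grayscale image as rows of pixel intensities


def box_sum_direct(image: Image) -> Image:
    """3x3 neighborhood sum computed directly: 9 reads per output pixel."""
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]  # border pixels are left at 0
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            out[y][x] = sum(
                image[y + dy][x + dx] for dy in (-1, 0, 1) for dx in (-1, 0, 1)
            )
    return out


def box_sum_separable(image: Image) -> Image:
    """The same sum as two 1-D passes: 3 reads per pixel in each pass."""
    h, w = len(image), len(image[0])
    # Horizontal pass: add each pixel to its left and right neighbors.
    rows = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(1, w - 1):
            rows[y][x] = image[y][x - 1] + image[y][x] + image[y][x + 1]
    # Vertical pass: add each row sum to the row sums above and below it.
    out = [[0] * w for _ in range(h)]  # border pixels are left at 0
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            out[y][x] = rows[y - 1][x] + rows[y][x] + rows[y + 1][x]
    return out


if __name__ == "__main__":
    image = [
        [1, 2, 3, 4, 5],
        [6, 7, 8, 9, 10],
        [11, 12, 13, 14, 15],
        [16, 17, 18, 19, 20],
    ]
    # Both versions agree on every pixel.
    assert box_sum_direct(image) == box_sum_separable(image)
    print(box_sum_separable(image))
```

The separable form reads six values per output pixel instead of nine, and the saving grows with kernel size. The two 1-D passes also map naturally onto streaming or parallel hardware, although the actual benefit depends on the memory architecture of the device.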

This section refers to both general-purpose operations (e.g., edge detection) and hardware-optimized versions (e.g., parallel adaptive filtering in an FPGA). Many sources exist for general-purpose algorithms. The Embedded Vision Alliance is one of the best industry resources for learning about algorithms that map to specific hardware, since Alliance Members share this information directly with the vision community.

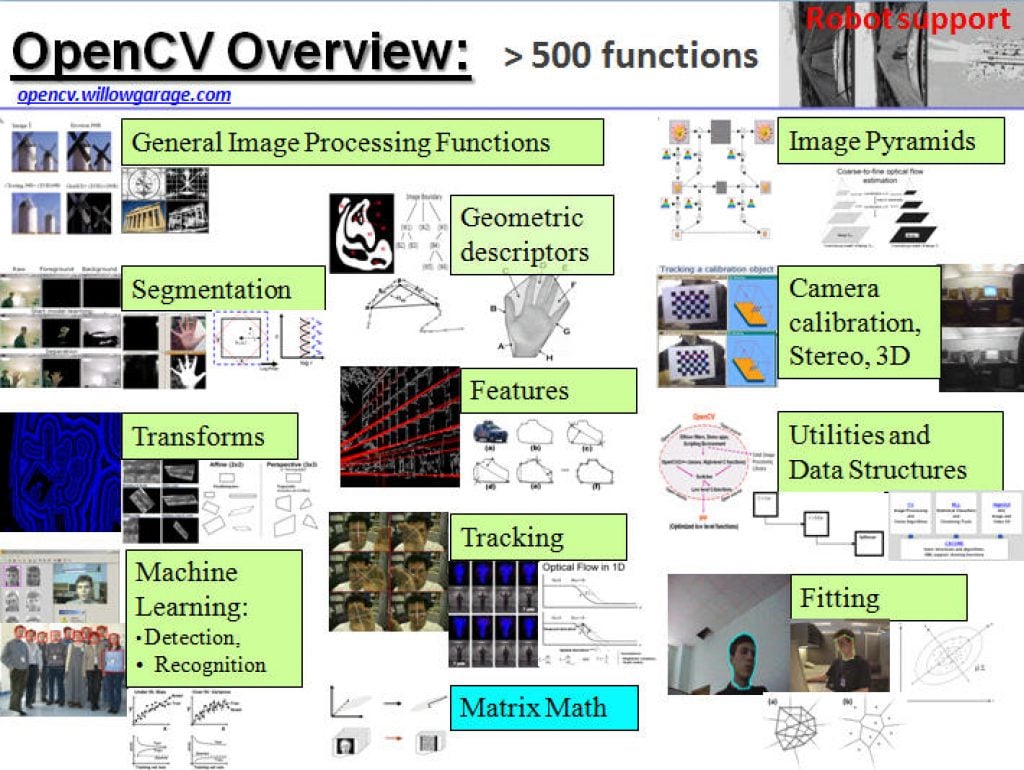

General-purpose computer vision algorithms

One of the most popular sources of computer vision algorithms is the OpenCV Library. OpenCV is open source and written primarily in C++, with bindings for other languages such as Python and Java. For more information, see the Alliance’s interview with OpenCV Foundation President and CEO Gary Bradski, along with other OpenCV-related materials on the Alliance website.

Hardware-optimized computer vision algorithms

Several programmable device vendors have created optimized versions of off-the-shelf computer vision libraries. NVIDIA, for example, works closely with the OpenCV community and has created algorithms that are accelerated by GPGPUs. MathWorks provides MATLAB functions/objects and Simulink blocks for many computer vision algorithms within its Computer Vision Toolbox, while also allowing vendors to create their own libraries of functions that are optimized for a specific programmable architecture. National Instruments offers its LabVIEW Vision module library. Xilinx is another vendor with an optimized computer vision library, which it provides to customers as Plug and Play IP cores for creating hardware-accelerated vision algorithms in an FPGA.

Other vision libraries

- Halcon

- Matrox Imaging Library (MIL)

- Cognex VisionPro

- VXL

- CImg

- Filters

Improving Perception with Multiple Radar Sensors

This blog post was originally published by D3. It is reprinted here with the permission of D3. There are variety of applications where spatial sensing is beneficial. Applications such as automotive parking, robotics collision avoidance, and even people counting and tracking can benefit from detecting objects in the environment either around a vehicle or within

Free Webinar Explores How to Accelerate Edge AI Development With Microservices For NVIDIA Jetson

On March 5, 2024 at 8 am PT (11 am ET), NVIDIA senior product manager Chintan Shah will present the free hour webinar “Accelerate Edge AI Development With Microservices For NVIDIA Jetson,” organized by the Edge AI and Vision Alliance. Here’s the description, from the event registration page: Building vision AI applications for the edge

The Generative AI Economy: Worth Up to $7.9T

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. The potential for generative AI is unlimited Generative artificial intelligence (AI) is so powerful, it’s impacting aspects of the human condition, driving innovation across industries, creating new consumer and enterprise applications, and stimulating revenue growth. And as

Outsight and PreAct Technologies Partner to Break New Ground in People Flow Monitoring

New affordable high-resolution technology for smart infrastructure applications set to disrupt LiDAR market January 9, 2024 – Outsight, the leading innovator in 3D Spatial AI Software Solutions, has joined forces with PreAct Technologies, a trailblazer in near-field flash LiDAR technology, to revolutionize the fields of Smart Infrastructure and People Flow Monitoring. PreAct’s unique approach has

Outsight Attains ISO 27001 Certification

We are thrilled to announce the achievement of ISO 27001 Certification, marking a commitment to top-tier information security. January 8, 2024 – We’re proud to announce the attainment of ISO 27001 Certification! This certification marks an important moment in our commitment to providing rock-solid processes and an Information Security Management Solution (ISMS) that goes beyond

Improving Productivity with AI

This blog post was originally published at Digica’s website. It is reprinted here with the permission of Digica. Application of Computer Vision in the Industrial Sector Inventory management is a key process for all industrial companies, but the inventory process is both time-consuming and error-prone. Mistakes can be very costly, and it is highly undesirable

The State of AI and Where It’s Heading in 2024

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. An interview with Qualcomm Technologies’ SVP, Durga Malladi, about AI benefits, challenges, use cases and regulations It’s an exciting and dynamic time as the adoption of on-device generative artificial intelligence (AI) grows, driving the democratization of AI

Feeding the World with AI: A Study In the Use of Synthetic Data

This blog post was originally published at Digica’s website. It is reprinted here with the permission of Digica. Increasing yields has been a key goal for farmers since the dawn of agriculture. People have continually looked for ways to maximise food production from the land available to them. Until recently, land management techniques such as

Announcing NVIDIA Metropolis Microservices for Jetson for Rapid Edge AI Development

Building vision AI applications for the edge often comes with notoriously long and costly development cycles. At the same time, quickly developing edge AI applications that are cloud-native, flexible, and secure has never been more important. Now, a powerful yet simple API-driven edge AI development workflow is available with the new NVIDIA Metropolis microservices. NVIDIA

Build Vision AI Applications at the Edge with NVIDIA Metropolis Microservices and APIs

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. NVIDIA Metropolis microservices provide powerful, customizable, cloud-native APIs and microservices to develop vision AI applications and solutions. The framework now includes NVIDIA Jetson, enabling developers to quickly build and productize performant and mature vision AI applications at

Advancing Perception Across Modalities with State-of-the-art AI

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. Qualcomm AI Research papers at ICCV, Interspeech and more The last few months have been exciting for the Qualcomm AI Research team, with the opportunity to present our latest papers and artificial intelligence (AI) demos at conferences

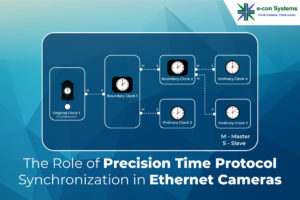

The Role of Precision Time Protocol Synchronization in Ethernet Cameras

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Ethernet cameras rely on precise timing and synchronization for accurate data transmission. The Precision Time Protocol (PTP) ensures sub-microsecond accuracy, synchronizing time clocks among IP-connected devices. Gain expert insights into how precise timing and synchronization

How to Find the Best “Food” for Your AI Model

This blog post was originally published at Digica’s website. It is reprinted here with the permission of Digica. When you want to take the best possible care of your brain, certain things are recommended, such as fatty fish, vegetables, doing some brain exercises, and learning new things. But what about artificial neural networks? Of course,

The US$1.6 Trillion Future of the Automotive Tech Opportunity

Autonomous driving, electric vehicles, connected and software-defined vehicles, and in-cabin monitoring are all megatrends reshaping the automotive industry. Together, these technologies combine to form a US$1.6 trillion opportunity by 2034, nearly a 10-fold increase compared to 2023. The new report from IDTechEx, “Future Automotive Technologies 2024-2034: Applications, Megatrends, Forecasts“, brings together a portfolio of IDTechEx’s

Fast-track Computer Vision Deployments with NVIDIA DeepStream and Edge Impulse

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. AI-based computer vision (CV) applications are increasing, and are particularly important for extracting real-time insights from video feeds. This revolutionary technology empowers you to unlock valuable information that was once impossible to obtain without significant operator intervention,

The Positive Social Impact of AI

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. Harnessing AI for the greater good and to improve our world Artificial intelligence (AI) has the potential to improve productivity, boost creativity and enhance the human experience. Although AI may create a sense of uncertainty about the