LETTER FROM THE EDITOR

Dear Colleague,

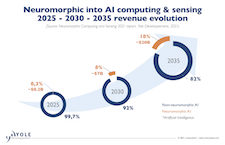

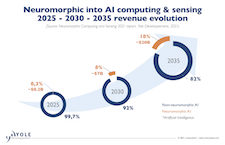

On November 16 at 9 am PT, Yole Développement will deliver the free webinar “Neuromorphic Sensing and Computing: Compelling Options for a Host of AI Applications” in partnership with the Edge AI and Vision Alliance. AI is transforming the way we design processors and accompanying sensors, as well as how we develop systems based on them. Deep learning, currently the predominant paradigm, relies on ever-larger neural networks, initially built with vast amounts of training data and subsequently fed large sets of inference data from image and other sensors. This brute-force approach is increasingly limited by cost, size, power consumption and other constraints. Today’s AI accelerators are already highly optimized, with next-generation gains potentially coming from near- or in-memory compute… but what comes after that? Brain-inspired, asynchronous, energy-efficient neuromorphic sensing and computing aspires to be the long-term solution for a host of AI applications. Yole Développement analysts Pierre Cambou and Adrien Sanchez will begin with an overview of neuromorphic sensing and computing concepts, followed by a review of target applications. Next, they will evaluate the claims made by neuromorphic technology developers and product suppliers, comparing and contrasting them with the capabilities and constraints of mainstream approaches, both now and as both technologies evolve. For more information and to register, please see the event page.

And on December 9 at 9 am PT, BrainChip will deliver the free webinar “Developing Intelligent AI Everywhere with BrainChip’s Akida” in partnership with the Edge AI and Vision Alliance. Implementing “smart” sensors at the edge using traditional machine learning is extremely challenging, requiring real-time processing while meeting both low-power and low-latency requirements. BrainChip’s Akida neural processing unit (NPU) brings intelligent AI to the edge with ease. The BrainChip Akida NPU uses advanced neuromorphic computing as its processing “engine”. Akida addresses critical requirements such as privacy, security, latency and power consumption, delivering key features such as one-shot learning and on-device computing with no “cloud” dependencies. With Akida, BrainChip is meeting next-generation demands with efficient, effective and straightforward AI functionality. In this session, you’ll learn how to easily develop efficient AI for edge devices by implementing Akida either as IP in your SoC or as standalone silicon. For more information and to register, please see the event page.

Brian Dipert

Editor-in-Chief, Edge AI and Vision Alliance

DEVELOPMENT TOOL INNOVATIONS

The Data-Driven Engineering Revolution

In this talk, IoT industry pioneer and Edge Impulse co-founder and CEO Zach Shelby shares insights about how machine learning is revolutionizing embedded engineering. Advances in silicon and deep learning are enabling embedded machine learning (TinyML) to be deployed where data is born, from industrial sensor data to audio and video. Shelby explains the new paradigm of data-driven engineering with ML, showing how developers are using data instead of code to drive algorithm innovation. To support widespread deployment, ML workloads need to run on embedded computing targets from MCUs to GPUs, with MLOps processes to support efficient development and deployment. Industrial, logistics and health markets are particularly ripe to deploy this data-driven approach, and Shelby highlights several exciting case studies.

Accelerating Edge AI Solution Development with Pre-validated Hardware-Software Kits

When developing a new edge AI solution, you want to focus on your system’s unique functionality. In this presentation, Daniel Tsui, Foundational Developer Kit Product Manager at Intel, presents the various foundational developer kits available from Intel’s partners to help speed your edge AI solution development. These kits include industrial-grade hardware that’s ready to deploy and can be purchased easily using a credit card. These systems come with Intel’s Edge Insights for Vision software package, a set of pre-validated software modules for orchestration and cloud support, and the Intel Distribution of OpenVINO toolkit for computer vision and deep learning applications. Watch and learn how these robust, pre-validated hardware and software resources can accelerate the development of your edge AI solution.

AI EVOLUTIONS AND REVOLUTIONS

What We Need to Transform Lives and Industries with On-Device AI, Cloud and 5G

Every day, AI-enabled systems enhance how we live and work, and we’ve barely scratched the surface! These systems are poised to create new industries and dramatically improve the effectiveness, efficiency and safety of existing ones. In many cases, AI functionality will run at the edge (on small, inexpensive, battery-powered devices), creating an inexhaustible appetite for efficient AI processing. Qualcomm is answering this demand with leading-edge AI processing and 5G connectivity features in its latest processor, the Snapdragon 888. In this talk, Ziad Asghar, Vice President of Product Management at Qualcomm, highlights key advances in the Snapdragon 888 and the capabilities they enable for emerging use cases in cloud-connected edge AI.

Software-Defined Cameras for Edge Computing of the Future

Computer-vision-enabled cameras have demonstrated the potential to bring compelling functionality to numerous applications. But to realize the full potential and assist with the growth of AI-enabled cameras, it’s necessary to drastically simplify the work of developing, deploying and maintaining these cameras. Hardware and firmware standards are key to accomplishing this. In this presentation, Parag Beeraka, Head of the Smart Camera and Vision Business at Arm, introduces Arm’s vision for a set of hardware and software standards addressing four key elements of software-defined cameras: security, machine learning, cloud enablement and software portability. For example, smart camera machine learning workloads can run on a variety of processing engines. Common frameworks are needed so that these workloads can be seamlessly and efficiently mapped onto the available processing engines without requiring that the camera developer delve into processing engine details. Similarly, most smart cameras are starting to rely on cloud services for storage as well as model and software updates. Standardizing interfaces to these key elements will give smart camera developers the ability to quickly integrate the cloud services best matched to their needs, without having to master the details of those elements.

UPCOMING INDUSTRY EVENTS

Neuromorphic Sensing and Computing: Compelling Options for a Host of AI Applications – Yole Développement Webinar: November 16, 2021, 9:00 am PT

Developing Intelligent AI Everywhere with BrainChip’s Akida – BrainChip Webinar: December 9, 2021, 9:00 am PT

FEATURED NEWS

Syntiant’s TinyML Platform Brings AI Development to Everyone, Everywhere

The Latest Version of Lattice Semiconductor’s mVision Solution Stack Enables 4K Video Processing at Low Power Consumption for Embedded Vision Applications

Teledyne e2v Introduces Compact 2MP & 1.5MP CMOS Sensors Featuring a Low-noise Global Shutter Pixel

Synopsys Expands its Processor IP Product Line with Additional ARC DSP IP Solutions for Low-Power Embedded SoCs

GrAI Matter Labs Is Partnering with Fellow Alliance Member Companies ADLINK Technology (on a Platform Based on ADLINK’s SMARC I-Pi Development Kits and GML’s Vision Inference Processor) and FRAMOS (on AI for 3D Industrial Vision), and with EZ-WHEEL on an AI-powered Wheel Drive for Autonomous Mobile Robots

EDGE AI AND VISION PRODUCT OF THE YEAR WINNER SHOWCASE

BlinkAI Technologies Night Video (Best Edge AI Software or Algorithm)

BlinkAI Technologies’ Night Video is the 2021 Edge AI and Vision Product of the Year Award winner in the Edge AI Software and Algorithms category. Night Video is the world’s first AI-based night video solution for smartphones. BlinkAI’s deep learning software elevates the low-light performance of smartphones’ native camera hardware, bringing unprecedented detail and vibrancy to nighttime video. BlinkAI’s proprietary solution instantly enhances each short-exposure, high-noise video frame using a unique spatiotemporal neural network that is exceptionally power efficient, consuming only 250 mW when deployed on neural network hardware accelerators. By enabling any camera to see at 5-10x lower illumination than previously possible, Night Video also dramatically improves downstream computer vision tasks such as object detection in low-light conditions. Beyond the smartphone market, BlinkAI is also actively collaborating with leading companies in the automotive, robotics and surveillance industries that are interested in applying Night Video to their computer vision tasks.

Please see here for more information on BlinkAI Technologies’ Night Video. The Edge AI and Vision Product of the Year Awards celebrate the innovation of the industry’s leading companies that are developing and enabling the next generation of edge AI and computer vision products. Winning a Product of the Year award recognizes a company’s leadership in edge AI and computer vision as evaluated by independent industry experts.