Qualcomm to Acquire Arduino—Accelerating Developers’ Access to its Leading Edge Computing and AI

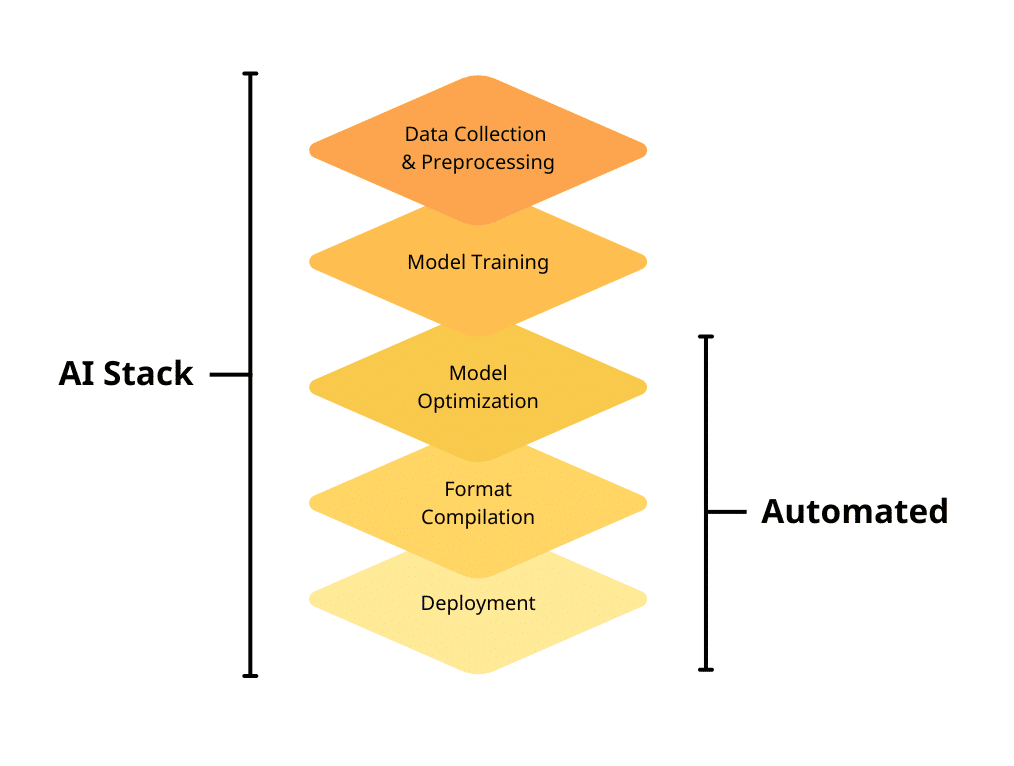

New Arduino UNO Q and Arduino App Lab to Enable Millions of Developers with the Power of Qualcomm Dragonwing Processors

Highlights:

- Acquisition to combine Qualcomm’s leading-edge products and technologies with Arduino’s vast ecosystem and community to empower businesses, students, entrepreneurs, tech professionals, educators and enthusiasts to quickly and easily bring ideas to life.
- New Arduino […]