Nota AI Demonstration of Machine Learning at the Edge on Arm Platforms with NetsPresso AutoML

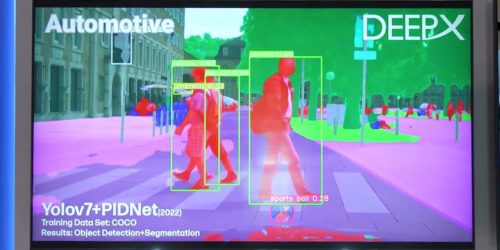

Won Eui Hong, Research Engineer at Nota AI, demonstrates the company’s latest edge AI and vision technologies and products in Arm’s booth at the 2023 Embedded Vision Summit. Specifically, Hong shows how Nota AI’s NetsPresso AutoML solutions enable machine learning at the edge on Arm platforms. It is critical to enable a vibrant machine […]