Vision Algorithms for Embedded Vision

Most computer vision algorithms were developed on general-purpose computer systems with software written in a high-level language. Some pixel-processing operations (e.g., spatial filtering) have changed very little in the decades since they were first implemented on mainframes. As embedded vision implementations broaden, however, existing high-level algorithms may not fit within system constraints such as memory, power, and processing budget, so new innovation is required to achieve the desired results.
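
To make the spatial-filtering example concrete, here is a minimal sketch of a 3x3 neighborhood filter in plain Python. It assumes a grayscale image stored as a list of rows of integers and replicates edge pixels at the border; the function name `filter3x3` and the box kernel are illustrative choices, not taken from any particular library.

```python
def filter3x3(image, kernel, divisor=1):
    """Apply a 3x3 spatial filter to a grayscale image given as a list of rows.

    Each output pixel is the weighted sum of its 3x3 neighborhood, floor-divided
    by `divisor`. Border pixels reuse the nearest edge pixel (coordinate clamping).
    The kernel is not flipped, so this is correlation; for symmetric kernels such
    as box or Gaussian filters the result equals convolution.
    """
    height, width = len(image), len(image[0])
    out = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            acc = 0
            for ky in (-1, 0, 1):
                for kx in (-1, 0, 1):
                    # Clamp neighbor coordinates so border pixels are replicated.
                    sy = min(max(y + ky, 0), height - 1)
                    sx = min(max(x + kx, 0), width - 1)
                    acc += image[sy][sx] * kernel[ky + 1][kx + 1]
            out[y][x] = acc // divisor
    return out


# Usage: a 3x3 box (mean) filter spreads a single bright pixel over its neighborhood.
BOX = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
print(filter3x3([[0, 0, 0], [0, 9, 0], [0, 0, 0]], BOX, divisor=9))
# prints [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
```

The four nested loops make the cost explicit: nine multiply-accumulates per pixel, which is exactly the inner loop that embedded implementations try to reduce or parallelize.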

Some of this innovation may involve replacing a general-purpose algorithm with a hardware-optimized equivalent. Given the broad range of processors available for embedded vision, algorithm analysis will likely focus on ways to maximize pixel-level processing within system constraints.
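
As one hedged illustration of what a hardware-optimized equivalent can look like, the sketch below computes the common 3x3 Gaussian blur (kernel [[1,2,1],[2,4,2],[1,2,1]] / 16) as two separable 1D passes using only integer adds and shifts, the kind of arithmetic that maps cheaply onto FPGA fabric or fixed-point DSPs. The function name is hypothetical, and a real hardware version would stream pixels rather than hold whole images in memory.

```python
def gaussian3x3_separable(image):
    """3x3 Gaussian blur using only integer additions and shifts.

    The kernel [[1,2,1],[2,4,2],[1,2,1]] / 16 separates into [1,2,1] applied
    horizontally, then vertically. Multiplying by 2 becomes a left shift and
    the final division by 16 becomes a right shift. Borders are handled by
    clamping coordinates (replicating edge pixels).
    """
    height, width = len(image), len(image[0])

    def at(row, i):
        # Read a pixel from one row with the column index clamped to the image.
        return row[min(max(i, 0), width - 1)]

    # Horizontal pass: [1, 2, 1]; results carry a gain of 4 (no division yet).
    horiz = [
        [at(row, x - 1) + (at(row, x) << 1) + at(row, x + 1) for x in range(width)]
        for row in image
    ]
    # Vertical pass on the intermediate rows; the total gain of 16 is removed with >> 4.
    out = []
    for y in range(height):
        up = horiz[max(y - 1, 0)]
        mid = horiz[y]
        down = horiz[min(y + 1, height - 1)]
        out.append([(up[x] + (mid[x] << 1) + down[x]) >> 4 for x in range(width)])
    return out


# Usage
print(gaussian3x3_separable([[7] * 5 for _ in range(5)])[2])
```

Per pixel this needs four additions and three shifts with no multipliers, versus nine multiplies and eight additions for the direct 2D form, and it yields the same results as the direct filter with floor division by 16.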

This section covers both general-purpose operations (e.g., edge detection) and hardware-optimized versions (e.g., parallel adaptive filtering in an FPGA). Many sources exist for general-purpose algorithms. The Embedded Vision Alliance is one of the best industry resources for learning about algorithms that map to specific hardware, because Alliance Members share this information directly with the vision community.
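
Edge detection is a typical general-purpose operation. The sketch below applies the standard 3x3 Sobel operators and thresholds |Gx| + |Gy|, an approximation of the Euclidean gradient magnitude often used in fixed-point or FPGA implementations because it avoids a square root. The function name, the `threshold` parameter, and the clamped borders are assumptions for illustration.

```python
def sobel_edges(image, threshold):
    """Return a binary edge map (1 = edge) using 3x3 Sobel gradients.

    Gradient magnitude is approximated as |Gx| + |Gy| instead of
    sqrt(Gx**2 + Gy**2). Border pixels are handled by clamping coordinates.
    """
    gx_k = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))    # responds to vertical edges
    gy_k = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))    # responds to horizontal edges
    height, width = len(image), len(image[0])
    edges = []
    for y in range(height):
        row = []
        for x in range(width):
            gx = gy = 0
            for ky in range(3):
                for kx in range(3):
                    sy = min(max(y + ky - 1, 0), height - 1)
                    sx = min(max(x + kx - 1, 0), width - 1)
                    p = image[sy][sx]
                    gx += gx_k[ky][kx] * p
                    gy += gy_k[ky][kx] * p
            row.append(1 if abs(gx) + abs(gy) >= threshold else 0)
        edges.append(row)
    return edges


# Usage: a vertical step from 0 to 100 is detected in the two columns beside the step.
step = [[0, 0, 100, 100] for _ in range(4)]
for line in sobel_edges(step, threshold=100):
    print(line)
```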

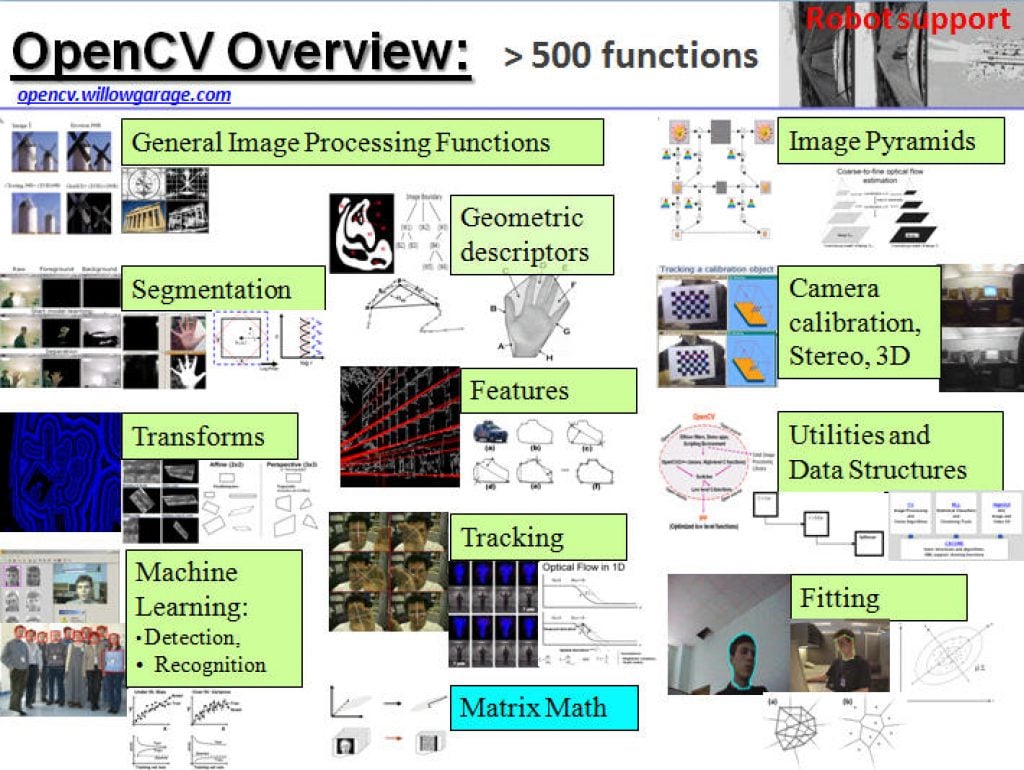

General-purpose computer vision algorithms

One of the most popular sources of computer vision algorithms is the OpenCV Library. OpenCV is open source; it was originally written in C, but since version 2.0 its primary interface has been C++, with bindings for other languages such as Python and Java. For more information, see the Alliance’s interview with OpenCV Foundation President and CEO Gary Bradski, along with other OpenCV-related materials on the Alliance website.

Hardware-optimized computer vision algorithms

Several vendors of programmable devices and development tools have created optimized versions of off-the-shelf computer vision libraries. NVIDIA, for example, works closely with the OpenCV community and has created GPU-accelerated (GPGPU) versions of OpenCV algorithms. MathWorks provides MATLAB functions/objects and Simulink blocks for many computer vision algorithms within its Computer Vision System Toolbox, while also allowing vendors to create their own libraries of functions optimized for a specific programmable architecture. National Instruments offers its Vision Development Module library for LabVIEW. Xilinx is another example of a vendor with an optimized computer vision library, which it provides to customers as Plug and Play IP cores for creating hardware-accelerated vision algorithms in an FPGA.
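
Vendor IP cores differ in their details, but FPGA vision pipelines commonly share one structure: pixels arrive in raster order, line buffers hold the previous rows, and a small register window supplies each neighborhood to the filter logic. The following Python emulation of that pattern is a generic sketch, not Xilinx's IP; the function name is hypothetical, and it emits only interior windows (no border handling).

```python
def stream_3x3_windows(pixels, width):
    """Yield (row, col, window) for every full 3x3 neighborhood in a raster stream.

    `row`, `col` give the window's center pixel; `window` is a 3x3 list of lists.
    """
    line1 = [0] * width  # line buffer holding image row y-1
    line2 = [0] * width  # line buffer holding image row y-2
    window = [[0, 0, 0] for _ in range(3)]  # 3x3 register window, top row first
    for index, pixel in enumerate(pixels):
        y, x = divmod(index, width)
        # The new window column is this pixel plus the two pixels above it.
        column = (line2[x], line1[x], pixel)
        for r in range(3):
            # Shift the window left one column and load the new column on the right.
            window[r] = [window[r][1], window[r][2], column[r]]
        # Age the line buffers at this column: row y-1 becomes y-2, the new pixel becomes y-1.
        line2[x] = line1[x]
        line1[x] = pixel
        # Stale columns from the previous row are shifted out by x == 2, so no reset is
        # needed; the window now covers rows y-2..y and columns x-2..x.
        if y >= 2 and x >= 2:
            yield y - 1, x - 1, [list(values) for values in window]


# Usage: stream a 4x4 image and apply a 3x3 box filter to each window.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
stream = [p for row in image for p in row]
for row, col, window in stream_3x3_windows(stream, width=4):
    print(row, col, sum(map(sum, window)) // 9)
```

Only two rows of storage are needed regardless of image height, which is why this pattern suits the on-chip block RAM of an FPGA.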

Other vision libraries

- Halcon

- Matrox Imaging Library (MIL)

- Cognex VisionPro

- VXL

- CImg

- Filters

Arm at NeurIPS 2025: How AI Research is Shaping the Future of Intelligent Computing

This blog post was originally published at Arm’s website. It is reprinted here with the permission of Arm. NeurIPS 2025 provided Arm with a unique opportunity to share the latest technical trends and insights with the global AI research community. NeurIPS is one of the world’s leading AI research conferences, acting as a thriving global hub for

Low-Light Image Enhancement: YUV vs RAW – What’s the Difference?

This blog post was originally published at Visidon’s website. It is reprinted here with the permission of Visidon. In the world of embedded vision—whether for mobile phones, surveillance systems, or smart edge devices—image quality in low-light conditions can make or break user experience. That’s where advanced AI-based denoising algorithms come into play. At our company, we

NVIDIA-Accelerated Mistral 3 Open Models Deliver Efficiency, Accuracy at Any Scale

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. The new Mistral 3 open model family delivers industry-leading accuracy, efficiency, and customization capabilities for developers and enterprises. Optimized from NVIDIA GB200 NVL72 to edge platforms, Mistral 3 includes: One large state-of-the-art sparse multimodal and multilingual mixture of

NVIDIA Advances Open Model Development for Digital and Physical AI

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. NVIDIA releases new AI tools for speech, safety and autonomous driving — including NVIDIA DRIVE Alpamayo-R1, the world’s first open industry-scale reasoning vision language action model for mobility — and a new independent benchmark recognizes the openness and

Let’s Visit the Zoo

This blog post was originally published at Quadric’s website. It is reprinted here with the permission of Quadric. The term “model zoo” first gained prominence in the world of AI / machine learning beginning in the 2016-2017 timeframe. Originally used to describe open-source public repositories of working AI models – the most prominent of which today

Small Models, Big Heat — Conquering Korean ASR with Low-bit Whisper

This blog post was originally published at ENERZAi’s website. It is reprinted here with the permission of ENERZAi. Today, we’ll share results where we re-trained the original Whisper for optimal Korean ASR (Automatic Speech Recognition), applied Post-Training Quantization (PTQ), and provided a richer Pareto analysis so customers with different constraints and requirements can pick exactly what

STMicroelectronics Introduces the Industry’s Largest MCU Model Zoo to Accelerate Physical AI Time to Market

Nov 18, 2025 Geneva, Switzerland — STMicroelectronics (NYSE: STM), a global semiconductor leader serving customers across the spectrum of electronics applications, has unveiled new models and enhanced project support for its STM32 AI Model Zoo to accelerate the prototyping and development of embedded AI applications. This marks a significant expansion for what is already the industry’s largest

SAM3: A New Era for Open‑Vocabulary Segmentation and Edge AI

Quality training data – especially segmented visual data – is a cornerstone of building robust vision models. Meta’s recently announced Segment Anything Model 3 (SAM3) arrives as a potential game-changer in this domain. SAM3 is a unified model that can detect, segment, and even track objects in images and videos using both text and visual

Smarter Smartphone Photography: Unlocking the Power of Neural Camera Denoising with Arm SME2

This blog post was originally published at Arm’s website. It is reprinted here with the permission of Arm. Discover how SME2 brings flexible, high-performance AI denoising to mobile photography for sharper, cleaner low-light images. Every smartphone photographer has seen it. Images that look sharp in daylight but fall apart in dim lighting. This happens because

The Need for Continuous Training in Computer Vision Models

This blog post was originally published at Plainsight Technologies’ website. It is reprinted here with the permission of Plainsight Technologies. A computer vision model that works under one lighting condition, store layout, or camera angle can quickly fail as conditions change. In the real world, nothing is constant: seasons change, lighting shifts, new objects appear and

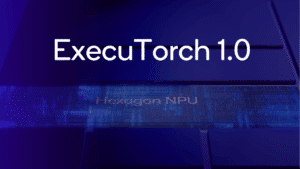

Bringing Edge AI Performance to PyTorch Developers with ExecuTorch 1.0

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. ExecuTorch 1.0, an open source solution to training and inference on the Edge, becomes available to all developers. Qualcomm Technologies contributed the ExecuTorch repository for developers to access Qualcomm® Hexagon™ NPU directly. This streamlines the developer workflow

NVIDIA Contributes to Open Frameworks for Next-generation Robotics Development

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. At the ROSCon robotics conference, NVIDIA announced contributions to the ROS 2 robotics framework and the Open Source Robotics Alliance’s new Physical AI Special Interest Group, as well as the latest release of NVIDIA Isaac ROS. This

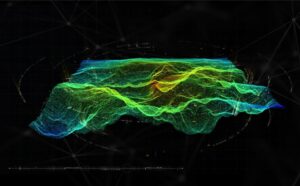

Unleash Real-time LiDAR Intelligence with BrainChip Akida On-chip AI

This blog post was originally published at BrainChip’s website. It is reprinted here with the permission of BrainChip. Accelerating LiDAR Point Cloud with BrainChip’s Akida™ PointNet++ Model. LiDAR (Light Detection and Ranging) technology is the key enabler for advanced Spatial AI—the ability of a machine to understand and interact with the physical world in three

NVIDIA Blackwell: The Impact of NVFP4 For LLM Inference

This blog post was originally published at Nota AI’s website. It is reprinted here with the permission of Nota AI. With the introduction of NVFP4—a new 4-bit floating point data type in NVIDIA’s Blackwell GPU architecture—LLM inference achieves markedly improved efficiency. Blackwell’s NVFP4 format (RTX PRO 6000) delivers up to 2× higher LLM inference efficiency
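
To illustrate the block-scaled 4-bit idea, the sketch below rounds values to the E2M1 grid (magnitudes 0, 0.5, 1, 1.5, 2, 3, 4, 6) with one scale factor per 16-value block, the block size NVIDIA describes for NVFP4. It is simplified under stated assumptions: the per-block scale is kept as a Python float rather than encoded in FP8 (E4M3), the additional per-tensor scale is omitted, and ties round toward the smaller magnitude. All names are hypothetical.

```python
# Non-negative magnitudes representable by a 4-bit E2M1 float (the sign is a separate bit).
E2M1_MAGNITUDES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)
BLOCK_SIZE = 16  # values sharing one scale factor


def quantize_fp4_block(block):
    """Quantize one block to E2M1 codes plus a shared scale (simplified sketch)."""
    amax = max(abs(v) for v in block)
    # Map the block's largest magnitude onto 6.0, the largest E2M1 value.
    # Assumption: the scale stays a Python float instead of being encoded as FP8 E4M3.
    scale = amax / 6.0 if amax > 0 else 1.0
    codes = []
    for v in block:
        # Round |v| / scale to the nearest grid point; ties resolve to the smaller magnitude.
        mag = min(E2M1_MAGNITUDES, key=lambda q: abs(abs(v) / scale - q))
        codes.append(mag if v >= 0 else -mag)
    return scale, codes


def quantize_blocks(values):
    """Split values into BLOCK_SIZE chunks and quantize each chunk independently."""
    return [quantize_fp4_block(values[i:i + BLOCK_SIZE])
            for i in range(0, len(values), BLOCK_SIZE)]


def dequantize_blocks(blocks):
    """Reconstruct approximate values: each code times its block's scale."""
    return [scale * code for scale, codes in blocks for code in codes]


# Usage: 18 values become two blocks, each with its own scale.
weights = [0.12, -0.5, 0.33, 0.9] * 4 + [2.0, -7.5]
print(dequantize_blocks(quantize_blocks(weights)))
```

A per-block scale lets a small outlier-free block use the full 4-bit grid instead of being crushed toward zero by a large value elsewhere in the tensor.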

Don’t Give Your Business Data to AI Companies

This blog post was originally published at Plainsight Technologies’ website. It is reprinted here with the permission of Plainsight Technologies. I have joined Plainsight Technologies as CEO to do something radical: not steal your data. Vision is our most powerful sense. We navigate the world, recognize faces, assess our surroundings, operate vehicles, and make split-second

NanoEdge AI Studio v5, the First AutoML Tool with Synthetic Data Generation

This blog post was originally published at STMicroelectronics’ website. It is reprinted here with the permission of STMicroelectronics. NanoEdge AI Studio v5 is the first AutoML tool for STM32 microcontrollers capable of generating anomaly data out of typical logs, thanks to a new feature we call Synthetic Data Generation. Additionally, the latest version makes it