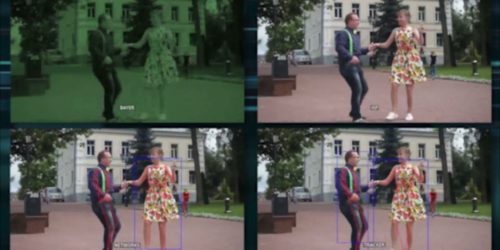

Blaize Demonstration of People and Pose Detection and Key Point Tracking Using the Picasso SDK

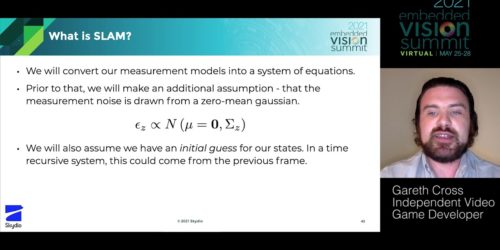

Rajesh Anantharaman, Senior Director of Products at Blaize, demonstrates the company’s latest edge AI and vision technologies and products at the 2021 Embedded Vision Summit. Specifically, Anantharaman demonstrates people and pose detection, along with key point tracking, using the Blaize Picasso SDK. A high-resolution, multi-neural-network, multi-function, graph-native application built on the Blaize […]