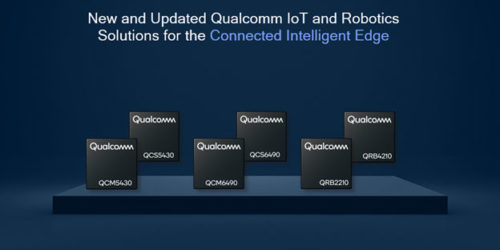

2023 Edge AI and Vision Product of the Year Award Winner Showcase: Qualcomm (Cameras and Sensors)

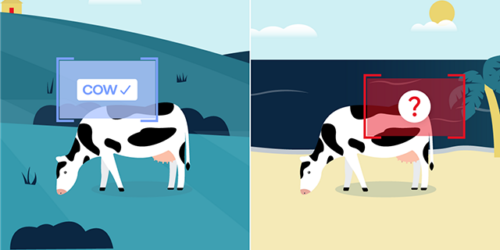

Qualcomm’s Cognitive ISP is the 2023 Edge AI and Vision Product of the Year Award Winner in the Cameras and Sensors category. The Cognitive ISP (within the Snapdragon 8 Gen 2 Mobile Platform) is the only ISP for smartphones that can apply the AI photo-editing technique called “Semantic Segmentation” in real time. Semantic Segmentation is like […]